ماه: شهریور 1404

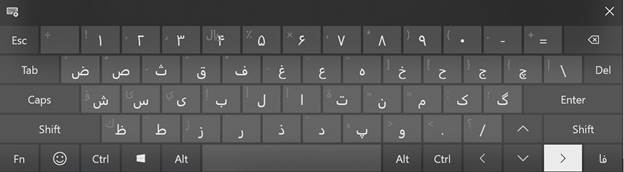

Persian Ten-Finger Typing Practice (Tirotir)

تمرین تایپ فارسی آنلاین/آفلاین

تمرین تایپ انگلیسی آنلاین/آفلاین

آموزش تایپ دهانگشتی فارسی

درس ۱: حروف «ت»، «ب» + کلید فاصله

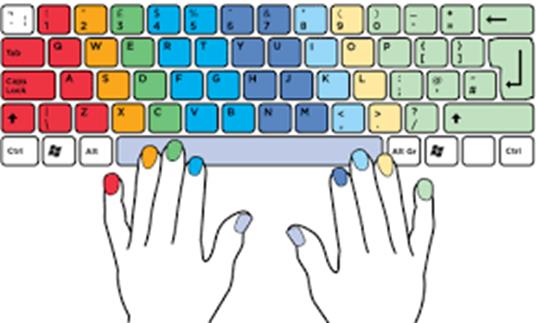

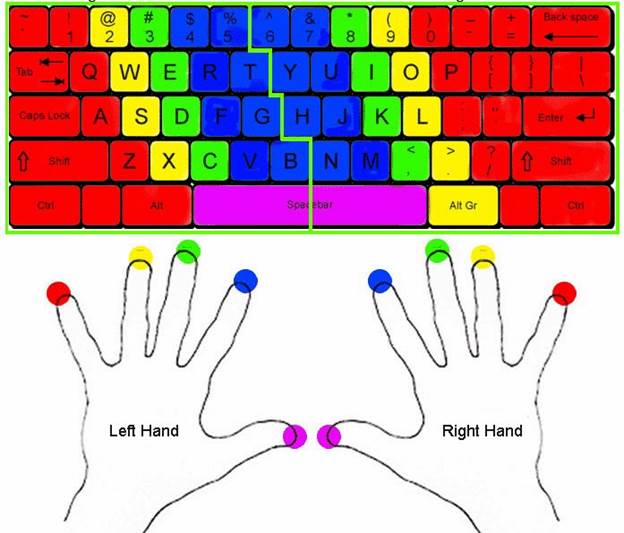

✅ یادگیری: محل قرارگیری انگشتان اشاره و شست؛ کلیدهای پایه.

🧠 نکات مهم:

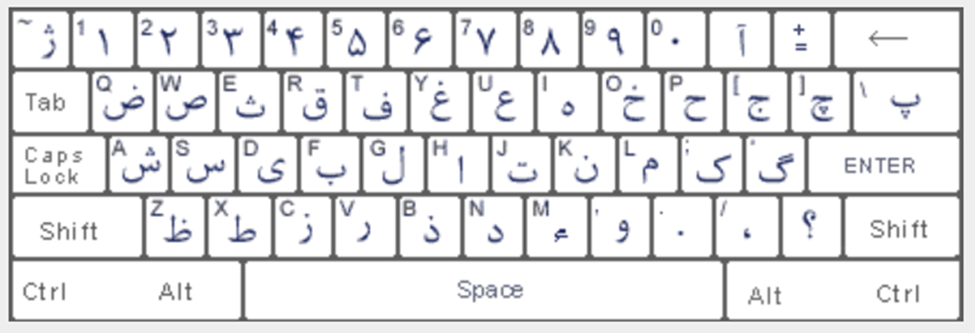

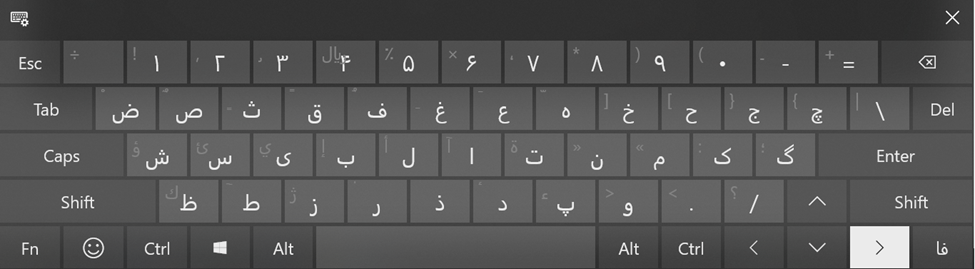

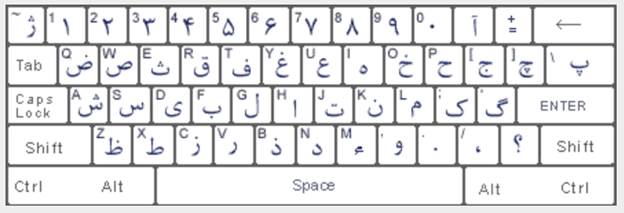

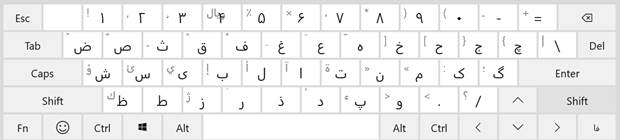

- Left index finger on «ب» (the F key on the standard Persian layout)

- Right index finger on «ت» (the J key)

- Thumbs press the space bar

✍️ تمرینها:

- ت ب ت ب ب ت ت ب

- ت ب ت ب ت ب ب ت

- تبت ببت تب تب تبتب ببتب

- چالش سرعت (۳۰ ثانیه): ت ب ت ب ت ب ت ب

درس ۲: حروف «ن» و «ی»

✅ یادگیری: استفاده از انگشتان میانی

🧠 نکات مهم:

- «ن» = انگشت میانی دست راست

- «ی» = انگشت میانی دست چپ

✍️ تمرینها:

- ن ی ن ن ی ی ن ی

- ت ب ن ی ب ن ی ت ن ت ی

- بتن یتن تبنی بیتی نیتی

- ن ی ت ب ن ت ی ب ت ب ن ی

درس ۳: حروف «م» و «س»

✅ یادگیری: استفاده از انگشتان انگشتری

🧠 نکات مهم:

- «م» = انگشت انگشتری دست راست

- «س» = انگشت انگشتری دست چپ

✍️ تمرینها:

- م س م س س م

- ت ب ن ی م س ب س ن م ت ی

- سمب نیتم یبمس تنسم

- م م س س ی ی ن ن ب ب ت ت

درس ۴: حروف «ک» و «ش»

✅ یادگیری: استفاده از انگشت کوچک دست راست و چپ

🧠 نکات مهم:

- «ک» = انگشت کوچک دست راست

- «ش» = انگشت کوچک دست چپ

✍️ تمرینها:

- ش ک ش ش ک ک ش

- ک ش م س ت ب

- کشم شتنب شمسک

- ش ک م س ن ی ب ت

درس ۵: مرور و ترکیب ۸ حرف پایه

✅ مرور: ت، ب، ن، ی، م، س، ک، ش + فاصله

✍️ تمرینها:

- ت ب ن ی م س ک ش

- شبنم، تمیش، بیکس، نیمت

- شمس تبریزی؛ بکتاش نیکسیرت

- تمرین سرعت: بنویس بدون نگاه به کیبورد (در ۱ دقیقه):

«شکستن سکوت شب، بیداری مداوم است.»

Simple Sample 1: Institute Final Exam

«مفاهیم اولیه و اساسی کامپیوتر»

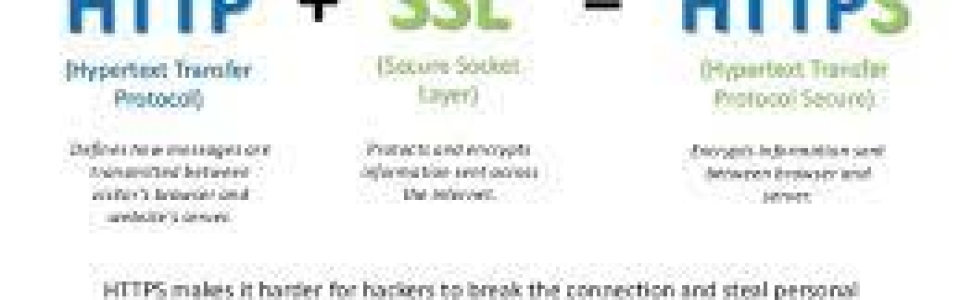

📝 سوالات تشریحی مفاهیم اولیه ICDL:

1. کامپیوتر را با زبان ساده تعریف کنید و مراحل اصلی کار آن را بنویسید.

2. تفاوت بین سختافزار (Hardware) و نرمافزار (Software) را توضیح دهید و برای هرکدام دو مثال بنویسید.

3. منظور از داده (Data) و اطلاعات (Information) چیست؟ با یک مثال توضیح دهید.

4. RAM و هارد دیسک چه تفاوتی با هم دارند؟ عملکرد هرکدام را توضیح دهید.

5. اینترنت چیست و چه کاربردهایی در زندگی روزمره دارد؟ حداقل ۳ مورد بنویسید.

6. سیستمعامل چیست؟ چه وظیفهای دارد و یک مثال برای آن بنویسید.

7. اگر هنگام کار با اینترنت یک ایمیل ناشناس با فایل پیوست برایتان بیاید، چه کار میکنید؟ چرا؟

8. یک مثال واقعی از کاربرد کامپیوتر در مدرسه یا خانه بنویسید و اجزای سختافزاری و نرمافزاری مورد استفاده در آن را مشخص کنید.

9. چرا داشتن رمز عبور قوی در اینترنت مهم است؟ چه نکاتی را باید برای ساخت رمز قوی رعایت کنیم؟

10. مراحل ذخیرهسازی یک فایل در کامپیوتر را به زبان ساده بنویسید (از باز کردن برنامه تا ذخیرهی فایل در فلش مموری).

🟦 بخش ویندوز (Windows) – سوالات عملی:

1. ایجاد پوشه و مدیریت فایلها

🔸 یک پوشه با نام ICDL_Practice در دسکتاپ بسازید.

🔸 داخل آن یک پوشهی دیگر به نام Documents و یک فایل متنی با نام Test.txt بسازید.

🔸 فایل را به پوشهی Documents منتقل کنید و سپس پوشهی اصلی را به فلش مموری کپی کنید.

2. شخصیسازی دسکتاپ

🔸 تصویر پسزمینه دسکتاپ را تغییر دهید و یک تصویر دلخواه از پوشهی Pictures انتخاب کنید.

🔸 رزولوشن صفحه را روی بالاترین گزینه تنظیم کنید.

3. مدیریت پنجرهها

🔸 دو پنجره باز کنید (مثلاً Notepad و File Explorer)

🔸 با استفاده از کلیدهای ترکیبی، آنها را در دو طرف صفحه قرار دهید.

🔸 بین پنجرهها جابهجا شوید و توضیح دهید از چه کلیدهایی استفاده کردید.

4. میانبرها و نوار وظیفه

🔸 یک میانبر از برنامهی Word روی دسکتاپ بسازید.

🔸 آن را به نوار وظیفه (Taskbar) سنجاق کنید.

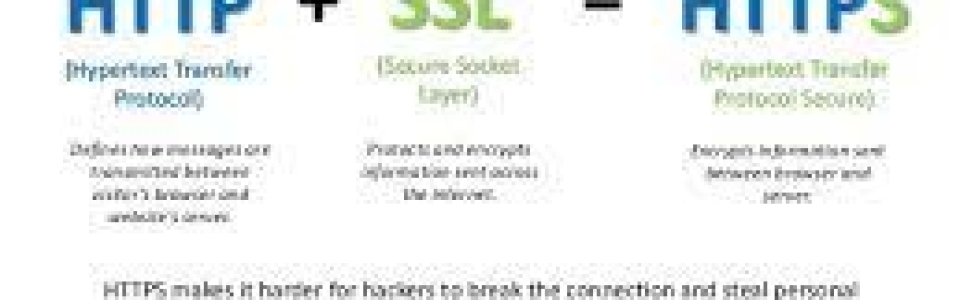

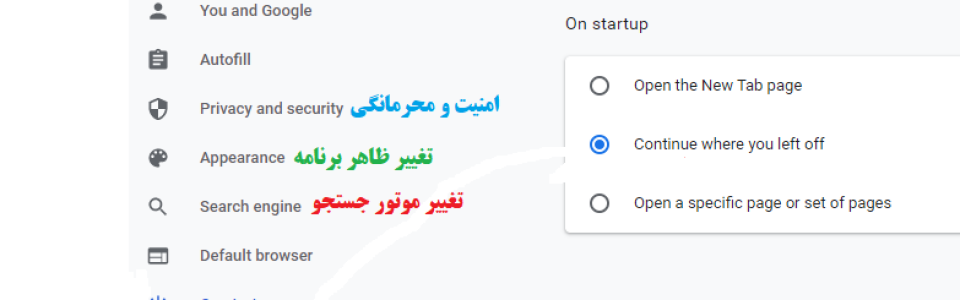

🟨 بخش اینترنت (Internet) – سوالات عملی:

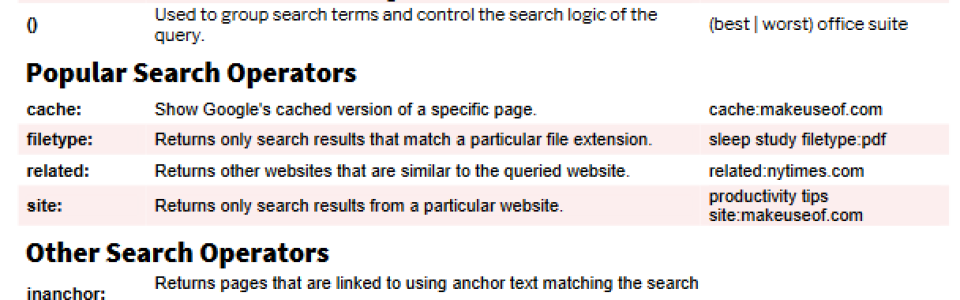

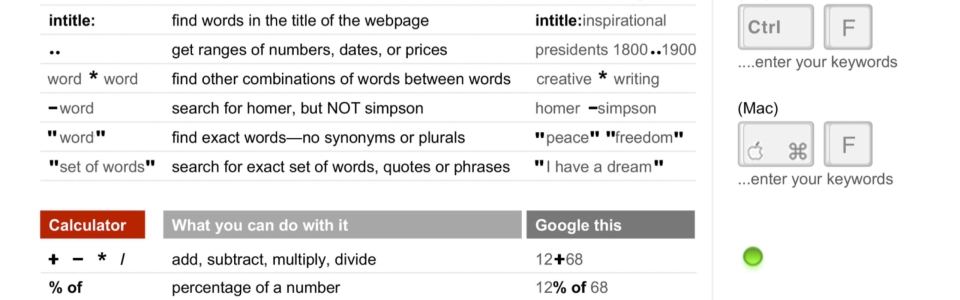

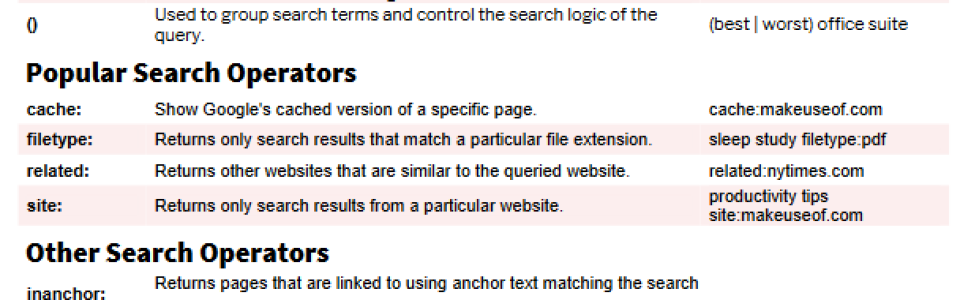

1. استفاده از موتور جستجو

🔸 با استفاده از مرورگر Chrome یا Firefox وارد سایت www.google.com شوید.

🔸 موضوع “هوش مصنوعی چیست؟” را جستجو کرده و خلاصهای از یک مقالهی مرتبط بنویسید.

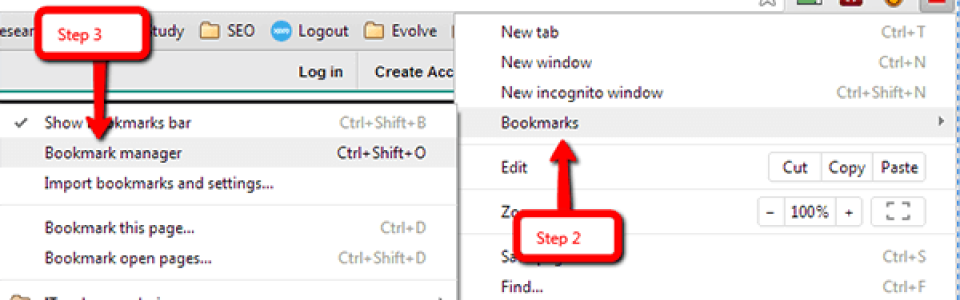

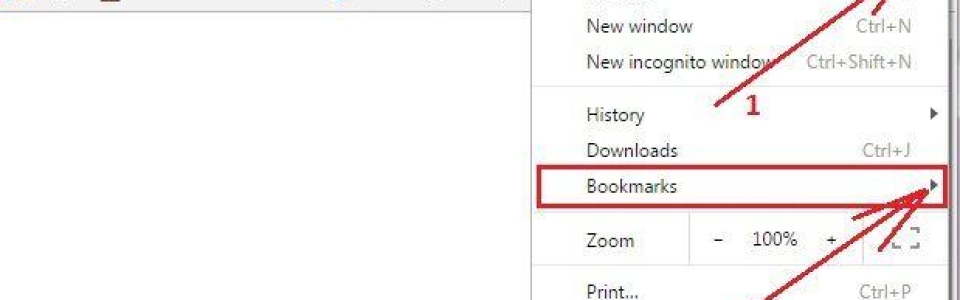

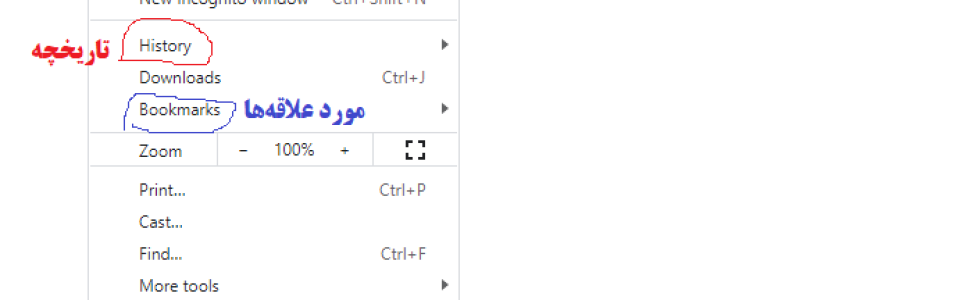

2. مدیریت بوکمارکها

🔸 سایت www.wikipedia.org را باز کرده و آن را به بوکمارکهای مرورگر خود اضافه کنید.

🔸 نام آن را به “دانشنامه ویکی” تغییر دهید و در پوشهای به نام “ICDL” قرار دهید.

3. دانلود فایل و مشاهدهی مسیر ذخیره

🔸 یک فایل PDF از سایت رسمی دانلود کنید.

🔸 مسیر ذخیرهشدن فایل را مشخص کرده و آن را در پوشهی Downloads باز کنید.

4. ایمیل و پیوستها

🔸 وارد ایمیل خود شوید (مثلاً Gmail).

🔸 یک ایمیل جدید بنویسید، موضوع را “تمرین ICDL” قرار دهید و یک فایل متنی پیوست (Attach) کنید.

🔸 برای معلم یا همکلاسی خود ارسال کنید.

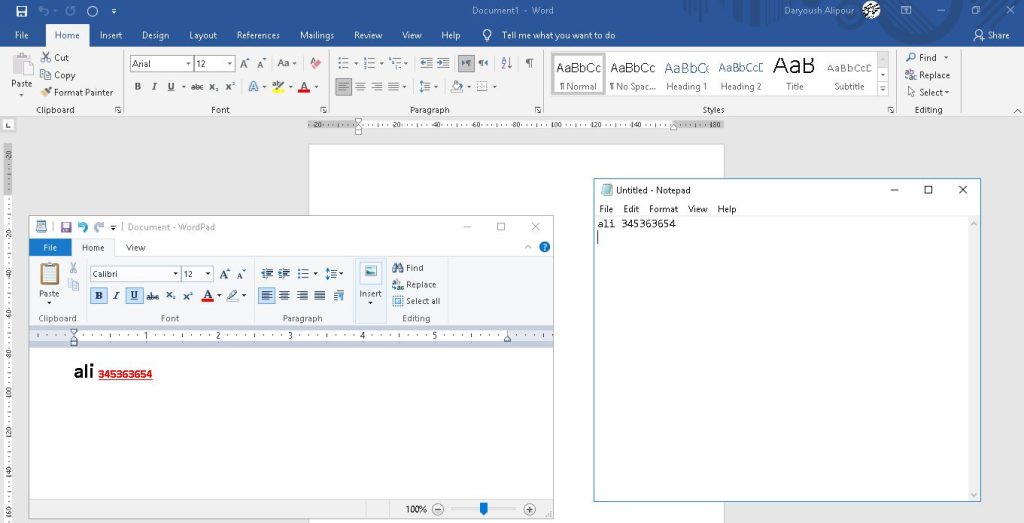

Word

1-یک سند 5 صفحه ای ایجاد کنید که هر 3 دقیقه یکبار به طور خودکار ذخیره شود و بهصورت فقط خواندنی باز شود.

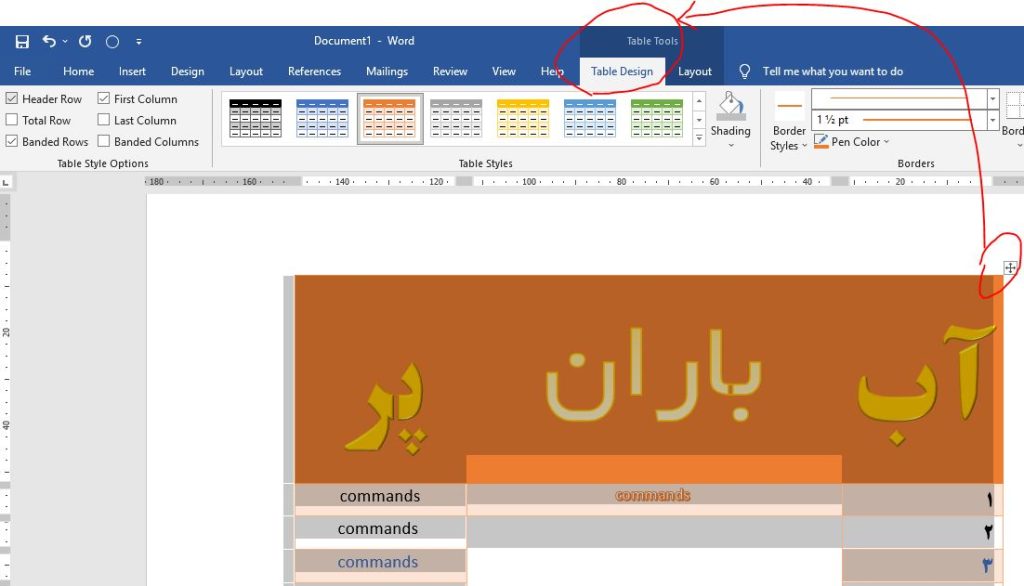

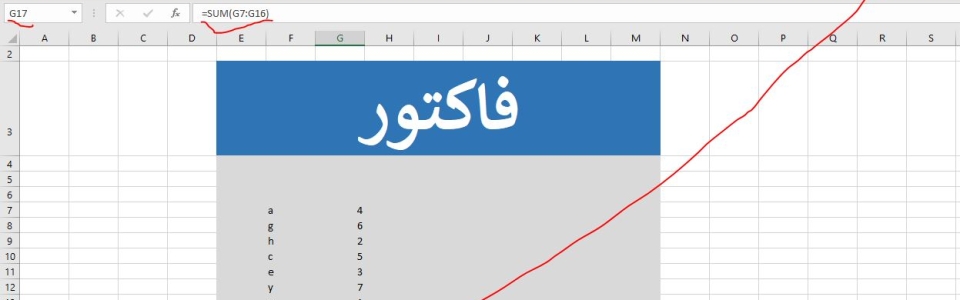

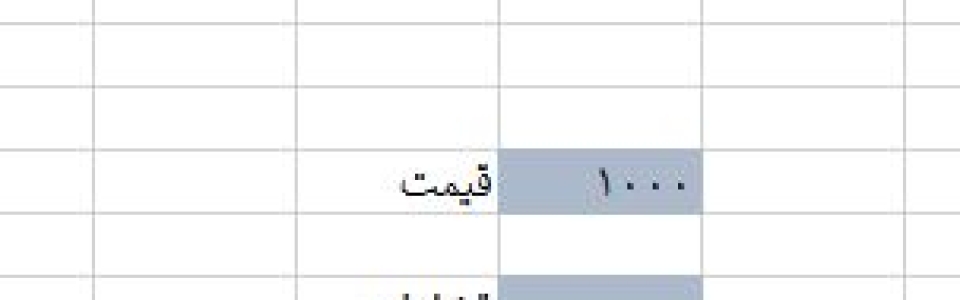

2-جدول زیر را ایجاد کنید.

| Description | Quantity | Price |
| --- | --- | --- |
| | | |
| Total | | |

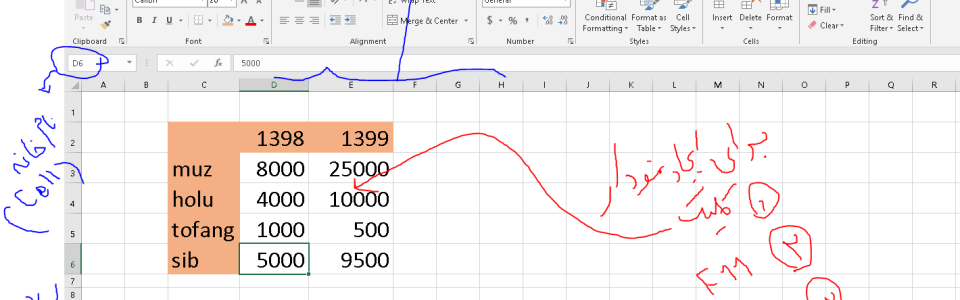

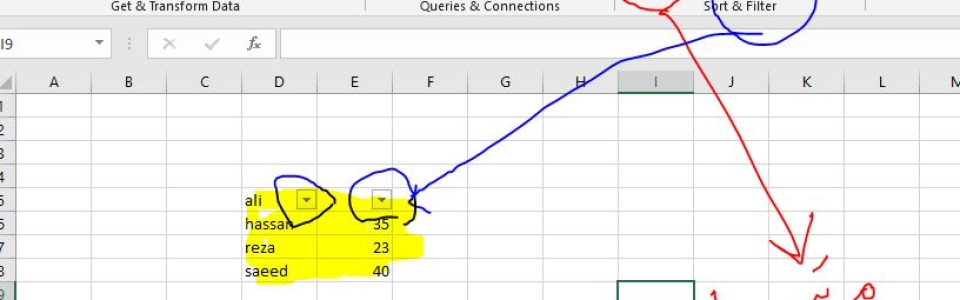

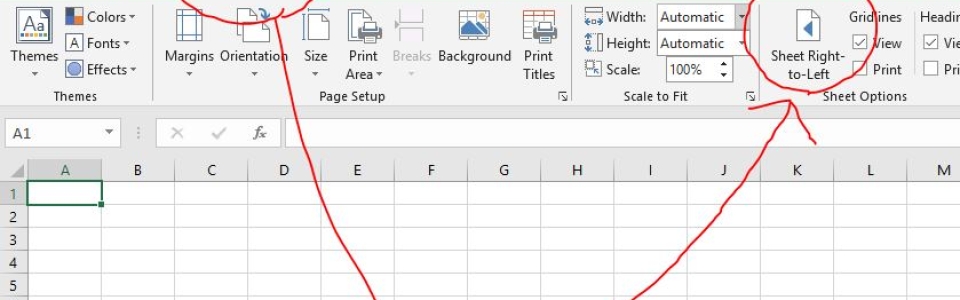

Excel

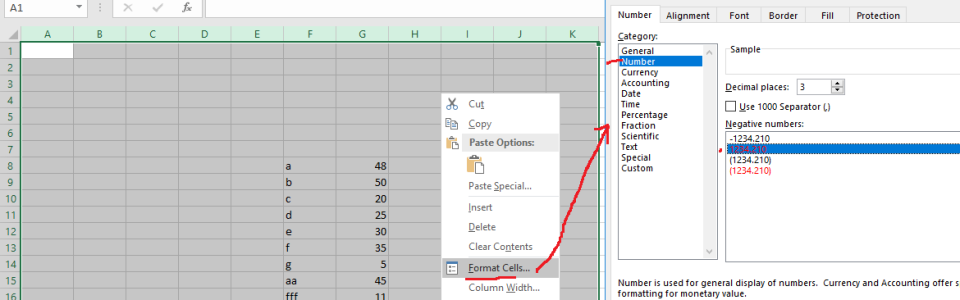

3-یک صفحه کاری ایجاد کنید و نام کاربرگ اول آن را tirotir قرار دهید و سپس قالببندی اعداد را تغییر دهید تا اعداد منفی قرمز دیده شوند.

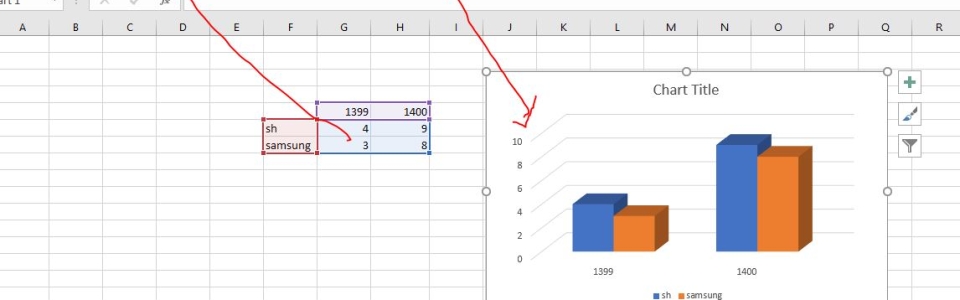

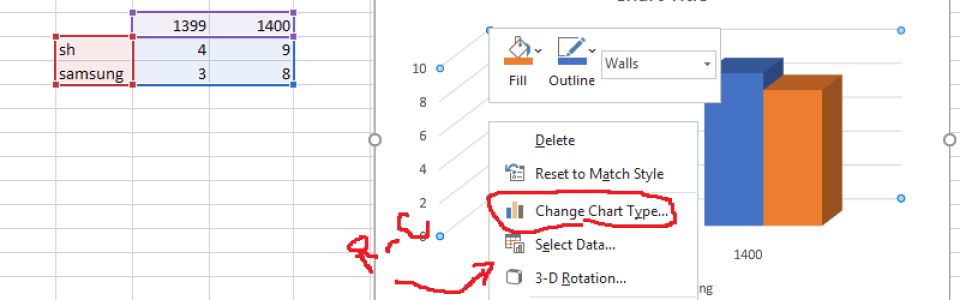

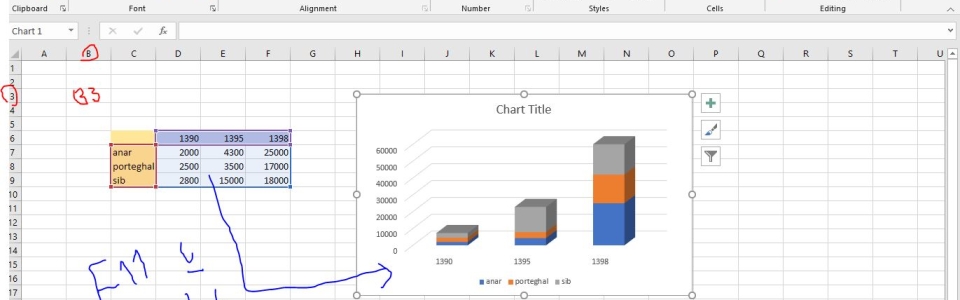

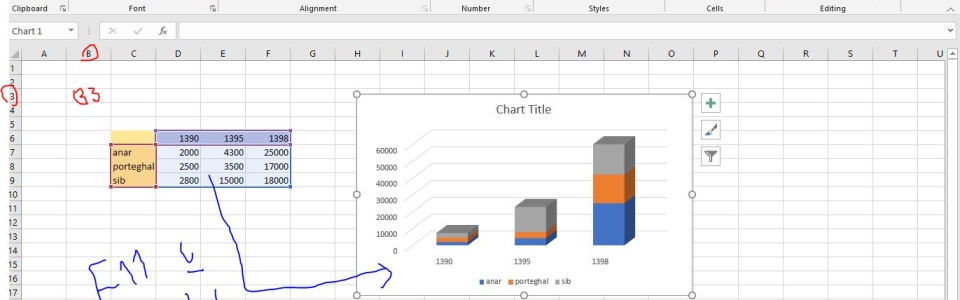

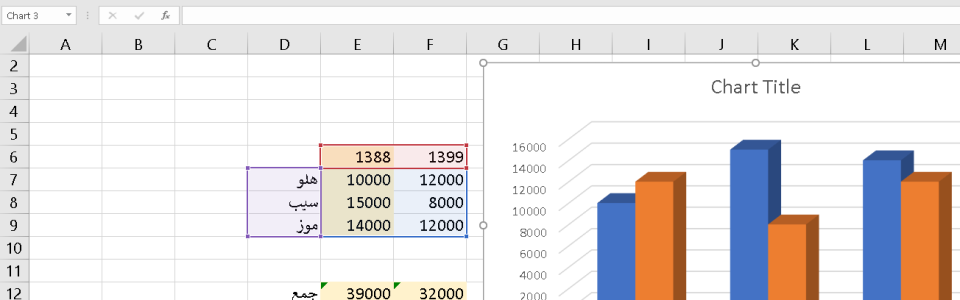

4- جدول زیر را با فرمول مناسب ایجاد کنید و یک نمودار ستونی ۳ بعدی از آن نمایش دهید.

| Course | Credits | Grade | Weighted grade |
| --- | --- | --- | --- |
| Language | 2 | 15 | |
| Chemistry | 3 | 16 | |
| Physics | 4 | 14 | |
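The weighted-grade column can be cross-checked outside Excel. The sketch below is only an illustration and assumes that "weighted grade" means credits multiplied by grade (in Excel, something like =B2*C2); the exam text itself only asks for "a suitable formula", so treat that definition and the variable names as assumptions.

```python
# Course data copied from the table: (name, credits, grade).
courses = [
    ("Language", 2, 15),
    ("Chemistry", 3, 16),
    ("Physics", 4, 14),
]

# Assumption: weighted grade = credits * grade (Excel: =B2*C2).
weighted = {name: credits * grade for name, credits, grade in courses}

total_credits = sum(credits for _, credits, _ in courses)

# Credit-weighted average (Excel: =SUM(D2:D4)/SUM(B2:B4)).
weighted_average = sum(weighted.values()) / total_credits

print(weighted)
print(round(weighted_average, 2))
```

If the instructor defines the weighted column differently, only the line that builds `weighted` needs to change.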

Power point

5- سه اسلاید ایجاد کنید در اسلاید اول نام خود را بصورت سه بعدی و رنگی و در اسلاید دوم یک دیاگرام و در سومی یک تصویر قرار دهید.

Access

6-جدولی ایجاد کنید که شامل فیلدهای نام و فامیلی و شماره تلفن باشد

7-برای جدول بالا گزارشی تهیه کنید که در سر صفحه آن نام شما و در پا صفحه تاریخ روز درج شده باشد.

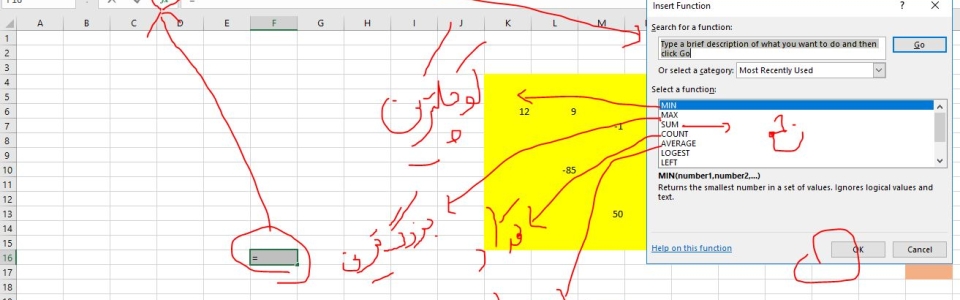

Excel:

3-جدول زیر را با استفاده از توابع پر کنید.

A1=556 و B1=793

| Smaller | Larger | Average | Sum |
| --- | --- | --- | --- |
| | | | |

4- Apply a date format to columns B and C of the current worksheet.

PowerPoint

5- Apply an effect to the word HAPPY so that it is hidden after the animation plays.

6- Create a presentation with 7 slides, number each slide, and arrange for the slides to play in the following order: (3-7-4-6-1-5-2)

Access:

7- Design a table with the following fields and set code-p as the primary key:

code-p (primary key, a number filled in automatically), name (15 characters)

8- Create a form and a report from the table above.

Review of ICDL Fundamentals

آموزشگاه هوش مصنوعی: مفاهیم اولیه کامپیوتر و سختافزار

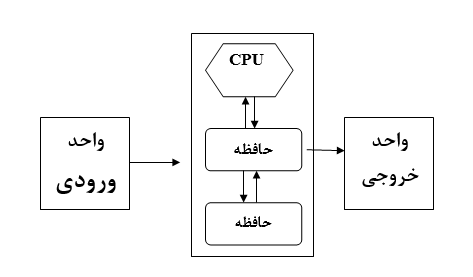

- تعریف کامپیوتر و انواع آن

پرسش: کامپیوتر چیست و چه انواعی دارد؟

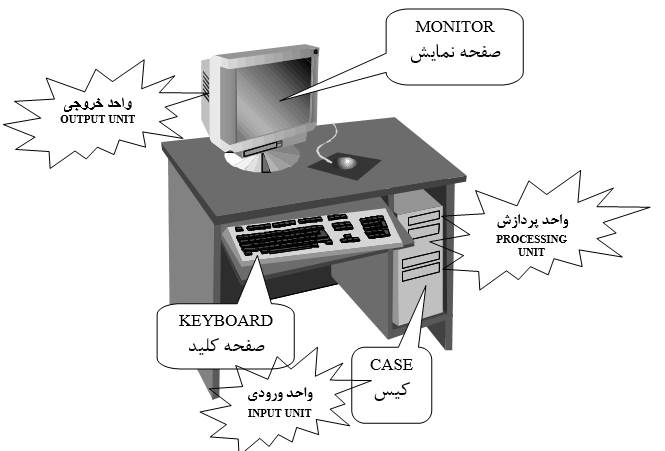

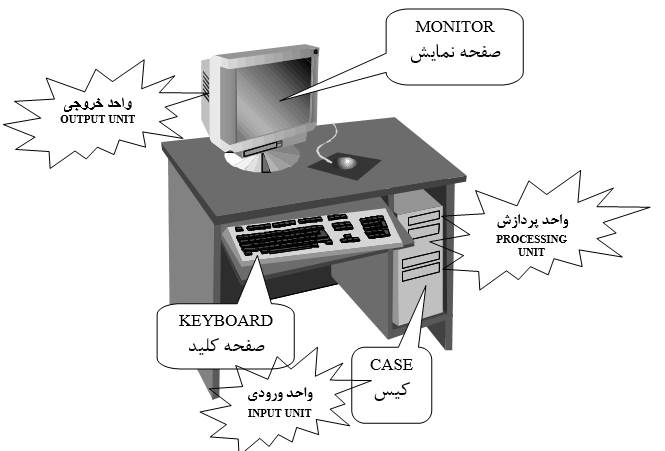

پاسخ: کامپیوتر دستگاهی است که دادهها را پردازش و نتایج را نمایش میدهد. انواع آن شامل دسکتاپ -برای کارهای ثابت-، لپتاپ -قابل حمل-، و تبلت -صفحه لمسی- است.

مثال: یک لپتاپ میتواند برای برنامهنویسی و تبلت برای خواندن کتابهای الکترونیکی استفاده شود. - شناخت اجزای اصلی کامپیوتر

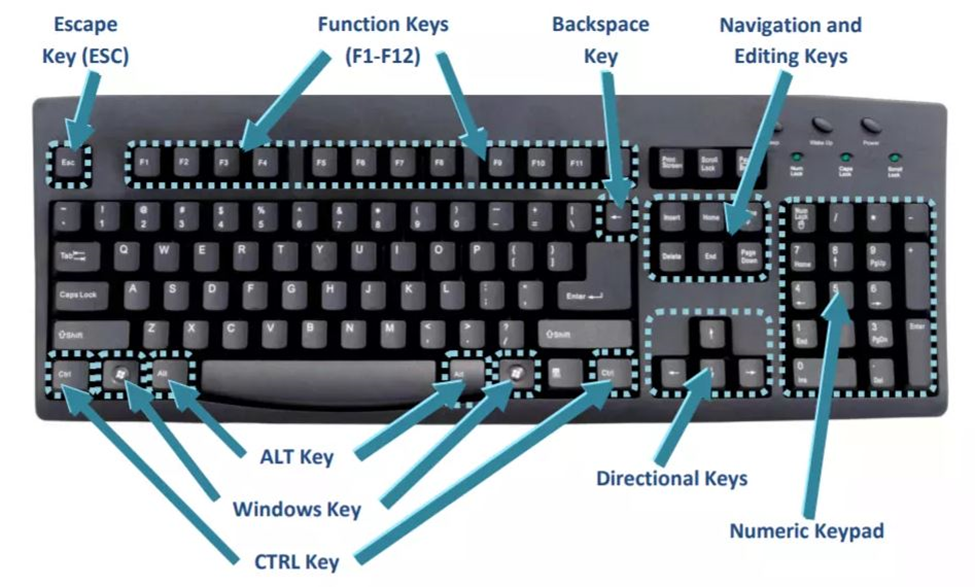

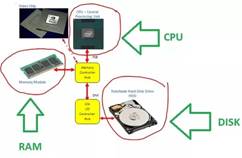

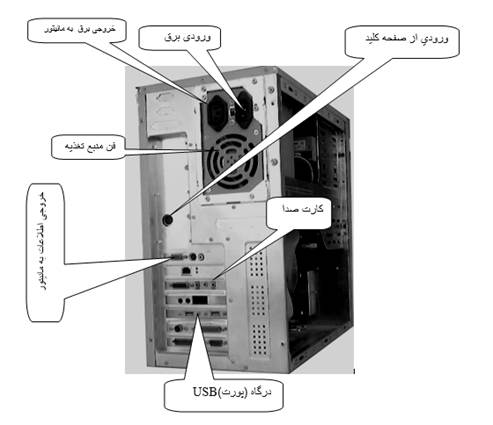

پرسش: اجزای اصلی کامپیوتر کداماند؟

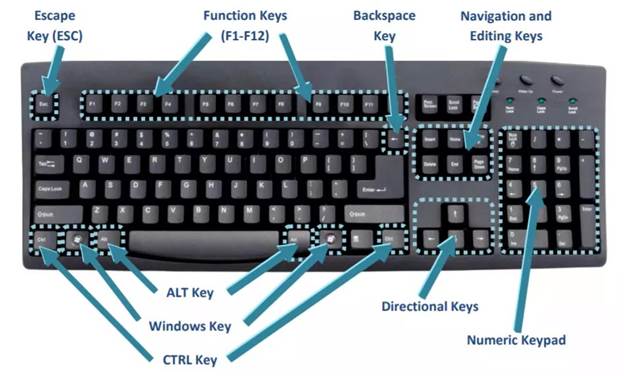

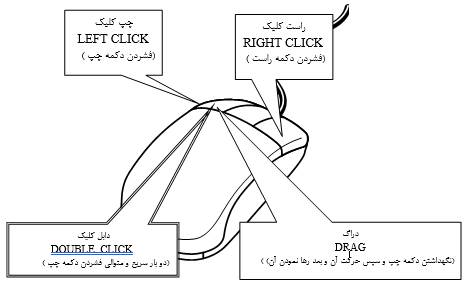

پاسخ: اجزا شامل ورودی -مانند ماوس و کیبورد-، پردازش -CPU-، خروجی -مانیتور، چاپگر-، و ذخیرهسازی -HDD/SSD- هستند.

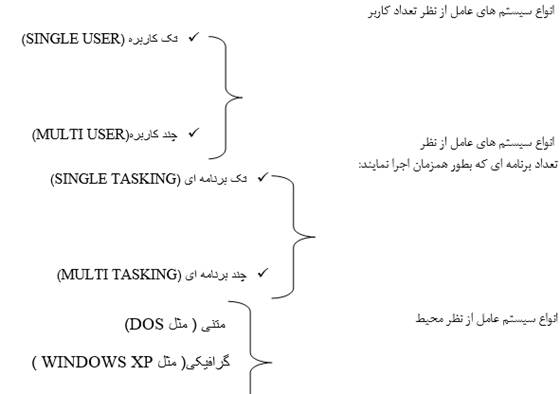

راه عملی: کیبورد را وصل کنید و در برنامه “نوتپد” متن تایپ کنید؛ نتیجه در مانیتور نمایش داده میشود. - مفهوم سیستمعامل

پرسش: سیستمعامل چیست؟

پاسخ: سیستمعامل نرمافزاری است که بین کاربر و سختافزار واسطه میشود؛ ویندوز، لینوکس و مک از نمونهها هستند.

مثال عملی: اگر در ویندوز هستید، کلید Start را فشار دهید و نرمافزار Word را باز کنید. - تفاوت نرمافزار و سختافزار

پرسش: نرمافزار و سختافزار چه تفاوتی دارند؟

پاسخ: سختافزار اجزای فیزیکی سیستم است؛ نرمافزار برنامههایی است که روی آن اجرا میشوند.

راه عملی: ماوس را لمس کنید -سختافزار- و از مرورگر کروم برای جستجو استفاده کنید -نرمافزار-. - مدیریت فایل و پوشهها

پرسش: چگونه فایل و پوشهها را مدیریت کنیم؟

پاسخ: فایلها دادههای ذخیرهشده و پوشهها ساختاری برای مرتبسازی آنها هستند.

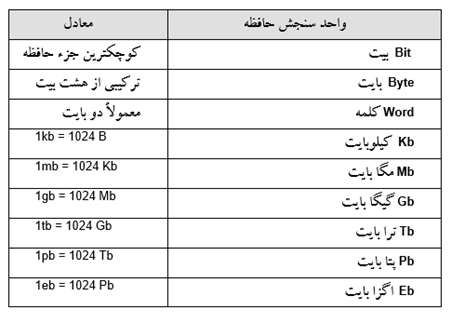

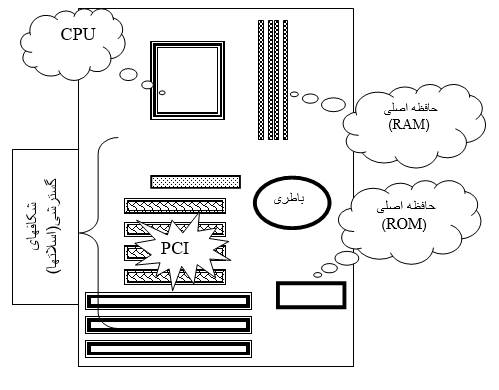

مثال عملی: پوشهای به نام “درس” در دسکتاپ ایجاد کنید و فایلهای متنی خود را داخل آن قرار دهید. - RAM و حافظه ذخیرهسازی

پرسش: RAM چه تفاوتی با HDD/SSD دارد؟

پاسخ: RAM حافظه موقتی است که سرعت پردازش را افزایش میدهد، ولی HDD/SSD برای ذخیرهسازی دائمی دادهها استفاده میشود.

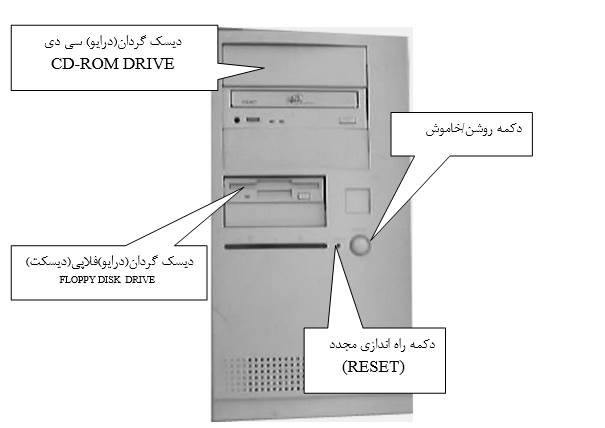

راه عملی: مرورگر را باز کنید -RAM استفاده میشود-، سپس فایلی در هارد دیسک ذخیره کنید. - بوت شدن کامپیوتر

پرسش: بوت شدن چیست؟

پاسخ: بوت فرآیند شروع به کار کامپیوتر است که BIOS یا UEFI نقش اصلی در شناسایی سختافزارها دارد.

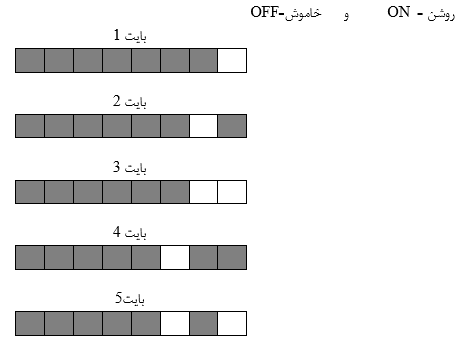

مثال: هنگام روشن کردن سیستم، لوگوی شرکت سازنده ظاهر میشود که نشاندهنده فرآیند بوت است. انواع دادهها

پرسش: دادهها چند نوع هستند؟

پاسخ: دادهها به متنی -docx-، صوتی -mp3-، تصویری -jpg- تقسیم میشوند.

راه عملی: یک آهنگ را در پلیر باز کنید -داده صوتی-.- درایور سختافزاری

پرسش: درایور چیست؟

پاسخ: درایور نرمافزاری است که سختافزار را برای سیستمعامل قابل استفاده میکند.

مثال عملی: اگر پرینتر کار نمیکند، بررسی کنید که درایور آن نصب شده باشد. - پورتهای ارتباطی

پرسش: پورتهای ارتباطی چه کاربردی دارند؟

پاسخ: USB برای اتصال فلش، HDMI برای نمایشگر، و Ethernet برای اتصال به شبکه استفاده میشوند.

راه عملی: فلش را به پورت USB وصل کنید و فایلها را بررسی کنید.

2- سختافزارهای اصلی کامپیوتر

- پردازنده -CPU-

پرسش: پردازنده چه نقشی در کامپیوتر دارد؟

پاسخ: CPU مغز کامپیوتر است و مسئول انجام محاسبات و اجرای دستورات است.

راه عملی: هنگام باز کردن نرمافزاری مانند فتوشاپ، CPU دادهها را پردازش کرده و نرمافزار را اجرا میکند. - کارت گرافیک -GPU-

پرسش: کارت گرافیک چه کاربردی دارد؟

پاسخ: GPU پردازش تصاویر و گرافیکهای سنگین -مانند بازی یا رندر- را انجام میدهد.

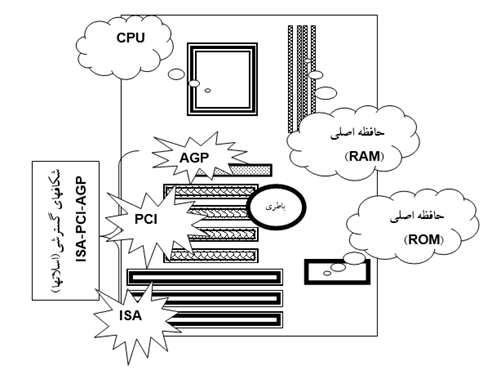

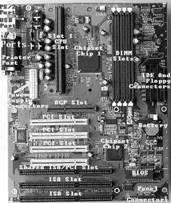

مثال عملی: هنگام بازیهای گرافیکی مانند FIFA، کارت گرافیک تصاویر را پردازش میکند. - مادربرد -Motherboard-

پرسش: مادربرد چیست؟

پاسخ: مادربرد قطعهای است که همه اجزای کامپیوتر مانند CPU، RAM، و GPU را به هم متصل میکند.

راه عملی: مادربرد را مانند مرکز کنترل کامپیوتر تصور کنید؛ تمام کابلها و قطعات به آن متصل میشوند. - حافظههای ذخیرهسازی -HDD/SSD/NVMe-

پرسش: چه تفاوتی بین HDD، SSD، و NVMe وجود دارد؟

پاسخ: HDD سرعت پایینتری دارد و مکانیکی است، SSD سریعتر و الکترونیکی است، و NVMe جدیدترین تکنولوژی با سرعت بسیار بالاست.

راه عملی: اگر بازی یا برنامهای را روی SSD نصب کنید، بسیار سریعتر اجرا میشود. - مانیتور -LCD و LED-

پرسش: مانیتور LCD با LED چه تفاوتی دارد؟

پاسخ: LED روشنایی بیشتر و مصرف کمتری دارد، اما LCD معمولاً ارزانتر است.

مثال: در مانیتور LED تصاویر روشنتر و رنگها زندهتر به نظر میرسند. - منبع تغذیه -Power Supply-

پرسش: منبع تغذیه چه وظیفهای دارد؟

پاسخ: پاور برق مورد نیاز قطعات سیستم را تأمین میکند.

راه عملی: اگر سیستم روشن نمیشود، بررسی کنید که پاور سالم باشد. - ابزارهای ورودی -Input Devices-

پرسش: ابزارهای ورودی چه هستند؟

پاسخ: ابزارهایی که داده را وارد سیستم میکنند، مانند ماوس، کیبورد و اسکنر.

راه عملی: با کیبورد متنی در Word تایپ کنید یا با ماوس روی آیکون کلیک کنید. - ابزارهای خروجی -Output Devices-

پرسش: ابزارهای خروجی چه کاربردی دارند؟

پاسخ: ابزارهایی که خروجی دادهها را نمایش میدهند، مانند مانیتور، اسپیکر، و پرینتر.

راه عملی: سندی را چاپ کنید تا خروجی آن را روی کاغذ ببینید. - قطعات خنککننده -Cooling Devices-

پرسش: قطعات خنککننده چه نقشی دارند؟

پاسخ: فن و هیتسینک دمای قطعات داخلی را کنترل و از داغ شدن بیش از حد جلوگیری میکنند.

راه عملی: هنگام اجرای بازی سنگین، صدای فن بیشتر میشود تا حرارت را کاهش دهد. - شبکههای بیسیم و کارت شبکه

پرسش: کارت شبکه چیست؟

پاسخ: کارت شبکه امکان اتصال کامپیوتر به شبکه بیسیم -Wi-Fi- را فراهم میکند.

راه عملی: Wi-Fi لپتاپ را روشن کنید و به یک شبکه متصل شوید.

3- اصول اولیه کار با کامپیوتر

- روشن و خاموش کردن صحیح

پرسش: چرا روشن و خاموش کردن صحیح مهم است؟

پاسخ: خاموش کردن ناگهانی میتواند به سیستمعامل یا سختافزار آسیب برساند.

راه عملی: برای خاموش کردن، از منوی Start گزینه Shut Down را انتخاب کنید. - اتصال لوازم جانبی

پرسش: چگونه لوازم جانبی را متصل کنیم؟

پاسخ: با استفاده از پورتهای USB، لوازم مانند ماوس، کیبورد و پرینتر به کامپیوتر متصل میشوند.

راه عملی: پرینتر را با کابل USB وصل کنید و فایل خود را چاپ کنید. - استفاده از مرورگر

پرسش: چگونه از مرورگر استفاده کنیم؟

پاسخ: مرورگرهایی مانند کروم یا فایرفاکس ابزارهایی برای دسترسی به اینترنت هستند.

راه عملی: کروم را باز کرده و آدرس www.google.com را تایپ کنید. - مدیریت برنامهها

پرسش: چگونه نرمافزار نصب یا حذف کنیم؟

پاسخ: نرمافزارها از طریق بخش “Apps” یا “Control Panel” مدیریت میشوند.

راه عملی: وارد Control Panel شوید، یک برنامه انتخاب و آن را حذف کنید. - آنتیویروس و بهروزرسانی سیستم

پرسش: چرا آنتیویروس مهم است؟

پاسخ: آنتیویروس از سیستم در برابر ویروسها و بدافزارها محافظت میکند.

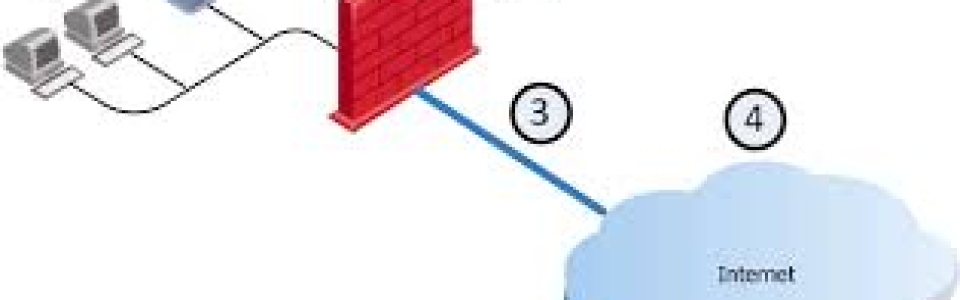

راه عملی: یک نرمافزار آنتیویروس نصب کرده و سیستم را اسکن کنید. - مفهوم فایروال

پرسش: فایروال چیست؟

پاسخ: فایروال از دسترسی غیرمجاز به کامپیوتر جلوگیری میکند.

راه عملی: وارد تنظیمات ویندوز شوید و فایروال را فعال کنید. - بکاپ گرفتن از اطلاعات

پرسش: چگونه از اطلاعات بکاپ بگیریم؟

پاسخ: با استفاده از ابزارهایی مانند هارد اکسترنال یا سرویسهای ابری.

راه عملی: فایلهای مهم خود را در گوگل درایو آپلود کنید. - عیبیابی اولیه

پرسش: چگونه مشکلات ساده را حل کنیم؟

پاسخ: بررسی اتصالات، ریاستارت کردن، یا بستن برنامههای معیوب.

راه عملی: اگر سیستم هنگ کرد، دکمه Power را نگه دارید و دوباره روشن کنید. - حافظههای خارجی

پرسش: حافظههای خارجی چه کاربردی دارند؟

پاسخ: برای انتقال یا ذخیره دادهها از فلش مموری یا هارد اکسترنال استفاده میشود.

راه عملی: فایل را روی فلش ذخیره و در کامپیوتر دیگری باز کنید. - مشکلات سختافزاری رایج

پرسش: چگونه مشکلات سختافزاری را تشخیص دهیم؟

پاسخ: عدم روشن شدن سیستم ممکن است به پاور مربوط باشد؛ عدم شناسایی دستگاه به درایور.

راه عملی: اگر ماوس کار نمیکند، پورت USB را تغییر دهید یا درایور را بررسی کنید.

در سال ۲۰۲۵، شرکتهای اینتل و AMD پردازندههای جدیدی را معرفی کردهاند که عملکرد و کارایی سیستمها را بهبود میبخشند. در ادامه، به معرفی برخی از این پردازندهها و سیستمهای پیشنهادی میپردازیم.

پردازندههای جدید

اینتل:

- سری Core Ultra 200: اینتل در نمایشگاه CES 2025 از پردازندههای سری Core Ultra 200 رونمایی کرد که برای لپتاپها و سیستمهای رومیزی طراحی شدهاند. این پردازندهها با معماری بهبودیافته و مصرف انرژی کمتر، عملکرد بهتری را ارائه میدهند.

AMD:

- Ryzen 9 9955HX3D: در CES 2025، AMD از پردازنده Ryzen 9 9955HX3D رونمایی کرد که با ۱۶ هسته و ۳۲ رشته پردازشی، فرکانس ۵.۴ گیگاهرتز و ۱۴۴ مگابایت کش، عملکرد بالایی را ارائه میدهد.

سیستم پیشنهادی برای برنامهنویسی و هوش مصنوعی -بدون GPU-:

۱. سیستم اقتصادی:

- پردازنده: Intel Core i5-13500 -هستههای قدرتمند با عملکرد بالا برای پردازش چند رشتهای-.

- رم: 16 گیگابایت DDR5 -پاسخگویی سریعتر به عملیات و مدیریت چندین برنامه-.

- حافظه ذخیرهسازی: 512 گیگابایت SSD NVMe -سرعت بالا برای بوت و ذخیره پروژهها-.

- مادربرد: MSI Pro B760M-A WIFI -دارای پشتیبانی از رم DDR5 و اتصالات پیشرفته-.

- منبع تغذیه: ۶۰۰ وات -برای اطمینان از پایداری سیستم-.

کاربرد: مناسب برای کارهای روزمره برنامهنویسی و اجرای پروژههای سبک هوش مصنوعی.

۲. سیستم میانرده:

- پردازنده: AMD Ryzen 7 7700 -۸ هسته و ۱۶ رشته برای پردازشهای چندگانه-.

- رم: 32 گیگابایت DDR5 -اجرای همزمان پروژههای سنگین و ماشینهای مجازی-.

- حافظه ذخیرهسازی: 1 ترابایت SSD NVMe -فضای کافی برای دیتاستها و پروژهها-.

- مادربرد: ASUS TUF Gaming B650-PLUS -مجهز به پشتیبانی از سختافزارهای جدید-.

- منبع تغذیه: ۷۵۰ وات -برای پایداری سیستم و امکان ارتقا-.

کاربرد: مناسب برای برنامهنویسی حرفهای، مدلسازی و آموزش الگوریتمهای متوسط هوش مصنوعی.

۳. سیستم حرفهای:

- پردازنده: AMD Ryzen 9 7950X -۱۶ هسته و ۳۲ رشته با عملکرد فوقالعاده برای مدلسازی پیشرفته-.

- رم: 64 گیگابایت DDR5 -مدیریت همزمان چندین پروژه حجیم و ماشینهای مجازی-.

- حافظه ذخیرهسازی: 2 ترابایت SSD NVMe -سرعت و فضای کافی برای دیتاستهای حجیم-.

- مادربرد: Gigabyte X670E Aorus Master -با امکانات پیشرفته برای بهرهگیری از سختافزارهای مدرن-.

- منبع تغذیه: ۸۵۰ وات -قابلیت ارتقا و استفاده طولانیمدت-.

کاربرد: ایدهآل برای برنامهنویسان حرفهای و متخصصین هوش مصنوعی که با مدلهای بزرگ و پردازشهای سنگین کار میکنند.

نکات مهم:

- اگر پروژههای هوش مصنوعی شما به GPU نیاز دارد، میتوانید در آینده کارت گرافیکهای NVIDIA RTX سری ۳۰ یا ۴۰ را اضافه کنید.

- استفاده از سیستمهای SSD NVMe در تمامی پیشنهادها برای سرعت بوت و بارگذاری بسیار اهمیت دارد.

- پردازندههای Ryzen با توجه به هستههای بیشتر، برای پروژههای پردازشی و مدلسازی مناسبتر هستند.

- حتماً به تهویه مناسب کیس و استفاده از قطعات با کیفیت برای اطمینان از طول عمر سیستم توجه کنید.

آموزشگاه هوش مصنوعی

مبانی و مفاهیم اولیه کار با کامپیوتر و سختافزار

1- مفاهیم پایه کامپیوتر:

- تعریف کامپیوتر و انواع آن (دسکتاپ، لپتاپ، تبلت).

- شناخت اجزای اصلی کامپیوتر (ورودی، پردازش، خروجی، ذخیرهسازی).

- مفهوم سیستمعامل (ویندوز، لینوکس، مک).

- آشنایی با نرمافزارها و تفاوت آنها با سختافزار.

- معرفی مفهوم فایل و پوشه و نحوه مدیریت آنها.

- تفاوت بین حافظه RAM و حافظه ذخیرهسازی (HDD/SSD)

- مفهوم بوت شدن کامپیوتر و نقش BIOS/UEFI

- معرفی انواع دادهها (متنی، صوتی، تصویری).

- مفهوم درایور سختافزاری و اهمیت نصب آن.

- آشنایی با پورتهای ارتباطیUSB، HDMI، Ethernet

2- سختافزارهای اصلی کامپیوتر:

- معرفی پردازنده (CPU) و وظیفه آن.

- آشنایی با کارت گرافیک (GPU) و نقش آن در پردازش گرافیکی.

- مفهوم مادربرد (Motherboard) و ارتباط اجزا در آن.

- آشنایی با انواع حافظههای ذخیرهسازیHDD، SSD، NVMe

- تفاوت میان مانیتور LCD و LED

- کارکرد منبع تغذیه (Power Supply) و نحوه تأمین برق اجزا.

- معرفی ابزارهای ورودی: ماوس، کیبورد، اسکنر.

- آشنایی با ابزارهای خروجی: پرینتر، اسپیکر.

- آشنایی با قطعات خنککننده (فن، هیتسینک).

- معرفی شبکههای بیسیم و کارتهای شبکه (Wi-Fi Adapter)

3- اصول اولیه کار با کامپیوتر:

- روشن و خاموش کردن صحیح کامپیوتر.

- اتصال و نصب لوازم جانبی (پرینتر، ماوس، کیبورد).

- نحوه استفاده از مرورگر برای دسترسی به اینترنت.

- مدیریت برنامهها (نصب و حذف نرمافزار).

- اهمیت آنتیویروس و بهروزرسانی سیستم.

- مفهوم فایروال و نقش آن در امنیت کامپیوتر.

- بکاپ گرفتن از اطلاعات شخصی.

- اصول عیبیابی اولیه (ریاستارت، بررسی اتصالات).

- نحوه استفاده از حافظههای خارجی (فلش مموری، هارد اکسترنال).

- شناسایی مشکلات سختافزاری رایج (مانند روشن نشدن سیستم یا عدم شناسایی دستگاهها).

PowerPoint Review and Practice

۳۰ مورد برای پاورپوینت:

- ایجاد یک اسلاید جدید

انتخاب تب Home –> New Slide –> انتخاب نوع اسلاید. - افزودن متن به اسلاید

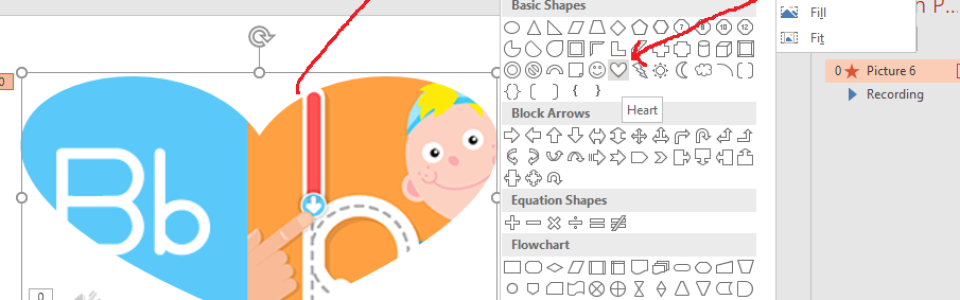

کلیک روی جعبه متنی و تایپ متن. - افزودن تصویر به اسلاید

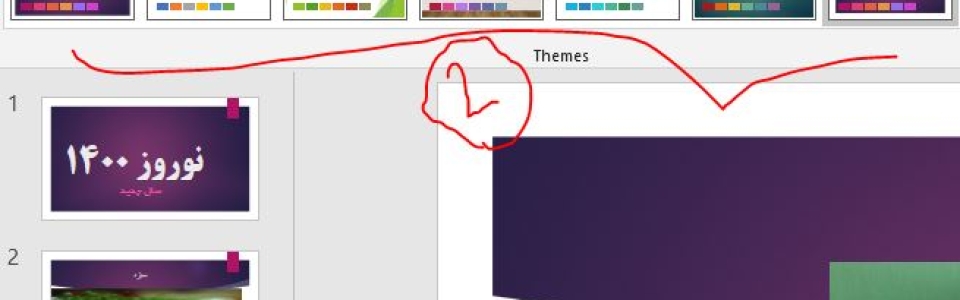

انتخاب تب Insert –> Pictures –> انتخاب تصویر از محل ذخیره. - استفاده از قالب آماده (Theme)

انتخاب تب Design –> انتخاب قالب (Theme) از گالری. - انتخاب طرحبندی (Layout) اسلاید

انتخاب اسلاید –> انتخاب تب Home –> Layout –> انتخاب طرحبندی. - انتقال بین اسلایدها با استفاده از انیمیشنها

انتخاب اسلاید –> انتخاب تب Transitions –> انتخاب نوع انتقال. - افزودن لینکهای اینترنتی به اسلاید

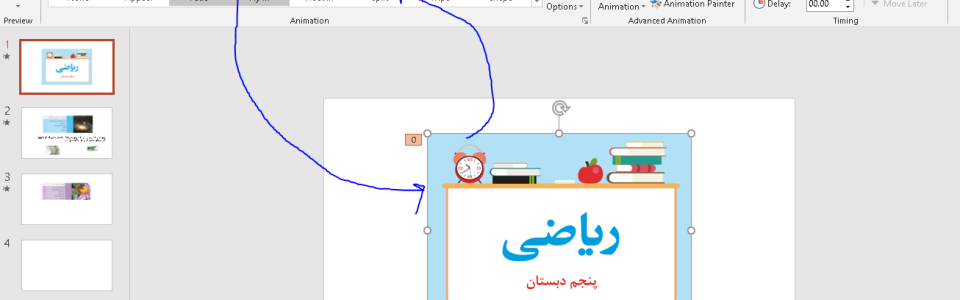

انتخاب متن یا تصویر –> راستکلیک –> Hyperlink –> وارد کردن URL. - استفاده از انیمیشن برای اشیاء

انتخاب شیء –> انتخاب تب Animations –> انتخاب نوع انیمیشن. - ایجاد نمودار در اسلاید

انتخاب تب Insert –> Chart –> انتخاب نوع نمودار و وارد کردن دادهها. - ایجاد جعبه متن در اسلاید

انتخاب تب Insert –> Text Box –> تایپ متن در جعبه. - افزودن ویدیو به اسلاید

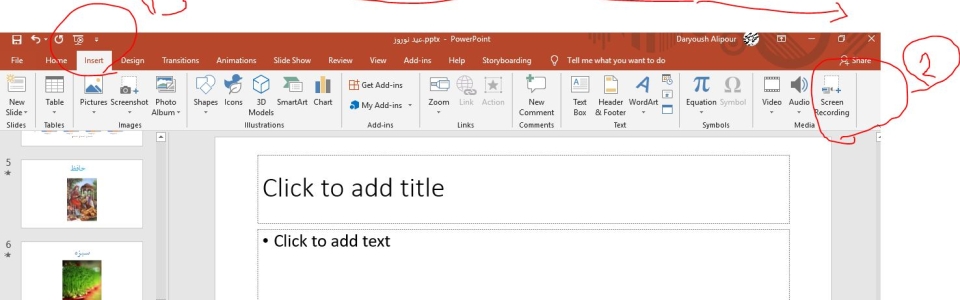

انتخاب تب Insert –> Video –> انتخاب ویدیو از فایل یا آنلاین. - استفاده از SmartArt در پاورپوینت

انتخاب تب Insert –> SmartArt –> انتخاب نوع SmartArt.

- تنظیم زمان برای هر اسلاید

انتخاب تب Transitions –> مدت زمان برای نمایش اسلاید را وارد کنید. - ایجاد افکت برای اشیاء هنگام ورود به اسلاید

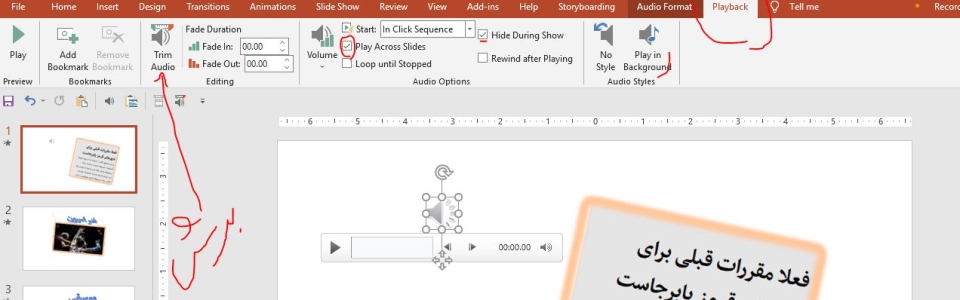

انتخاب شیء –> انتخاب تب Animations –> انتخاب نوع انیمیشن ورود. - افزودن صدا به اسلاید

انتخاب تب Insert –> Audio –> انتخاب منبع صدا. - تنظیم یک اسلاید به عنوان اسلاید عنوان

انتخاب اسلاید اول –> انتخاب تب Home –> Layout –> انتخاب Title Slide. - ایجاد فهرست مطالب در پاورپوینت

استفاده از جعبه متن و Bullet Points برای ایجاد فهرست. - استفاده از قابلیت Slide Master برای تنظیمات کلی

انتخاب تب View –> Slide Master –> ویرایش قالبهای اسلاید.

- ایجاد جدول در اسلاید

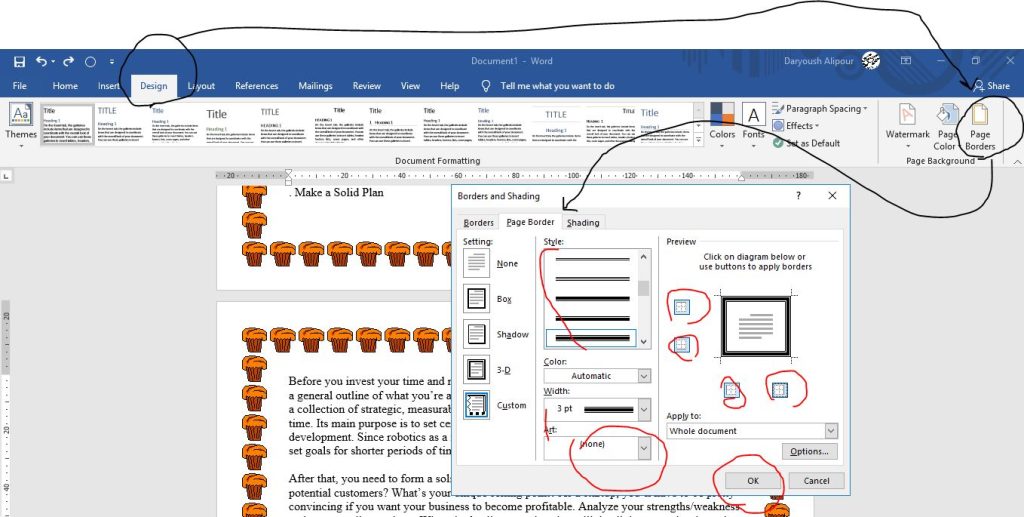

انتخاب تب Insert –> Table –> انتخاب تعداد ردیف و ستون. - افزودن حاشیه به اسلاید

انتخاب تب Design –> Page Borders –> انتخاب حاشیه برای اسلاید. - نمایش اسلاید به صورت Full Screen

فشار دادن F5 یا انتخاب تب Slide Show –> From Beginning. - افزودن انیمیشن به متن

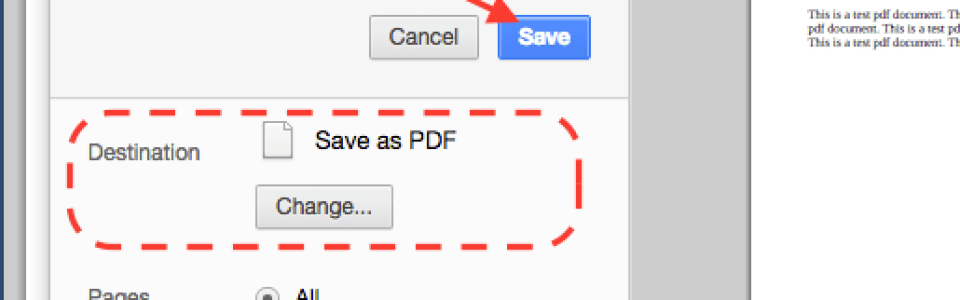

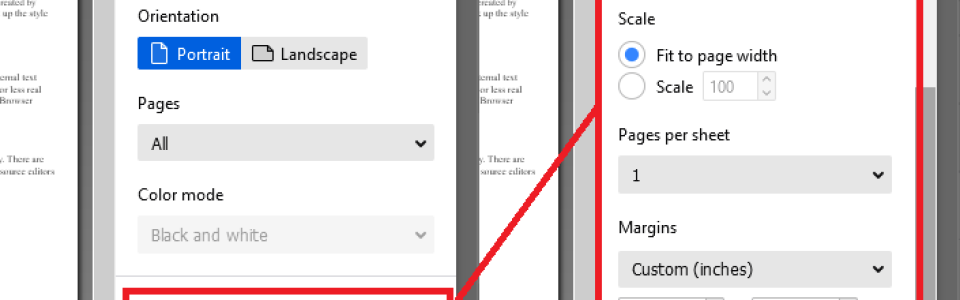

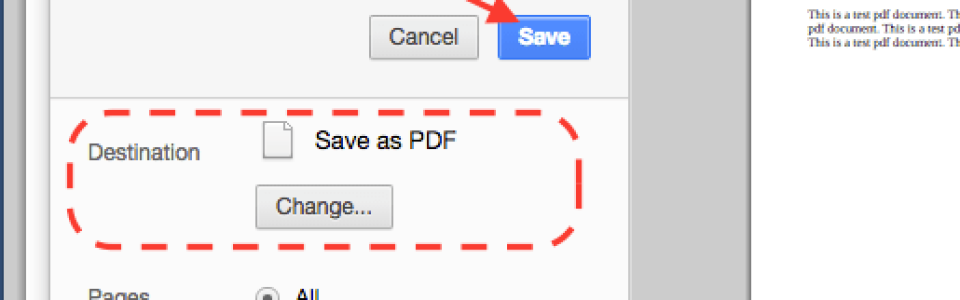

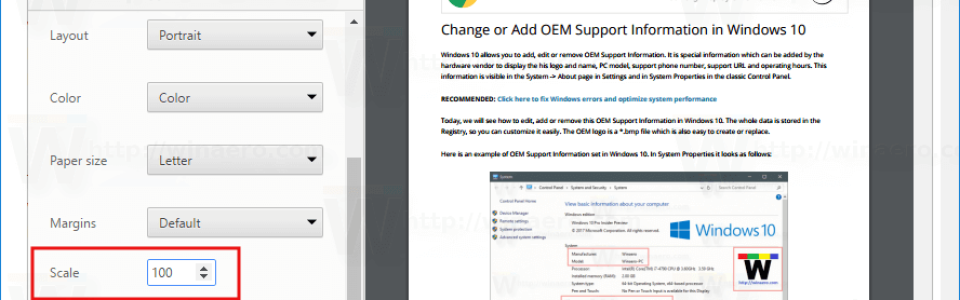

انتخاب متن –> انتخاب تب Animations –> انتخاب نوع انیمیشن. - ذخیره پاورپوینت به صورت PDF

انتخاب تب File –> Save As –> انتخاب PDF. - استفاده از قابلیت Slide Show برای تمرین

انتخاب تب Slide Show –> Rehearse Timings. - افزودن پاورقی به اسلاید

انتخاب تب Insert –> Footer –> وارد کردن متن پاورقی. - پیدا کردن اسلاید خاص با استفاده از جستجو

فشار دادن Ctrl+F و تایپ عنوان اسلاید برای جستجو. - تنظیمات چاپ برای اسلاید

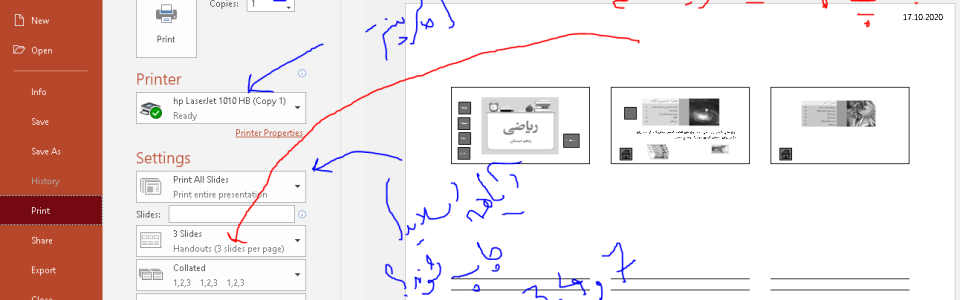

انتخاب تب File –> Print –> تنظیمات چاپ (اسلایدهای تک یا چندگانه). - استفاده از لایهها برای ترتیب اشیاء

راستکلیک روی شیء –> Send to Back یا Bring to Front. - درج کد QR به اسلاید

انتخاب تب Insert –> QR Code –> وارد کردن URL یا اطلاعات. - ایجاد لینک به اسلاید دیگر در پاورپوینت

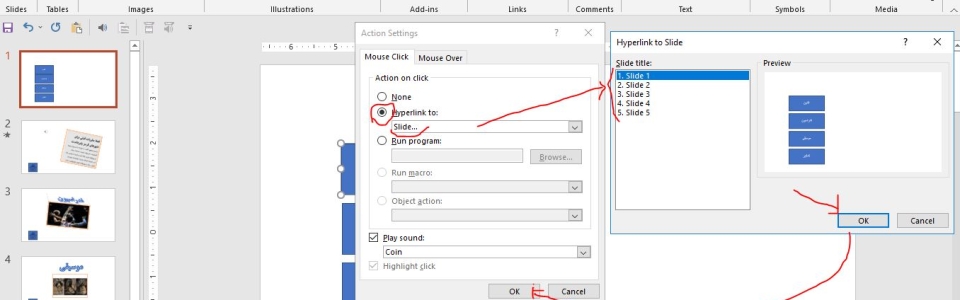

انتخاب متن یا تصویر –> راستکلیک –> Hyperlink –> انتخاب اسلاید مقصد.

آموزشگاه هوش مصنوعی

مایکروسافت پاورپوینت:

- ایجاد یک فایل پاورپوینت جدید.

- ذخیره فایل با فرمت .pptx یا .pdf.

- افزودن اسلاید جدید با قالببندی مختلف.

- تغییر پسزمینه اسلایدها.

- اضافه کردن متن و تنظیم فونت (نوع، اندازه، رنگ).

- اعمال قالبهای پیشفرض (Themes).

- تغییر ترتیب اسلایدها.

- استفاده از Slide Layouts برای تنظیم متن و تصاویر.

- اضافه کردن تصاویر به اسلاید.

- افزودن ویدیو به اسلاید.

- درج لینک (Hyperlink) به یک اسلاید دیگر یا وبسایت.

- استفاده از Bullet و Number برای لیستها.

- تنظیم افکتهای ساده برای انتقال بین اسلایدها (Transitions).

- استفاده از جدول برای سازماندهی دادهها.

- ایجاد و تنظیم نمودار (Chart).

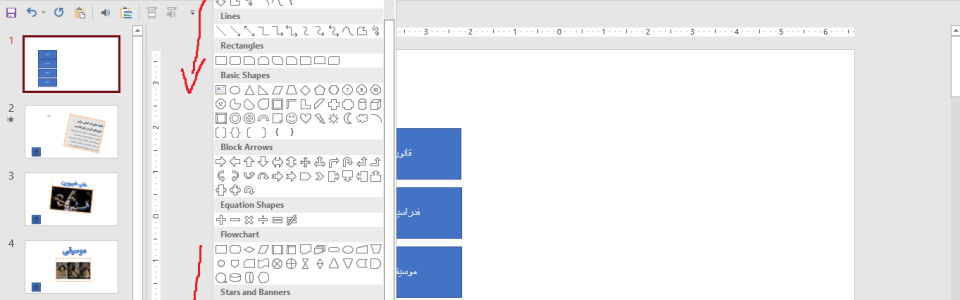

- استفاده از Shapes برای طراحی ساده.

- تغییر رنگ و تنظیمات خطوط (Outline).

- کار با ابزار Align برای تنظیم دقیق المانها.

- افزودن آیکونها و نمادها (Icons).

- تغییر اندازه و مکان اشیاء در اسلایدها.

متوسط

- استفاده از SmartArt برای نمایش اطلاعات بصری.

- اضافه کردن افکتهای حرکتی به متن یا تصاویر (Animations).

- تنظیم زمانبندی انیمیشنها (Animation Pane).

- ضبط صدای گوینده برای اسلایدها.

- اضافه کردن Header و Footer به اسلایدها.

- استفاده از ابزار Master Slide برای تنظیم قالب کلی.

- طراحی اسلایدهای چندرسانهای (ترکیب متن، تصویر، و صدا).

- استفاده از ابزار Format Painter برای کپی تنظیمات.

- افزودن یادداشت (Notes) برای هر اسلاید.

- تنظیم اندازه اسلاید (16:9 یا 4:3).

Excel Hands-On Exercise and Review

تمرین عملی اکسل

تمرین: مدیریت فروش محصولات

فرض کنید یک فروشگاه سه محصول مختلف میفروشد و دادههای فروش ماهانهی آنها به شرح زیر است:

| Month | kala1 (units) | kala2 (units) | kala3 (units) |
| --- | --- | --- | --- |
| January | 120 | 80 | 150 |
| February | 100 | 90 | 200 |
| March | 140 | 110 | 170 |
| April | 130 | 100 | 180 |

اهداف تمرین:

- محاسبه مجموع فروش هر محصول.

- محاسبه میانگین فروش ماهانه برای هر محصول.

- شناسایی پرفروشترین محصول هر ماه.

- ایجاد نمودار فروش ماهانه برای هر محصول.

گام ۱: ورود دادهها

ابتدا دادههای جدول بالا را در اکسل وارد کنید.

Step 2: Total sales per product

- In cell B6, enter the following formula:

=SUM(B2:B5)

This formula totals the sales of kala1. Copy it across to C6 and D6 so that the totals for kala2 and kala3 are calculated as well. (Copying it downward instead would shift the range to B3:B6 and give the wrong result.)

Step 3: Average monthly sales

- In cell B7, enter the following formula:

=AVERAGE(B2:B5)

This formula averages the sales of kala1. Copy it across to C7 and D7 to get the averages for kala2 and kala3.

Step 4: Best-selling product of each month

- In cell E2, enter the following formula:

=IF(MAX(B2:D2)=B2,"kala1",IF(MAX(B2:D2)=C2,"kala2","kala3"))

This formula checks which product sold the most in January. Copy it down to E3:E5 so that it is calculated for the other months too.

Step 5: Monthly sales chart

- Select the range A1:D5 (the months and the units sold, without the total and average rows).

- Go to Insert and choose a Column Chart or Line Chart.

- Edit the chart as you like (add a title, colors, …).
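For readers who want to check their spreadsheet results, the same calculations can be sketched in Python. This is only a cross-check under the assumption that the table values are typed in as shown; the variable names are illustrative and not part of the exercise.

```python
# Monthly unit sales copied from the table above.
sales = {
    "January":  {"kala1": 120, "kala2": 80,  "kala3": 150},
    "February": {"kala1": 100, "kala2": 90,  "kala3": 200},
    "March":    {"kala1": 140, "kala2": 110, "kala3": 170},
    "April":    {"kala1": 130, "kala2": 100, "kala3": 180},
}
products = ["kala1", "kala2", "kala3"]

# Equivalent of SUM over each product column.
totals = {p: sum(row[p] for row in sales.values()) for p in products}

# Equivalent of AVERAGE over each product column.
averages = {p: totals[p] / len(sales) for p in products}

# Equivalent of the nested IF/MAX formula: best seller per month.
# max() returns the first product on a tie, as the nested IF does.
best_seller = {
    month: max(products, key=lambda p: row[p])
    for month, row in sales.items()
}

print(totals)
print(averages)
print(best_seller)
```

With this data every month has the same best seller, so the nested IF in Step 4 should show the same product name in all four rows.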


چند تمرین دیگر برای تقویت مهارت کار با اکسل

تمرین ۱: مدیریت هزینهها و درآمدها

دادهها:

| Month | Cost (Toman) | Income (Toman) |
| --- | --- | --- |
| January | 5000000 | 7000000 |
| February | 4000000 | 6000000 |
| March | 4500000 | 7500000 |
| April | 6000000 | 8000000 |

اهداف تمرین:

- محاسبه سود خالص هر ماه (درآمد – هزینه) در ستون جدید.

- شناسایی ماهی که بیشترین سود خالص را داشته است.

- محاسبه مجموع هزینهها و درآمدها در پایان جدول.

- ایجاد نمودار خطی برای نمایش سود خالص ماهانه.

گامها:

- در ستون D، فرمول زیر را وارد کنید:

=C2-B2

و آن را برای سایر ماهها کپی کنید.

- To find the month with the highest net profit, combine the INDEX, MATCH, and MAX functions:

=INDEX(A2:A5,MATCH(MAX(D2:D5),D2:D5,0))

- مجموع هزینهها و درآمدها را با تابع SUM در زیر ستونها محاسبه کنید.

- نمودار خطی برای ستونهای A و D ایجاد کنید.
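The INDEX/MATCH/MAX lookup used above can be mirrored in a few lines of Python. The sketch assumes the four rows of the table in the same order; the list names are illustrative.

```python
months = ["January", "February", "March", "April"]
costs = [5_000_000, 4_000_000, 4_500_000, 6_000_000]
incomes = [7_000_000, 6_000_000, 7_500_000, 8_000_000]

# Column D: net profit = income - cost (Excel: =C2-B2).
profits = [income - cost for cost, income in zip(costs, incomes)]

# INDEX/MATCH/MAX: list.index returns the first position of the maximum,
# just as MATCH(..., 0) returns the first exact match.
best_month = months[profits.index(max(profits))]

# Totals below the columns (Excel: =SUM(B2:B5) and =SUM(C2:C5)).
total_cost = sum(costs)
total_income = sum(incomes)

print(profits)
print(best_month, total_cost, total_income)
```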

تمرین ۲: نمرات دانشآموزان

دادهها:

| Student | Math | Science | English | Average |
| --- | --- | --- | --- | --- |
| Ali | 18 | 15 | 17 | |
| Reza | 12 | 14 | 16 | |
| Sara | 20 | 19 | 18 | |
| Maryam | 14 | 13 | 15 | |

اهداف تمرین:

- محاسبه معدل هر دانشآموز (میانگین نمرات در ستون معدل).

- شناسایی دانشآموزی که بالاترین معدل را دارد.

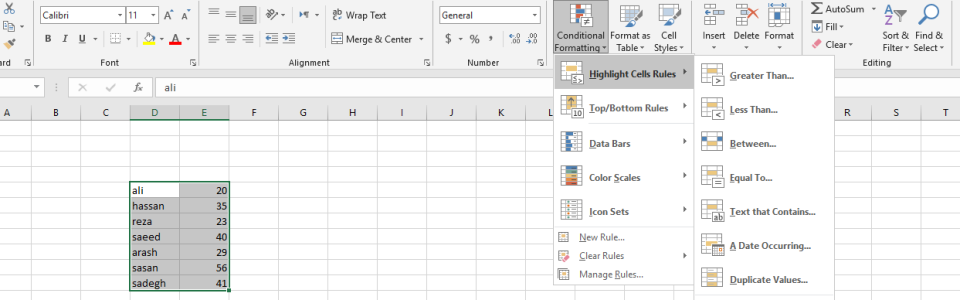

- هایلایت کردن نمرات زیر 12 با استفاده از Conditional Formatting

گامها:

- در ستون E، فرمول زیر را وارد کنید:

=AVERAGE(B2:D2)

و آن را برای سایر دانشآموزان کپی کنید.

- برای شناسایی بالاترین معدل:

=INDEX(A2:A5,MATCH(MAX(E2:E5),E2:E5,0))

- از منوی Conditional Formatting گزینه Highlight Cell Rules را انتخاب کرده و نمرات زیر 12 را قرمز کنید.
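As a cross-check for this exercise, the averages, the top student, and the cells that the conditional-formatting rule would highlight can be computed as below. The student names are transliterated from the table, and the threshold name is an illustrative assumption.

```python
# Scores copied from the table (names transliterated).
grades = {
    "Ali":    {"Math": 18, "Science": 15, "English": 17},
    "Reza":   {"Math": 12, "Science": 14, "English": 16},
    "Sara":   {"Math": 20, "Science": 19, "English": 18},
    "Maryam": {"Math": 14, "Science": 13, "English": 15},
}

# Column E: =AVERAGE(B2:D2) for each student.
averages = {name: sum(s.values()) / len(s) for name, s in grades.items()}

# INDEX/MATCH/MAX: the student with the highest average.
top_student = max(averages, key=averages.get)

# Cells a "less than 12" highlight rule would color.
THRESHOLD = 12
below = [
    (name, subject, score)
    for name, s in grades.items()
    for subject, score in s.items()
    if score < THRESHOLD
]

print(averages)
print(top_student)
print(below)
```

Note that with the sample data no score is strictly below 12 (Reza's 12 in Math does not count), so nothing is highlighted until a lower score is entered.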

تمرین ۳: مدیریت انبار

دادهها:

| Product | Quantity in stock | Unit price (Toman) | Total value (Toman) |
| --- | --- | --- | --- |
| kala1 | 50 | 20000 | |
| kala2 | 30 | 50000 | |
| kala3 | 100 | 15000 | |
| Product 4 | 20 | 70000 | |

اهداف تمرین:

- محاسبه ارزش کل موجودی برای هر محصول (تعداد × قیمت واحد).

- محاسبه مجموع ارزش کل موجودی انبار.

- شناسایی محصولی که بیشترین ارزش را دارد.

گامها:

- در ستون D، فرمول زیر را وارد کنید:

=B2*C2

و آن را برای سایر ردیفها کپی کنید.

- برای مجموع ارزش کل:

=SUM(D2:D5)

- برای شناسایی محصول با بیشترین ارزش:

=INDEX(A2:A5,MATCH(MAX(D2:D5),D2:D5,0))
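The inventory calculation is small enough to verify by hand or with a short script. The sketch below assumes the four rows of the table; it also lists every product that reaches the maximum value, which shows a detail the INDEX/MATCH formula hides.

```python
# (product, quantity in stock, unit price in Toman) from the table.
inventory = [
    ("kala1", 50, 20_000),
    ("kala2", 30, 50_000),
    ("kala3", 100, 15_000),
    ("Product 4", 20, 70_000),
]

# Column D: =B2*C2 for each row.
values = {name: qty * price for name, qty, price in inventory}

# =SUM(D2:D5)
total_value = sum(values.values())

# All products that reach the maximum value, in table order.
top_value = max(values.values())
top_products = [name for name, v in values.items() if v == top_value]

print(values)
print(total_value)
print(top_products)
```

Two products tie for the highest value in this data. MATCH with match type 0 returns the first match, so the Excel formula reports only the first of them.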

تمرین ۴: تحلیل دادههای فروش

دادهها:

| Month | Product A | Product B | Product C |
| --- | --- | --- | --- |
| January | 100 | 150 | 200 |
| February | 120 | 130 | 180 |
| March | 110 | 140 | 220 |
| April | 130 | 160 | 210 |

اهداف تمرین:

- محاسبه مجموع فروش هر ماه.

- محاسبه درصد سهم هر محصول از کل فروش هر ماه.

- ایجاد نمودار دایرهای برای نمایش درصد سهم محصولات در ماه ژانویه.

گامها:

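- In column E, calculate each month's total sales (column D already holds Product C, so placing the total there would create a circular reference):

=SUM(B2:D2)

- In the new columns F, G, and H, calculate each product's percentage share:

=B2/$E2*100

Apply this formula to the other columns as well; the $ keeps the reference to column E fixed when the formula is copied across.

- For the pie chart, select the January data and use Insert > Pie Chart.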
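The share calculation can be sanity-checked with the sketch below. It assumes the table values above and rounds to two decimals purely for display; the names are illustrative.

```python
# Units sold per month for Products A, B, C (from the table).
sales = {
    "January":  [100, 150, 200],
    "February": [120, 130, 180],
    "March":    [110, 140, 220],
    "April":    [130, 160, 210],
}

# Row total (=SUM(B2:D2)) and percentage share (=B2/$E2*100).
totals = {month: sum(units) for month, units in sales.items()}
shares = {
    month: [round(u / totals[month] * 100, 2) for u in units]
    for month, units in sales.items()
}

print(totals)
print(shares["January"])
```

The three shares of each month should add up to 100 apart from rounding, which is a quick way to spot a wrong cell reference.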

تمرین ۵: تحلیل زمانی دادهها

دادهها:

| Date | Sales (Toman) |
| --- | --- |
| 2025-01-01 | 5000000 |
| 2025-01-02 | 6000000 |
| 2025-01-03 | 5500000 |
| 2025-01-04 | 7000000 |

اهداف تمرین:

- محاسبه مجموع فروش.

- محاسبه میانگین فروش روزانه.

- ایجاد نمودار خطی برای نمایش روند فروش.

گامها:

- مجموع فروش را با تابع SUM محاسبه کنید.

- میانگین فروش را با تابع AVERAGE به دست آورید.

- نمودار خطی برای ستونهای A و B ایجاد کنید.

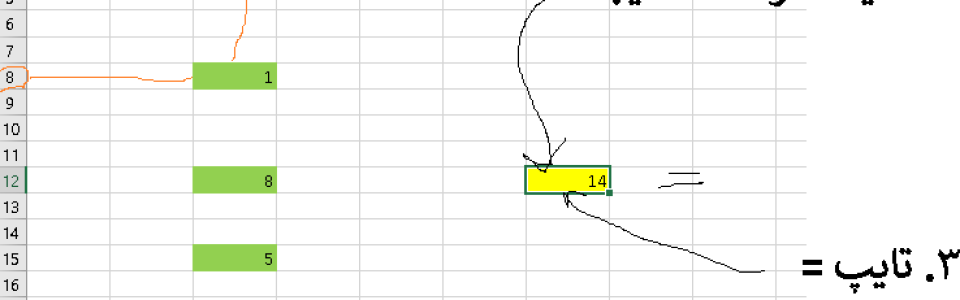

- ایجاد یک شیت جدید

کلیک روی تب + در پایین صفحه یا فشار دادن Shift+F11. - وارد کردن داده به یک سلول

انتخاب سلول –> تایپ داده مورد نظر. - انتخاب چندین سلول

کلیک و کشیدن موس یا استفاده از Shift + جهتنما. - کپی کردن دادهها

انتخاب سلولها –> Ctrl+C –> انتخاب محل جدید –> Ctrl+V. - انتقال دادهها به محل جدید

انتخاب سلولها –> Ctrl+X –> انتخاب محل جدید –> Ctrl+V. - حذف دادهها از یک سلول

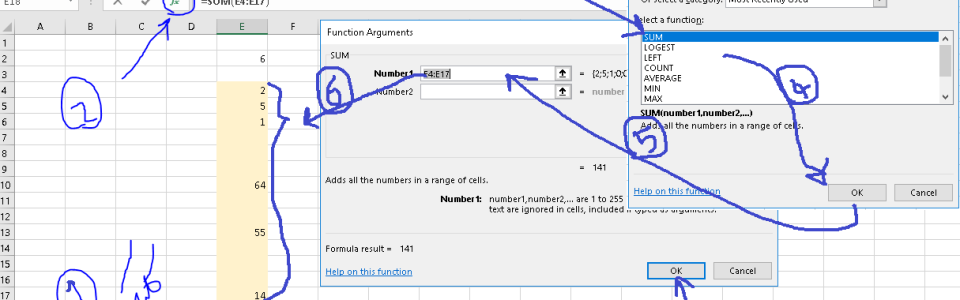

انتخاب سلول –> Delete. - استفاده از فرمول جمع (SUM)

انتخاب سلول –> تایپ =SUM(A1:A5) –> Enter. - استفاده از فرمول میانگین (AVERAGE)

انتخاب سلول –> تایپ =AVERAGE(A1:A5) –> Enter. - استفاده از فرمول شمارش (COUNT)

انتخاب سلول –> تایپ =COUNT(A1:A5) –> Enter. - ایجاد یک جدول (Table)

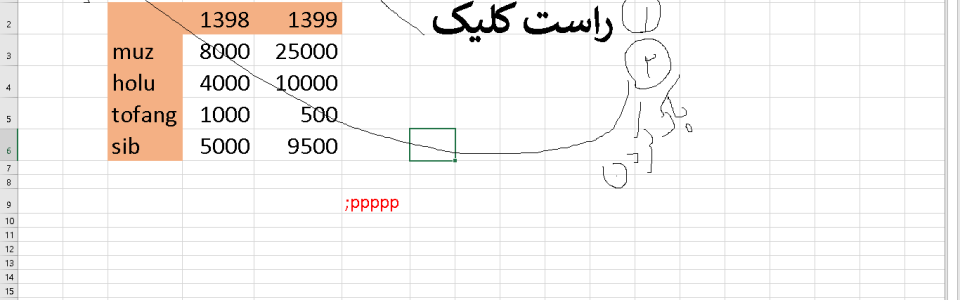

انتخاب دادهها –> انتخاب تب Insert –> Table. - ایجاد نمودار (Chart)

انتخاب دادهها –> انتخاب تب Insert –> انتخاب نوع نمودار. - افزودن فیلتر به دادهها

انتخاب دادهها –> انتخاب تب Data –> Filter. - مرتبسازی دادهها به ترتیب صعودی یا نزولی

انتخاب دادهها –> انتخاب تب Data –> Sort –> انتخاب گزینه مرتبسازی. - یافتن دادهها با استفاده از جستجو

فشار دادن Ctrl+F –> تایپ کلمه یا عدد مورد نظر. - فرمت کردن سلولها به عنوان درصد

انتخاب سلول –> راستکلیک –> Format Cells –> Percentage. - ایجاد یک سلول با تاریخ جاری

انتخاب سلول –> تایپ =TODAY() –> Enter. - ایجاد یک سلول با ساعت جاری

انتخاب سلول –> تایپ =NOW() –> Enter. - اعمال فرمت پولی (Currency) به سلولها

انتخاب سلول –> راستکلیک –> Format Cells –> Currency. - استفاده از شرطی (Conditional Formatting)

انتخاب دادهها –> انتخاب تب Home –> Conditional Formatting –> انتخاب نوع فرمت. - قرار دادن رنگ پسزمینه به سلولها

انتخاب سلولها –> راستکلیک –> Format Cells –> Fill –> انتخاب رنگ. - چرخاندن متن در یک سلول

انتخاب سلول –> راستکلیک –> Format Cells –> Alignment –> انتخاب Rotate Text. - محاسبه بیشترین مقدار (MAX)

انتخاب سلول –> تایپ =MAX(A1:A5) –> Enter. - فرمول جمع

=A2+A3

فرمول معدل:

=(A2+A3+A4)/3

- محاسبه کمترین مقدار (MIN)

انتخاب سلول –> تایپ =MIN(A1:A5) –> Enter. - استفاده از توابع IF

انتخاب سلول –> تایپ =IF(A1>10, “Yes”, “No”) –> Enter. - مخفی کردن ستون یا ردیف

راستکلیک روی شماره ستون یا ردیف –> Hide. - افزودن حاشیه به سلولها

انتخاب سلولها –> راستکلیک –> Format Cells –> Border –> انتخاب نوع حاشیه. - ایجاد یک پیوند (Hyperlink)

انتخاب سلول –> راستکلیک –> Hyperlink –> وارد کردن URL. - ایجاد یک فیلد جستجو با استفاده از VLOOKUP

انتخاب سلول –> تایپ =VLOOKUP(A1, B1:C5, 2, FALSE) –> Enter. - استفاده از فرمول CONCATENATE برای ترکیب متن

انتخاب سلول –> تایپ =CONCATENATE(A1, ” “, B1) –> Enter. - درج یک کاما در فرمول برای تعداد هزارگان

انتخاب سلول –> راستکلیک –> Format Cells –> Number –> انتخاب Thousands Separator. - تغییر عرض ستونها

انتخاب ستونها –> راستکلیک –> Column Width –> وارد کردن اندازه جدید. - تغییر ارتفاع ردیفها

انتخاب ردیفها –> راستکلیک –> Row Height –> وارد کردن ارتفاع جدید. - قرار دادن شماره ردیفها در یک ستون

تایپ=ROW()در سلول اول و کشیدن آن به پایین. - ایجاد یک سلول با محاسبه مجموع (SUM) از سلولهای مختلف

انتخاب سلول –> تایپ=SUM(A1, B2, C3)–> Enter. - استفاده از تابع COUNTA برای شمارش تعداد سلولهای غیرخالی

انتخاب سلول –> تایپ=COUNTA(A1:A5)–> Enter. - انتخاب سلول با استفاده از Go To

فشار دادن Ctrl+G –> وارد کردن مرجع سلول (مثلا A1) –> Enter. - مخفی کردن شیتها

راستکلیک روی نام شیت –> Hide. - نمایش شیتهای مخفی شده

راستکلیک روی هر شیت –> Unhide –> انتخاب شیت مخفی. - محاسبه تعداد کاراکتر در یک سلول با استفاده از LEN

انتخاب سلول –> تایپ=LEN(A1)–> Enter. - ایجاد تاریخ و زمان در یک سلول

تایپ=NOW()در سلول –> Enter. - استفاده از TEXT برای تغییر فرمت تاریخ

تایپ=TEXT(A1, "mm/dd/yyyy")–> Enter. - جدا کردن متن در یک سلول با استفاده از TEXT TO COLUMNS

انتخاب سلول –> انتخاب تب Data –> Text to Columns –> انتخاب Delimited. - ساخت یک نمودار با دادههای انتخابی

انتخاب دادهها –> انتخاب تب Insert –> انتخاب نوع نمودار. - درج یک خط افقی در بین دادهها

انتخاب یک سلول –> تایپ=REPT("-", 50)–> Enter. - ترتیب دادهها به صورت صعودی یا نزولی

انتخاب دادهها –> انتخاب تب Data –> Sort –> انتخاب گزینه مورد نظر. - استفاده از تابع IF برای مقایسه مقادیر

تایپ=IF(A1>10, "Yes", "No")–> Enter. - برجسته کردن سلولهایی که شرایط خاصی دارند (Conditional Formatting)

انتخاب دادهها –> انتخاب تب Home –> Conditional Formatting –> انتخاب نوع شرط. - استفاده از فرمول SUBTOTAL برای محاسبات با فیلترها

انتخاب سلول –> تایپ=SUBTOTAL(9, A1:A5)–> Enter. - پیدا کردن اولین یا آخرین سلول در یک ستون با استفاده از INDEX

تایپ=INDEX(A1:A5, 1)برای اولین یا=INDEX(A1:A5, COUNTA(A1:A5))برای آخرین. - ایجاد یک فیلتر برای دادهها

انتخاب دادهها –> انتخاب تب Data –> Filter –> فعال کردن فیلتر.

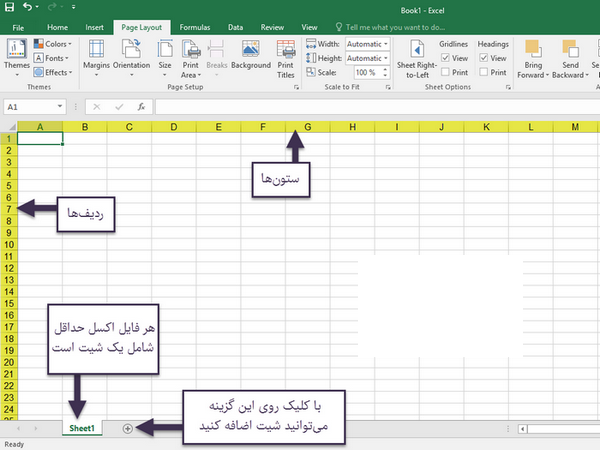

4- Word Processor: Word

Microsoft Word

مؤلفين:

داريوش عليپور، مينوش حيدري

آموزشگاه هوش مصنوعی

tirotir.ir

فهرست

1-1 آشنايی با تعريف واژه پرداز 9

1-2 آشنايي با اجراي برنامه Word.. 9

1-3 آشنايی با محيط اصلي Microsoft Word.. 9

1-3-3 نوارهاي ابزارToolbars. 10

1-3-3-1 نوار ابزار استاندارد. 10

1-3-5 محيط تايپ (Text Area). 12

1-3-6 نوار وضعيت (Status Bar). 12

1-3-7 نوارهاي پيمايش افقي(Horizontal) و عمودي(Vertical). 12

1-4 آشنايي با ايجاد سند جديد. 13

1-5 آشنايي با چگونگی باز نمودن سند موجود. 13

1-6 آشنايي با ذخيره کردن سند فعلي با فرمتهای گوناگون. 14

1-7 آشنايی با اصول ويرايش متن.. 14

1-7-1 آشنايي با چگونگی انتخاب متن (Highlighting Text ). 15

1-7-2 شناسايي اصول کپی، بريدن، چسباندن و حذف متن.. 16

1-7-7 رنگ زمينه قلم (Highlight). 17

1-7-8 رنگ قلم (Font Color). 17

1-7-9 آشنايي با استفاده از دستور برگشت… 17

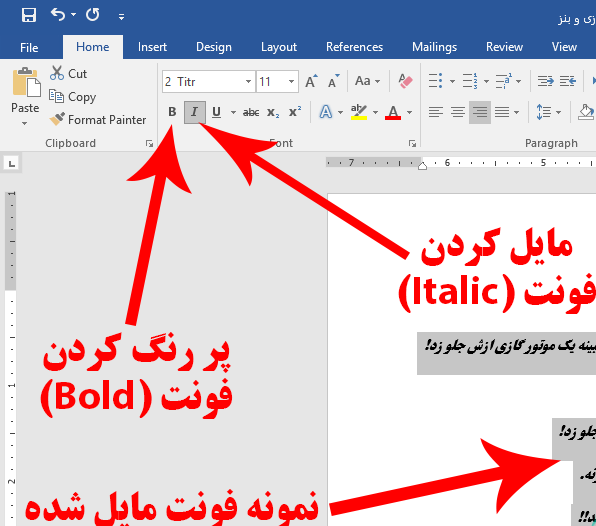

1-7-10 آشنايي با نحوه ضخيم و پررنگ کردن متن انتخاب شده Bold)). 17

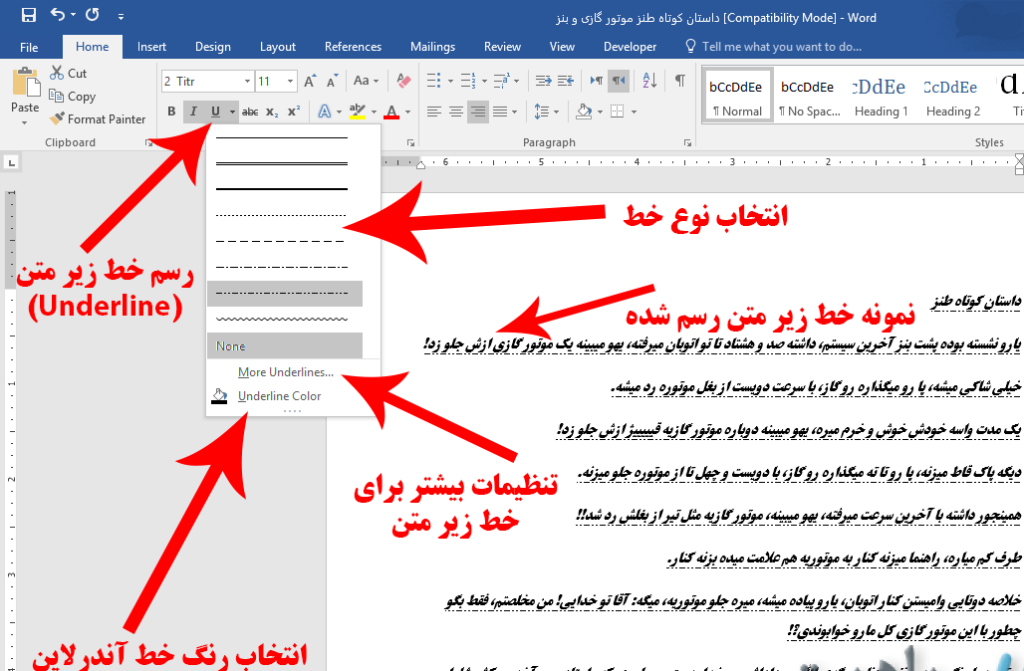

1-7-11 آشنايي با نحوه زير خط دار کردن متن انتخاب شده (Underline). 17

1-7-12 آشنايي با نحوه مايل و ايتاليك کردن متن انتخاب شده (Italic). 17

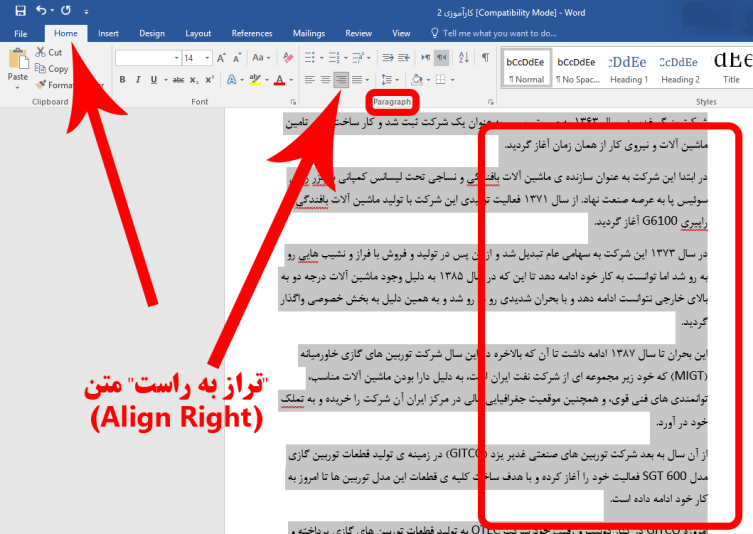

1-7-13 آشنايي با ابزار چيدمان.. 18

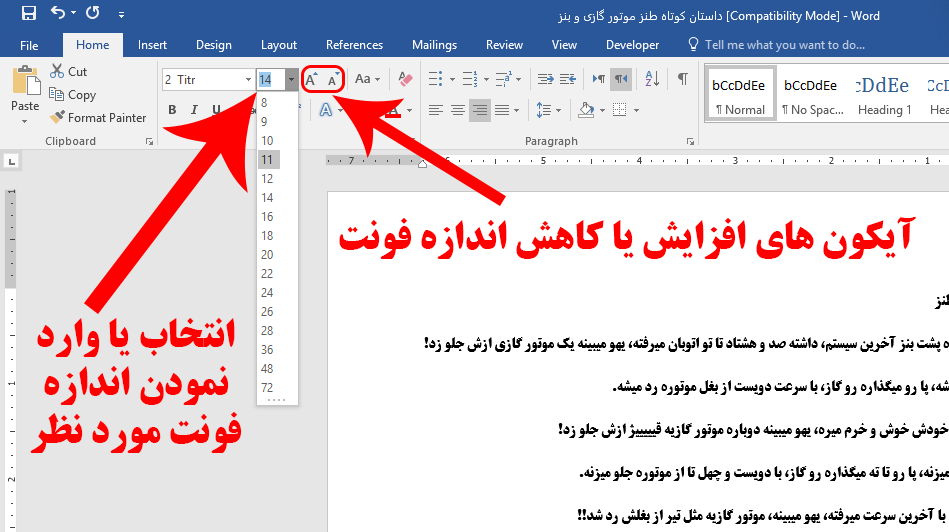

1-8 شناسايي اصول تنظيم قلم و اندازه قلم. 18

1-8-2 برگه Character Spacing (برگه دوم از كادر Font). 19

1-8-3 برگه Text Effects (برگه سوم از كادر Font). 20

1-9 آشنايي با چگونگی استفاده از پيوند در مکان مناسب(Hyperlink) 20

1-10 آشنايي با حالتهای مختلف نمايش سندDocument View.. 20

1-11 آشنايي با ابزارهای بزرگ نمايي و کوچک نمايي سند(Zoom) 21

1-12 آشنايي با چگونگی تايپ يک پاراگراف جديد. 21

1-13 فرو رفتگی و بيرون رفتگی ابتدای يك پاراگراف.. 22

1-14 آشنايي با تغيير فاصله بين سطرها Line Spacing) ) 22

1-15 آشنايي با درج صفحه گسترده در سند. 23

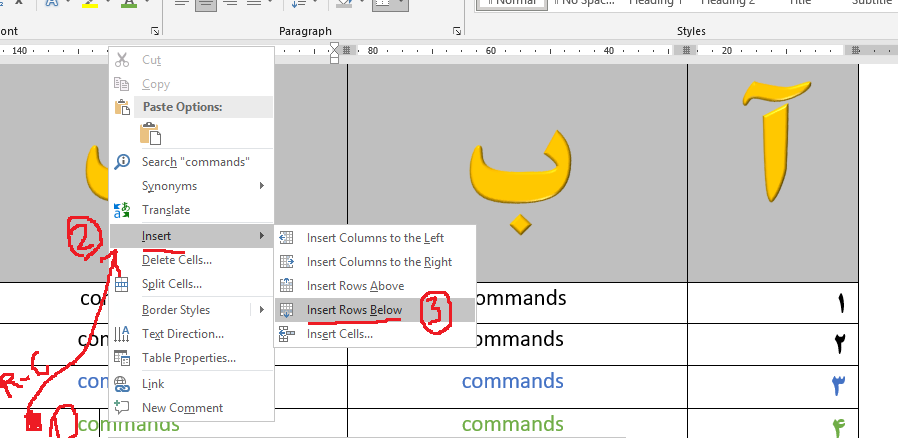

1-16 شناسايي اصول ايجاد جدول و عمليات بر روی آن. 23

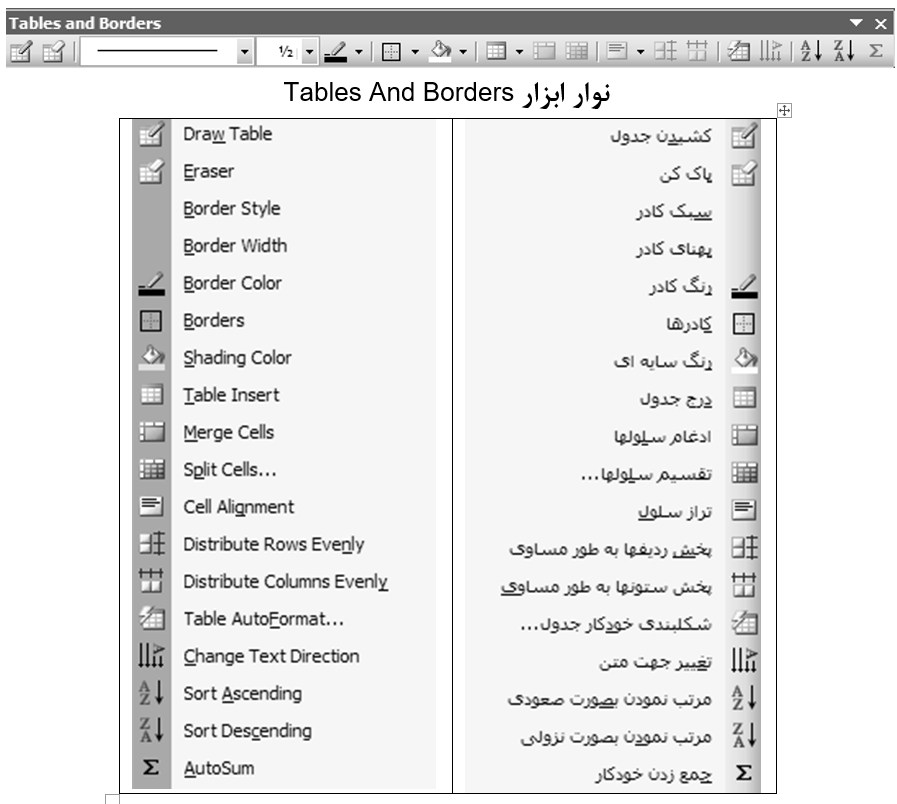

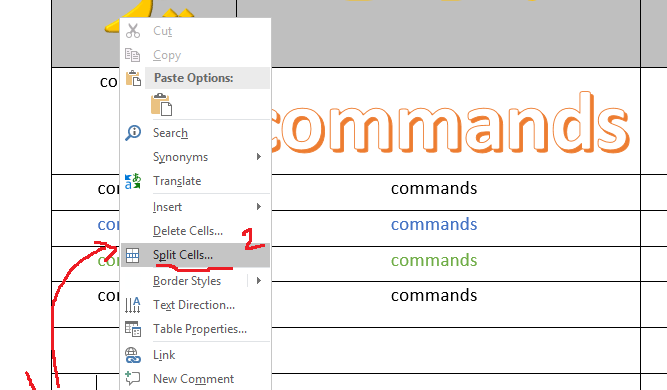

1-16-1 نمايش نوار ابزار جدولها و كادرها 24

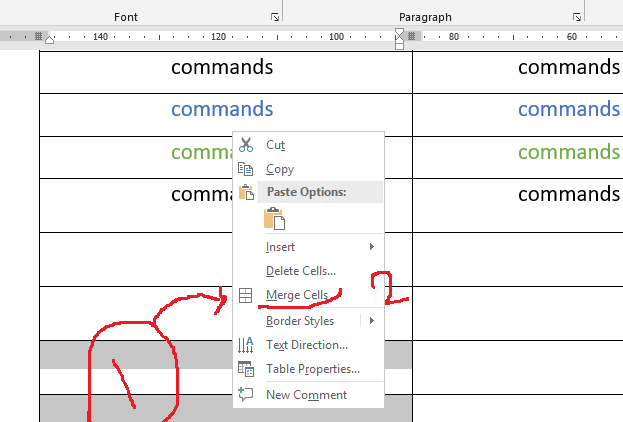

1-16-3 ادغام چند خانه از جدول در يک خانه. 26

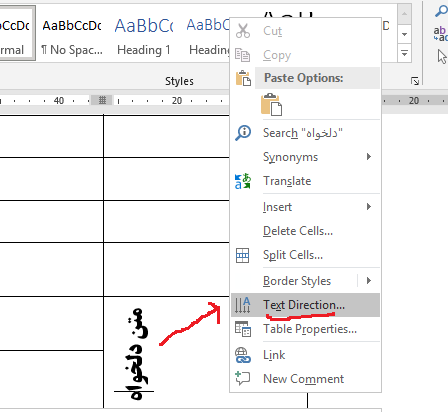

1-16-5 تايپ عمودی در خانه های جدول.. 26

1-16-7 اضافه کردن ستون و سطر جديد.. 26

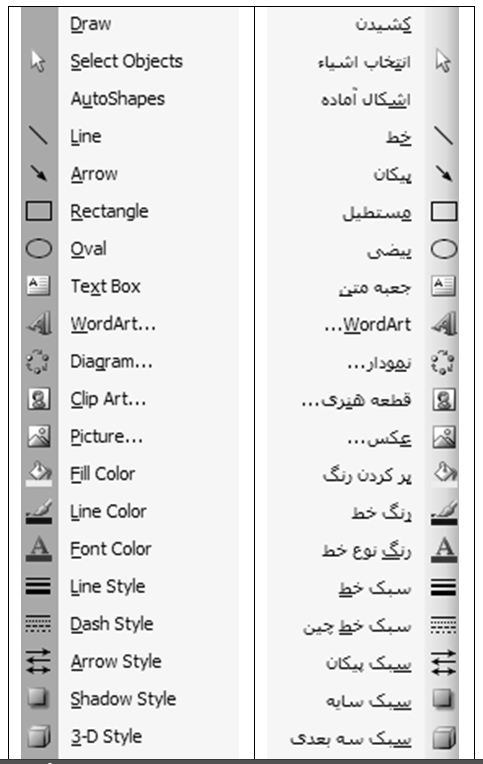

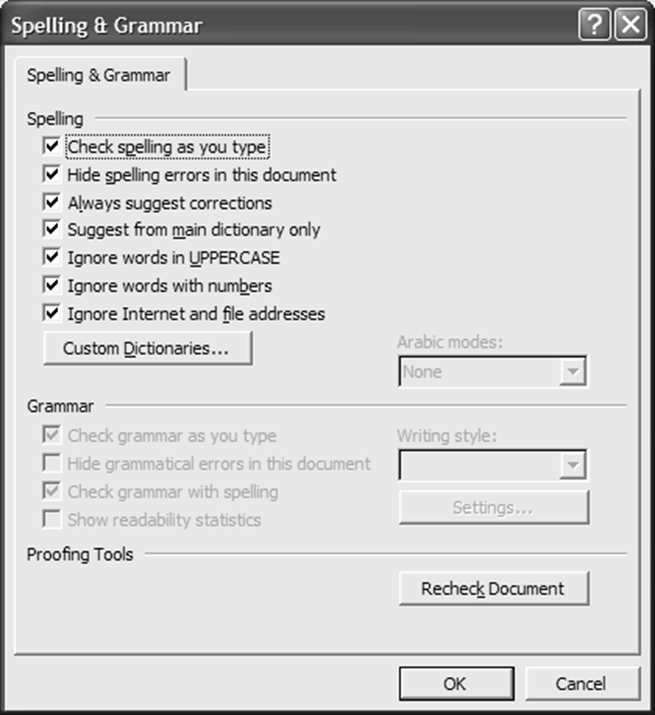

1-17 آشنايي با چگونگی کار دکمه های نوار ابزار ترسيمات (Drawing) 27

1-18 آشنايي با چگونگی استفاده از برنامه راهنما (Help) 28

1-19 آشنايي با چگونگی استفاده از ابزار بررسی کننده املايي و دستوری.. 30

1-20 آشنايي با استفاده از فهرستهای اعداد و علامتها 31

1-21 آشنايي با چگونگی ايجاد صفحات جديد در سند فعلی.. 34

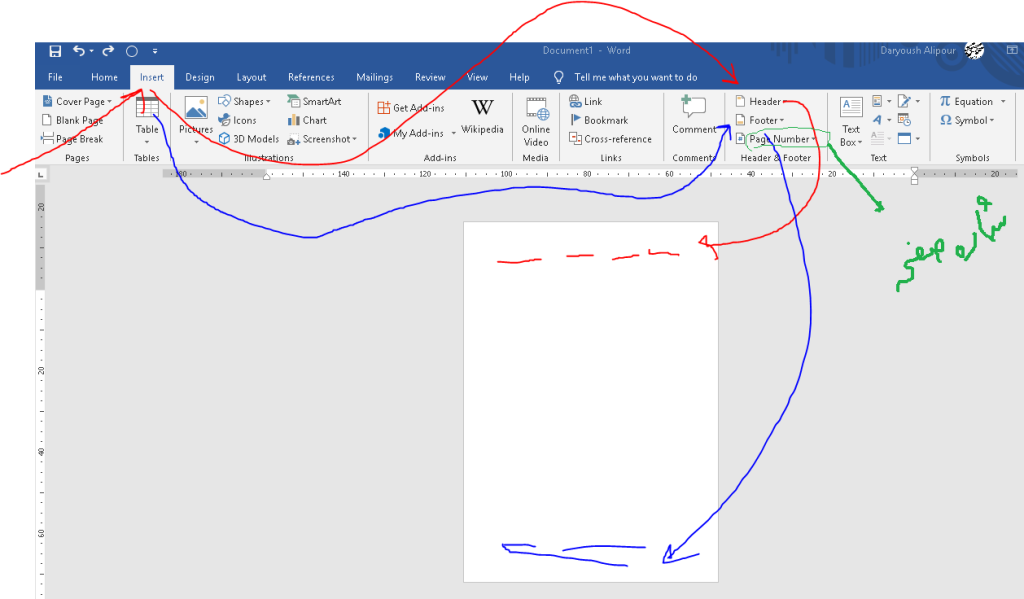

1-22 آشنايي با اضافه کردن سرصفحه و پاورقی (Header And Footer) 34

1-23 آشنايي با شماره بندي صفحات سند. 35

1-24 آشنايي با قرار دادن كاراكترهاي ويژه در سند. 36

1-25 آشنايي با درج تصوير، نمودار و اشياء ديگر. 36

1-26 آشنايي با چگونگی تنظيم قرار گرفتن متن و تصوير. 37

1-27 آشنايي با اضافه کردن کادر به سند و تنظيمات كادرها و سايه ها 37

1-28 آشنايي با استفاده از مجموعه های مختلف چند فاصله (Tab) 38

1-29 آشنايي با پيش نمايش چاپ (Print Priew) 40

1-30 آشنايي با تنظيمات صفحه و حاشيه سند. 41

1-30-4 شناسايي اصول چاپ سند (Print). 43

1-30-4-1 آشنايي با گزينه های اصلی چاپ… 43

1-31 شناسايي اصول انتخاب الگوی مناسب (Template) 45

1-32 آشنايي با بکار بردن يک شيوه يا سبك (Style) 45

1-33 آشنايي با اصول عمليات ادغام پستي (Mail Merge) 46

1-35 پاكت نامه ها و برچسب ها(Envelopes And Labels ) 52

1-36 شمارش كلمات(Word Count…) 52

1-38 ايجاد يادداشت(Comment) 52

1-39 ستون بندي متن (Columns) 52

1-40 انتقال قالب بندي(Format Painter) 53

1-42 برنامه جايگزينی کلمات (Replace ) 54

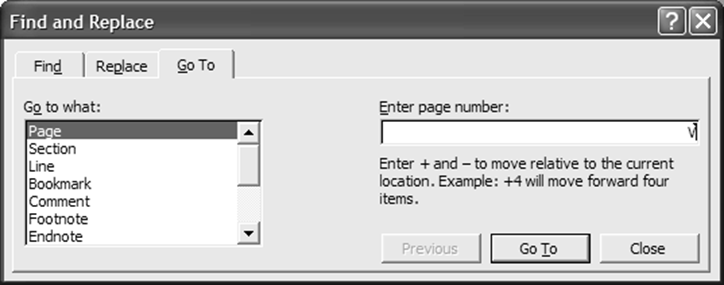

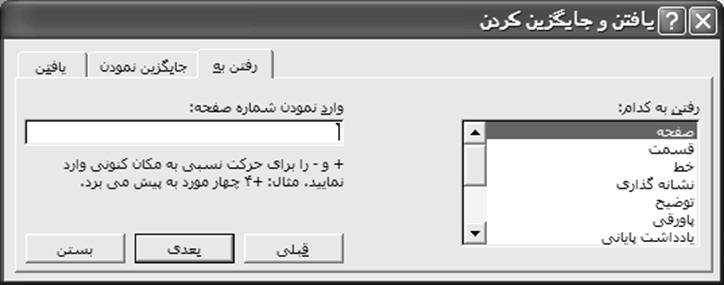

1-43 آشنايي با نحوه جابجايي سريع مکان نما در سند (Go To) 55

1-45 برخي كليدهاي ميان بر مربوط به برنامه Microsoft Office Word.. 57

“The only way of discovering the limits of the possible is to venture a little way past them into the impossible.” Arthur C. Clarke

مقدمه

The International Computer Driving Licence (ICDL) is a standard course recognized in more than 35 countries, and holders of the certificate are given priority in employment and job placement. The aim of ICDL is to raise users' information-technology skills and to improve the quality and quantity of work done in offices, institutions and companies. The ICDL certificate shows that its holder has the necessary technical knowledge and computer skills, and it assures employers and workplace managers that this knowledge is current and valid.

The ICDL skills are: Basic concepts of information technology (IT) – Using the computer and managing files – Information and communication (Internet and e-mail) – Word processing (Word) – Spreadsheets (Excel) – Slide making and presentations (PowerPoint) – Databases (Access)

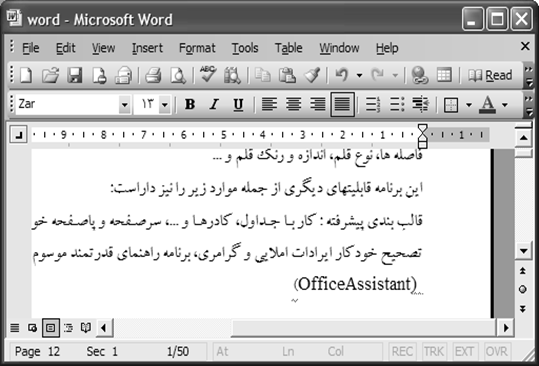

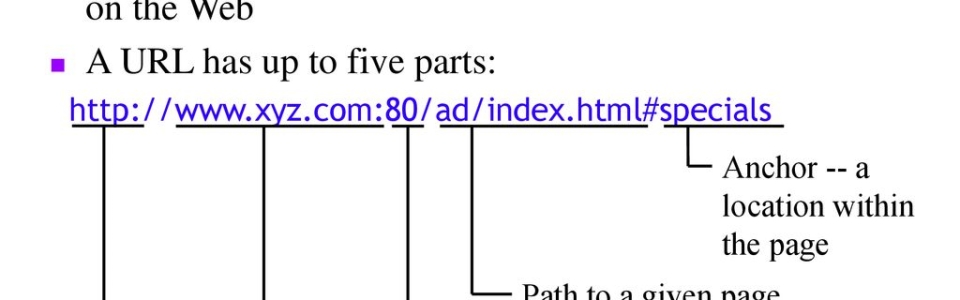

In line with the latest Technical and Vocational standards, this book presents the Word skill, which is one of the first-level skills of this course. Word is part of the Microsoft Office suite, which most offices, organizations and companies use as a main tool and which is also popular with home users. All Office products can save their files in HTML (the format of web files) so that they can also be displayed on the web. The suite is used by millions of people in many countries, and familiarity with its programs is considered essential. The programs are described briefly below:

– Word: a word-processing program, ideal for producing letters, reports, newspapers, newsletters and advertisements. Its features include paragraph formatting, page layout, table creation, headers and footers (Header and Footer), and spelling and grammar correction.

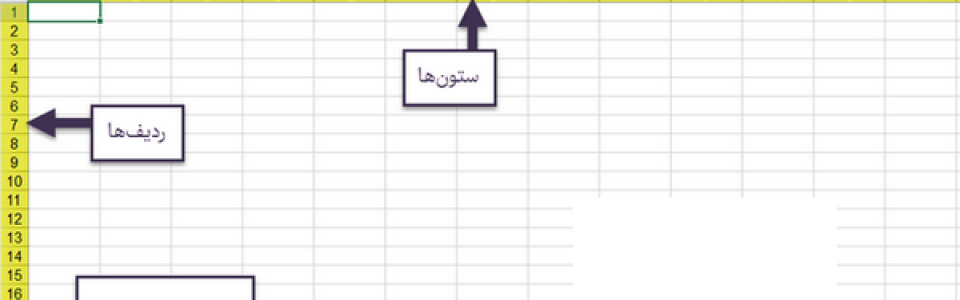

– Excel: a well-known spreadsheet program for working with numbers, formulas, charts and functions. All kinds of calculations can be done in it, from addition and subtraction to variance and standard deviation. Creating formulas, sorting columns and rows, and drawing bar, line, hierarchical, pyramid and other charts are some of its features.

– PowerPoint: a simple and attractive program for making slides and presentations. It is designed for creating Presentation files. We can prepare slides for a talk or any material we plan to present and show them on a screen with a video projector. Slides can be given motion and interesting effects, graphics and animation can be used in them, sounds can be added to make them more engaging, and pages can be linked so that moving between slides is easy.

– Access: a database program used for organizing information such as a contact list or a music collection, for creating data-entry forms, and for producing reports. With it we can build the database we need easily and within a few minutes. A database is a collection of information. For example, all the personal details (first name, last name, ID number and so on) of the teachers and students of a school can be kept in one database on the computer.

Some other programs in this suite are Publisher, Outlook and others.

We accept that this work is not free of shortcomings and errors. We hope you will help us correct them by sending your criticisms and suggestions to tirotir2@gmail.com.

مؤلفين

بخش نخست

توانايي کار با واژه پرداز Microsoft Word

1-1 آشنايی با تعريف واژه پرداز

– Typing: to create a document, we type the desired text and save (Save) the result.

– Editing: changing, deleting, copying and moving text is called editing. (These operations are not possible on a typewriter.)

– Formatting: the appearance of the typed material can be changed, for example spacing, font type, font size and color, tables, borders, headers and footers, printing, automatic correction of spelling and grammar errors, and so on.

1-2 آشنايي با اجراي برنامه Word

از منوي Start گزينه All Programs و پس از آن Microsoft Office و نهايتاً Microsoft Office Word را كليك مي كنيم. با دابل كليك روي آيكنِ برنامه نيز مي توان آن را اجرا نمود.

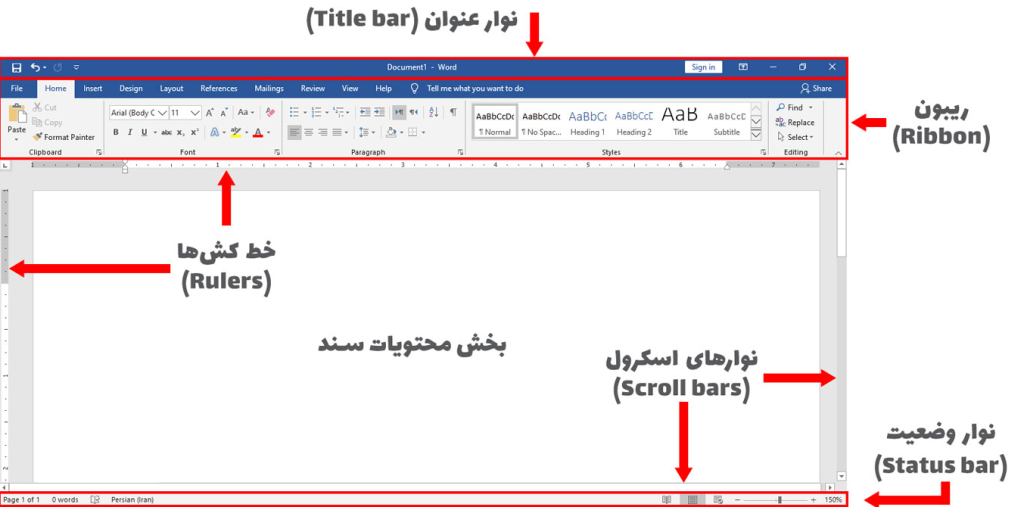

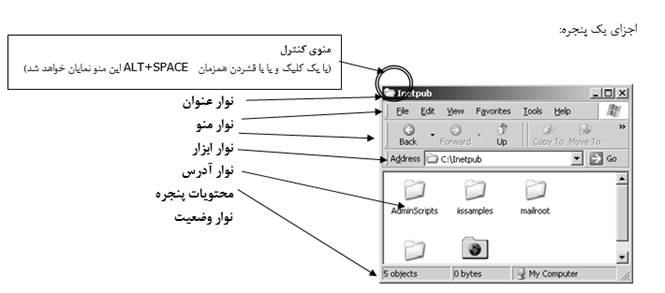

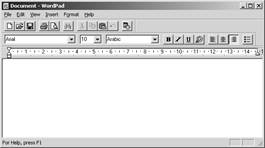

1-3 آشنايی با محيط اصلي Microsoft Word

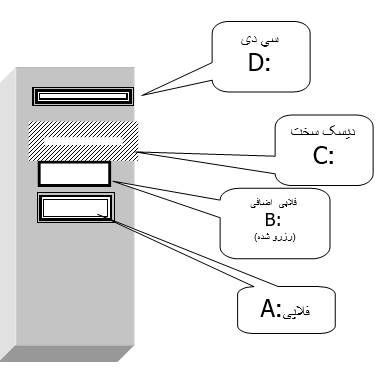

با توجه به شكل ، ابتدا با مهمترين قسمتهاي اين برنامه، بطور مختصر آشنا شده و سپس به جزييات بيشتري خواهيم پرداخت.

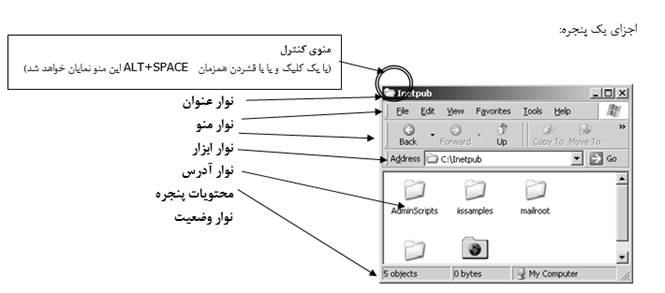

1-3-1 نوار عنوانTitle Bar

بالاترين نوار در محيط اين برنامه مي باشد. نام برنامه “Microsoft Word” و سپس نام پيش فرض براي سند باز شده فعلي”Document1” مشاهده مي گردد.

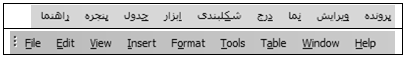

1-3-2 نوار منو Menu Bar

در زير نوار عنوان قرار داشته و ليستي از فرمانها را كه هر كدام از آنها نيز، يك ليست فرعي دارند، نمايش مي دهد. برخي از مهمترين اين فرمانها، براي راحتي كار، در نوار هاي ابزار برنامه قرار داده شده اند، كه بعداً به تفصيل شرح داده خواهند شد.

توسط اشاره گر ماوس و يا با فشردن كليد Alt و سپس كليدهاي جهت نما نيز قادريم گزينه دلخواه را در منو اجرا كنيم.

1-3-3 نوارهاي ابزارToolbars

برخي از مهمترين فرمانها بصورت آيكن در نوارهاي ابزار قرار داده شده است. معمولاً دو نوار ابزار زير در محيط برنامه بوده و وجود آنها بطور كلي لازم مي باشد.

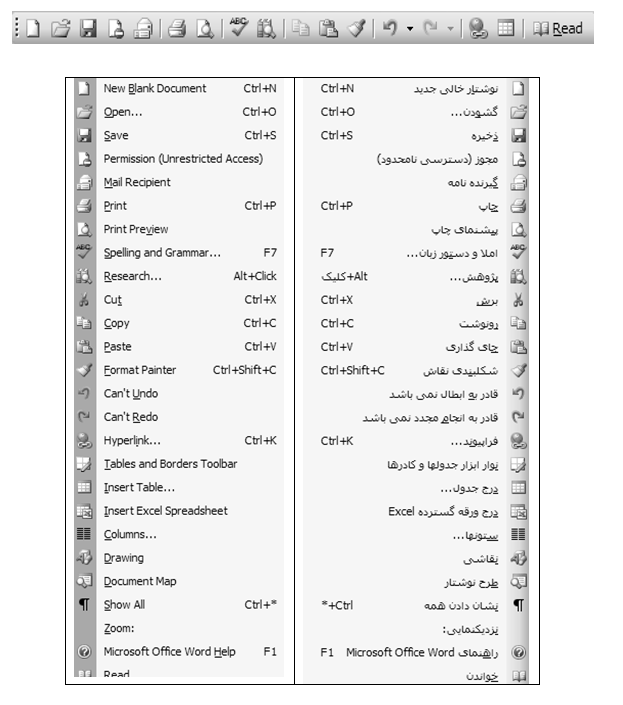

1-3-3-1 نوار ابزار استاندارد

نوار ابزار استاندارد، در بالاي محيط اصلي برنامه Word قرار داشته و شامل فرمانهايي از قبيل ايجاد سند، باز نمودن سند، ذخيره، چاپ و … مي باشد.

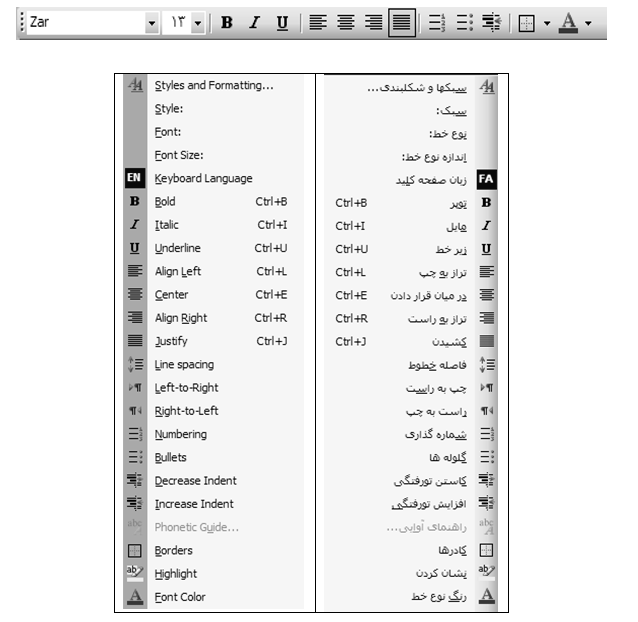

1-3-3-2 نوار ابزار Formatting

اين نوار ابزار شامل فرمانهاي بسيار مهمي مي باشد كه براي قالب بندي و فرم دهي به مطالبِ موجود در سندِ فعلي كاربرد دارند. قبلاً يادآور شده ايم كه بهتر است متن خود را ابتدا بطور كامل نوشته و ذخيره نموده و سپس آنرا قالب بندي و ويرايش نماييم. و البته مي توان قبل از شروع به نوشتن قالب خود را انتخاب و تنظيم نموده و سپس به تايپ بپردازيم.

اگر بعد از نوشتن، بخواهيم هر قسمتي از متن را قالب بندي و ويرايش نماييم، ابتدا بايد آن را انتخاب (بلوكه )نموده و سپس با استفاده از دكمه هاي نوار ابزار قالب بندي، تغييرات دلخواه را اعمال نماييم.

با راست كليك روي يكي از نوارهاي ابزار و يا از طريق گزينه Toolbars از منويView میتوان نوارهاي ابزار را پنهان و يا آشكار ساخت.

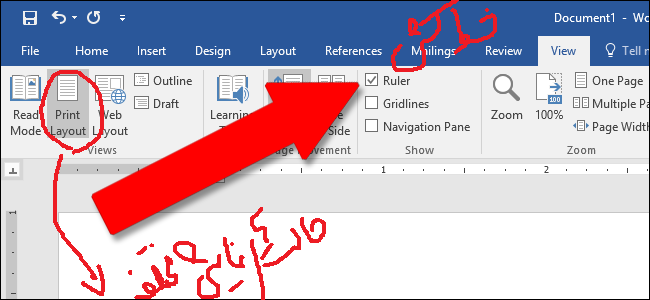

1-3-4 خط كشRuler

خط كش در زير نوارهاي ابزار و بالاي محيط تايپ مشاهده مي شود و براي تنظيم سريع متن سند كاربرد دارد. براي آشكار و يا مخفي ساختن خط كش مي توانيم از منوي View گزينه Ruler را انتخاب نماييم.

1-3-5 محيط تايپ (Text Area)

The large area below the ruler is called the “Text Area”. This is where we type and place our content. The blinking mark at the top right or top left corner is called the insertion point (cursor); whatever we type is inserted at its current position.

After creating a document and typing the text, we leave Word with the Exit option on the File menu. If the result of our work has not been saved, the following message appears:

“Do You Want To Save Changes To Document1?”

If we choose Yes, the document is saved; if we choose No, the document is closed without being saved.

1-3-6 نوار وضعيت (Status Bar)

This bar is at the very bottom of the program window and shows important information about the current position of the insertion point.

As the next figure shows, the status bar contains the following items:

- Page: the number of the page that is currently active.

- Sec: the section of the document in which the insertion point is located.

- 1/1: two numbers separated by a slash (/); the first is the current page number and the second is the total number of pages in the document.

- At: the distance of the insertion point from the top of the page (for example At 1").

- Ln: the current line number (for example Ln 1).

- Col: the current column number (for example Col 1).
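To make the Ln and Col indicators of the status bar more concrete, here is a minimal Python sketch that derives a line and column number from a cursor offset in plain text. It is only an illustration under simple assumptions (lines end with a newline character, counting starts at 1); Word's own calculation depends on page layout and is not shown here, and the function name is hypothetical.

```python
def cursor_position(text, offset):
    """Return (line, column), both starting at 1, for a cursor placed before text[offset]."""
    before = text[:offset]
    line = before.count("\n") + 1          # one more than the newlines already passed
    line_start = before.rfind("\n") + 1    # index where the current line begins
    column = offset - line_start + 1
    return line, column


sample = "first line\nsecond line\nthird line"
print(cursor_position(sample, 0))    # start of the text
print(cursor_position(sample, 13))   # a few characters into the second line
```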

1-3-7 نوارهاي پيمايش افقي(Horizontal) و عمودي(Vertical)

اين نوارها به ما امكان مي دهند تا سندي كه محتويات آن بيشتر از يك صفحه مي باشد را پيمايش نماييم. براي اين كار مي توان دكمه لغزنده موجود در نوار را به طرفين Drag نمود و يا اينكه روي دكمه هاي ابتدا و انتهاي نوار كليك مي كنيم.

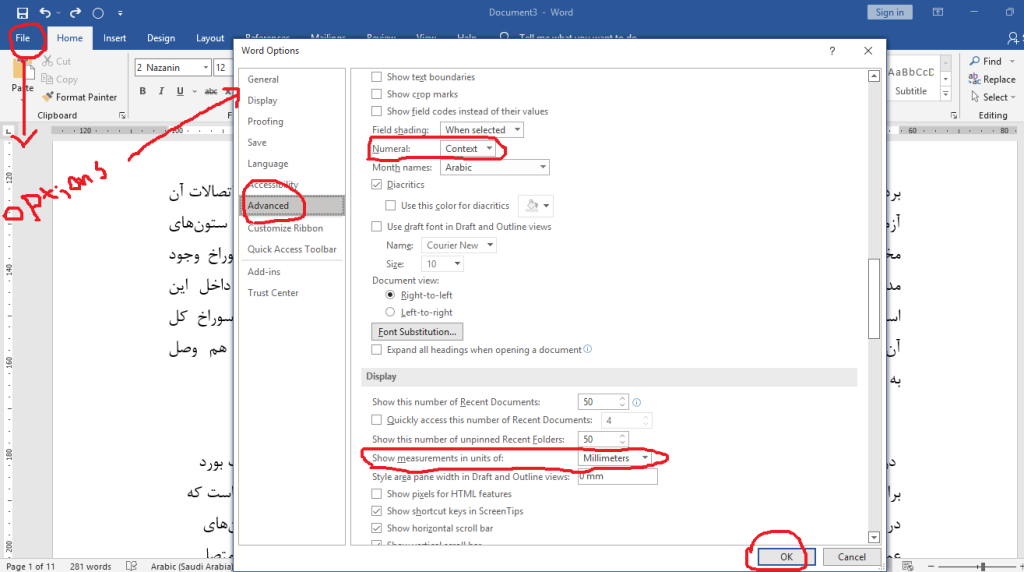

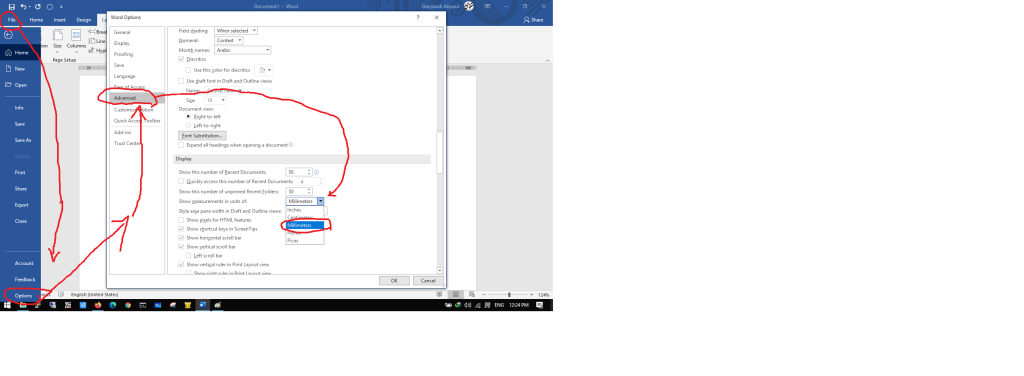

مي توان نوار وضعيت، نوارهاي پيمايش افقي و عمودي را از منوي Toolsو گزينه Options و نهايتاً گزينه View آشكار ويا مخفي ساخت.

1-4 آشنايي با ايجاد سند جديد

On the Standard toolbar we click the New button, or choose New… from the File menu. (Pressing Ctrl + N does the same.)

Choosing New… from the File menu opens a box whose options let us pick a sample document with ready-made formatting and create the current document based on it.

| Create a new document | Create a new document from templates |

اگر از منوي View گزينه Task Pane علامت دار باشد، در آنصورت ليستي براي انجام وظايف،در سمت راست اين برنامه مشاهده خواهد شد. در حالت معمولی اگر گزينه Getting Started را در بالای ليست وظيفه (Task Pane)انتخاب کنيم، آنگاه يک ليست فرعی شامل برخی دستورات ظاهر خواهد شد.

1-5 آشنايي با چگونگی باز نمودن سند موجود

On the Standard toolbar we click the Open button, or choose Open… from the File menu. (Pressing Ctrl + O does the same.)

1-6 آشنايي با ذخيره کردن سند فعلي با فرمتهای گوناگون

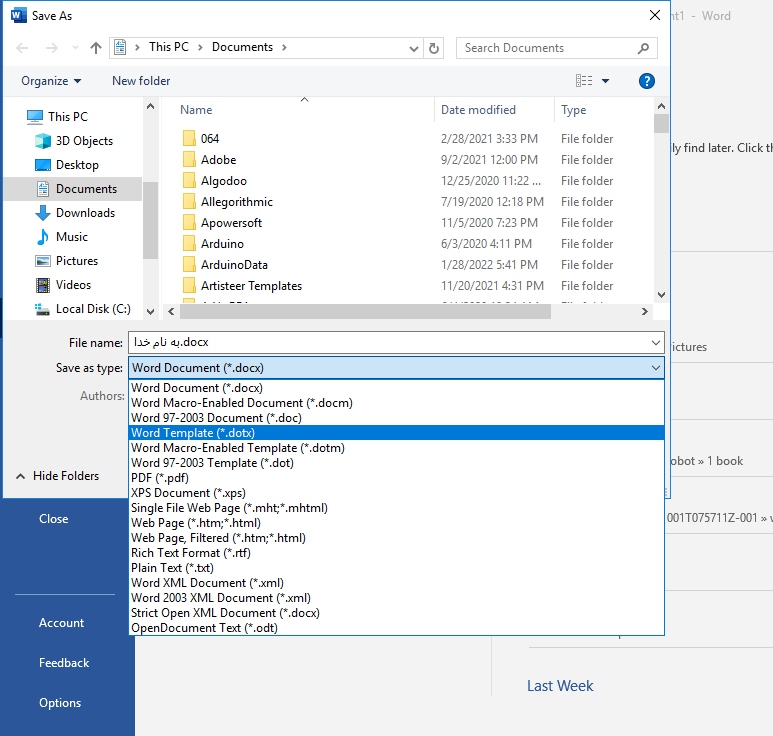

On the Standard toolbar we click the Save button, or choose Save from the File menu. (Pressing Ctrl + S does the same.) To make a copy of the current document under a different name or in a different location, we choose Save As from the File menu and make the required settings in the dialog box that opens.

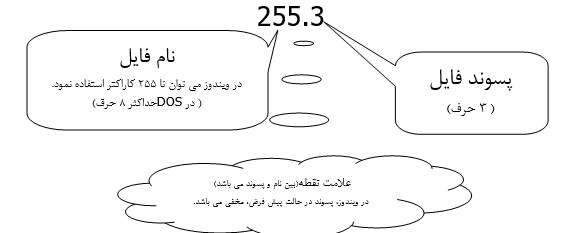

الف) در قسمت بالا (:Save In) محل ذخيره شدن را تعيين مي نماييم.

ب) در قسمت پايين (File Name) نامي را براي سند تايپ مي كنيم.

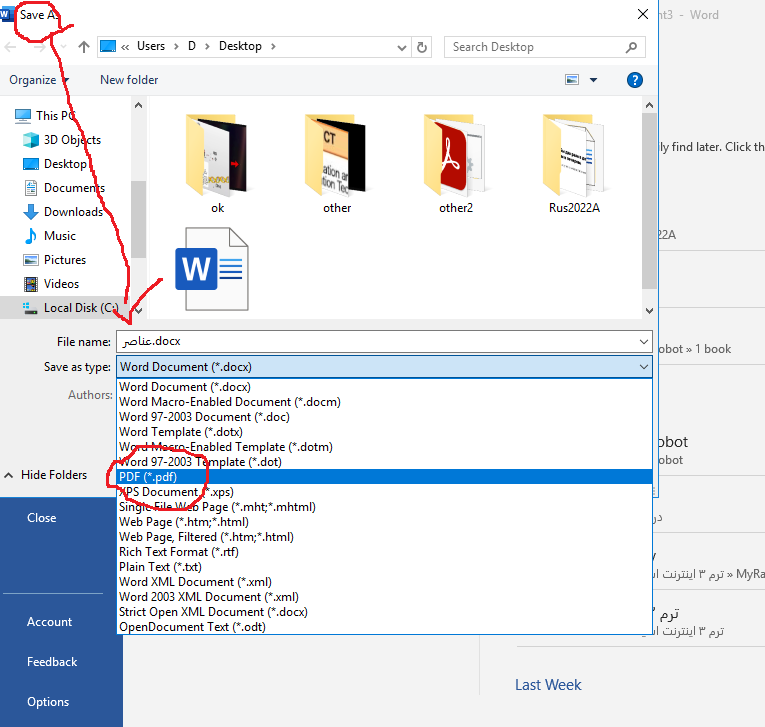

ج) در پايين ترين ليست بازشونده(Save As Type) نوع قالب بندي و پسوند سند را تعيين مي سازيم.

If we change the document format from .doc to .htm or .html, the document becomes suitable for publishing on the web.
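To give an idea of what saving as a web page means, the sketch below turns a few paragraphs of plain text into a very small HTML file. This is only an assumed, simplified illustration in Python; the function name and file name are hypothetical, and the HTML that Word itself writes is far more detailed.

```python
import html


def to_html(title, paragraphs):
    """Wrap plain-text paragraphs in a minimal HTML page."""
    body = "\n".join("<p>" + html.escape(p) + "</p>" for p in paragraphs)
    return (
        "<!DOCTYPE html>\n"
        '<html><head><meta charset="utf-8"><title>'
        + html.escape(title)
        + "</title></head>\n<body>\n"
        + body
        + "\n</body></html>\n"
    )


page = to_html("Document1", ["First paragraph.", "Prices < 10 & discounts"])

# Writing the result with an .html extension makes it openable in a web browser.
with open("document1.html", "w", encoding="utf-8") as handle:
    handle.write(page)
```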

1-7 آشنايی با اصول ويرايش متن

با استفاده از منوی Edit و بسياری از دکمه های نوار ابزار استاندارد وقالب بندی می توان عمليات ويرايشی مناسب را روی متن دلخواه اجرا نمود. در ادامه بدليل اهميت موضوع، مطالبی درباره تغيير زبان صفحه کليد، چگونگی انتخاب متن و عمليات کپی، بريدن، چسباندن، حذف متن و … را به تفصيل شرح خواهيم داد.

If Windows supports Persian (or any other language) in addition to English, the keyboard language can be changed in several ways. For example, on the Formatting toolbar we click the Left-to-Right button to switch the keyboard to English, or the Right-to-Left button to switch it to Persian.

In many setups, the left Ctrl (or Alt) and Shift keys switch to English, and the right Ctrl (or Alt) and Shift keys switch to Persian.

1-7-1 آشنايي با چگونگی انتخاب متن (Highlighting Text )

براي عمليات ويرايشي و قالب بندي مي بايست ابتدا متن موردنظر را به حالت انتخاب درآورد. به عنوان مثال اگر قصد داشته باشيم سطري از متنِ سند را در قسمتي ديگر از سند كپي نماييم، مي بايست قبل از هرچيز، آن سطر را به حالت انتخاب درآوريم. متن انتخاب شده برای قبول دستورات ويرايشی، رنگی و متمايز می شود.

چند روش براي انتخاب متن دلخواه از يك سند وجود دارد:

- مكان نما را در ابتدا يا انتهاي عبارت دلخواه قرار داده و كليد Shift را نگه مي داريم و سپس به كمك كليدهاي جهت نما به طرفين، حركت نموده و آن متن را به حالت انتخاب درمي آوريم.

- با دابل كليك روي كلمه موردنظر مي توان آن را به انتخاب درآورد.

- اشاره گر ماوس را به ابتدا يا انتهاي متن موردنظر انتقال داده و دكمه چپ را نگه داشته و ماوس را به سمت دلخواه حركت مي دهيم.

| متن انتخاب شده |

1-7-2 شناسايي اصول کپی، بريدن، چسباندن و حذف متن

گاهی لازم است قسمت زيادی از متن به جای ديگر از سند کپی، جابجا و يا حذف گردد. در اين هنگام می توان بدون دوباره کاری، از دستورات ساده ای به شرح زير استفاده نمود.

1-7-3 Cutting text (Cut)

To move a piece of text, we first select it and then click the Cut button on the toolbar, or right-click the text and choose Cut. (Pressing Ctrl + X does the same.)

1-7-4 Copying text (Copy)

To copy a piece of text, we first select it and then click the Copy button on the toolbar, or right-click the text and choose Copy. (Pressing Ctrl + C does the same.)

1-7-5 Pasting text (Paste)

When we Copy or Cut an item, it is placed in temporary memory (the clipboard), and a copy of it can then be placed wherever we want.

To paste the cut or copied text somewhere in the document, we first move the insertion point to the destination and then click the Paste button on the toolbar, or right-click at that position and choose Paste. (Pressing Ctrl + V does the same.) The cut or copied item can also be pasted into other documents, and even into documents of some other related programs.

Copy and Cut are also available from the Edit menu.
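The relationship between Cut, Copy, Paste and the temporary memory can be modeled in a few lines. The following Python class is a simplified sketch with hypothetical names; it only assumes that the clipboard holds one piece of text at a time.

```python
class Document:
    """A tiny model of a document with a one-item clipboard."""

    def __init__(self, text):
        self.text = text
        self.clipboard = ""

    def copy(self, start, end):
        # Copy leaves the document unchanged and stores the selection.
        self.clipboard = self.text[start:end]

    def cut(self, start, end):
        # Cut stores the selection and removes it from the document.
        self.copy(start, end)
        self.text = self.text[:start] + self.text[end:]

    def paste(self, position):
        # Paste inserts a copy of the clipboard; the clipboard keeps its content.
        self.text = self.text[:position] + self.clipboard + self.text[position:]


doc = Document("one two three")
doc.cut(4, 8)    # selects and removes "two "
doc.paste(0)     # inserts it at the beginning
print(doc.text)
```

Because Paste does not empty the clipboard, the same item can be pasted several times, which matches the behavior described above.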

1-7-6 حذف متن

ابتدا متن موردنظر را انتخاب نموده و سپس دکمه Delete را روی صفحه کليد فشار می دهيم و يا اين که از منوی Edit گزينه Clear را انتخاب نموده و يکی از دستورات موجود در منوی فرعی را اجرا می کنيم:

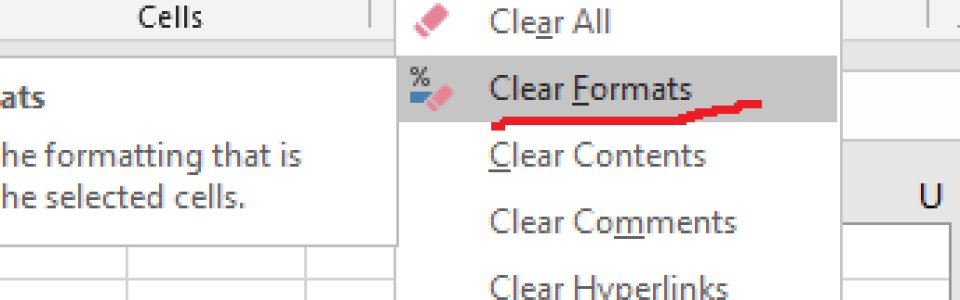

- Formats : فقط قالب بندی متن انتخاب شده حذف می گردد.

- Contents : کل متن انتخاب شده حذف خواهد شد.

1-7-7 Highlight color (Highlight)

The Highlight button on the Formatting toolbar highlights the selected text and sets a background color for it.

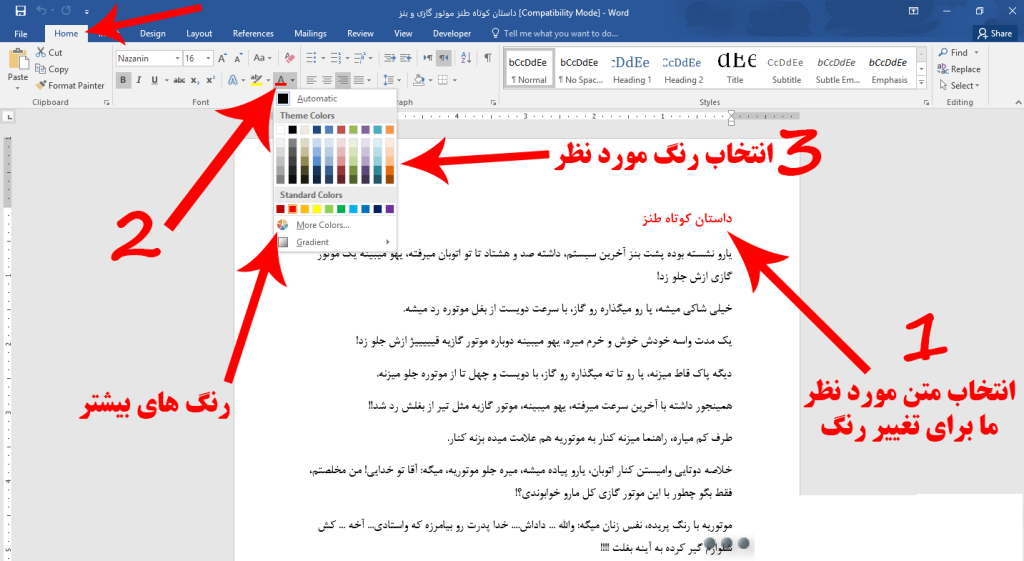

1-7-8 Font Color

The Font Color button at the right end of the Formatting toolbar changes the color of the text.

1-7-9 Using the Undo command

Whenever we have done something while typing, editing or formatting and want it to be ignored so that the text returns to its previous state, we click the Undo button on the Standard toolbar. (Pressing Ctrl + Z does the same.)

To cancel an Undo, we click the Redo button on the toolbar.
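Undo and Redo are commonly explained with two stacks: one holding earlier states and one holding undone states. The Python sketch below follows that common model; it is an assumption used for illustration, not a description of how Word stores its history, and the class name is hypothetical.

```python
class History:
    """Keeps earlier and undone versions of a text in two stacks."""

    def __init__(self, text=""):
        self.text = text
        self._undo = []   # states we can go back to
        self._redo = []   # states we can return to after an undo

    def edit(self, new_text):
        self._undo.append(self.text)
        self._redo.clear()          # a new edit discards the redo chain
        self.text = new_text

    def undo(self):
        if self._undo:
            self._redo.append(self.text)
            self.text = self._undo.pop()

    def redo(self):
        if self._redo:
            self._undo.append(self.text)
            self.text = self._redo.pop()


history = History("Hello")
history.edit("Hello world")
history.edit("Hello world!")
history.undo()
print(history.text)
history.redo()
print(history.text)
```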

1-7-10 Making the selected text bold (Bold)

The Bold button on the Formatting toolbar makes the selected text bold. (Ctrl + B)

1-7-11 Underlining the selected text (Underline)

The Underline button on the Formatting toolbar underlines the selected text. (Ctrl + U)

1-7-12 Making the selected text italic (Italic)

The Italic button on the Formatting toolbar makes the selected text italic. (Ctrl + I)

As an example, we type a few lines and set them to normal, bold, underlined and italic:

| This line is in normal style. |

| This line is bold. |

| This line is underlined. |

| This line is italic. |

1-7-13 آشنايي با ابزار چيدمان

برای تنظيم و مرتب نمودن متن در نوار ابزار قالب بندي تنظيمات زير امکان پذير مي باشد.

| Align text to the left | Align Left |

| Center the text | Center |

| Align text to the right | Align Right |

| Align text to both margins | Justify |

When we click anywhere in a line or paragraph and press one of the buttons above, the text of that part is aligned relative to the edges of the page.

Example) In the text below, the first line is centered, the second line is right-aligned and the third line is left-aligned.

به نام خدا

توانا بود هركه دانا بود

ز دانش دل پير برنا بود
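The three alignments in this example can be imitated with plain text of a fixed width. The Python sketch below uses the standard string methods center, rjust and ljust; the width of 30 characters and the sample lines are assumptions chosen only for illustration.

```python
WIDTH = 30  # assumed line width in characters

lines = ["first line", "second line", "third line"]

centered = lines[0].center(WIDTH)   # like Center
right = lines[1].rjust(WIDTH)       # like Align Right
left = lines[2].ljust(WIDTH)        # like Align Left

for row in (centered, right, left):
    print("|" + row + "|")          # the bars mark the page edges
```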

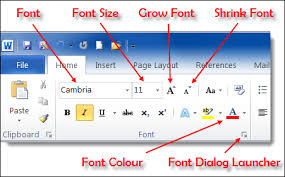

1-8 شناسايي اصول تنظيم قلم و اندازه قلم

To change the style, font and size, we first select the text and then use the first box on this toolbar (Style) to change the style, the second box (Font) to change the font, and the third box (Font Size) to set the size.

| Choose a style for the text |

| Font |

| Font size |

| Change language |

For example, we type a line, select it, and then make the required changes with the Formatting toolbar buttons (as in the previous figure).

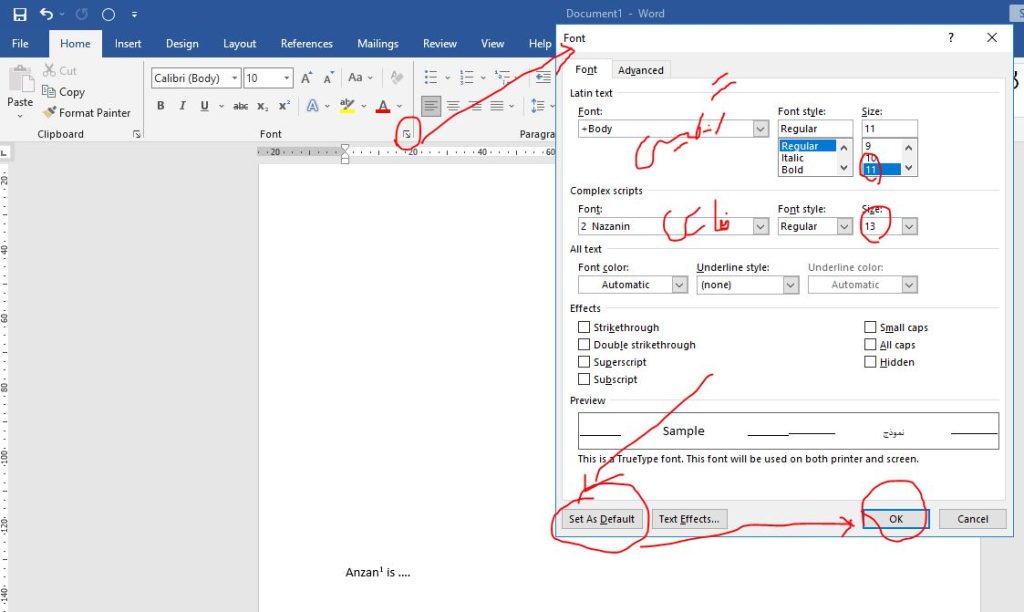

1-8-1 سربرگ Font از کادر Format

همچنين از طريق منوي Format و گزينه Font… نيز مي توان ظاهر قلم را تغيير داد. به شكل بعد و توضيحات آن توجه فرماييد.

If, after making the desired settings, we click the Default… button at the bottom left, the settings become permanent.

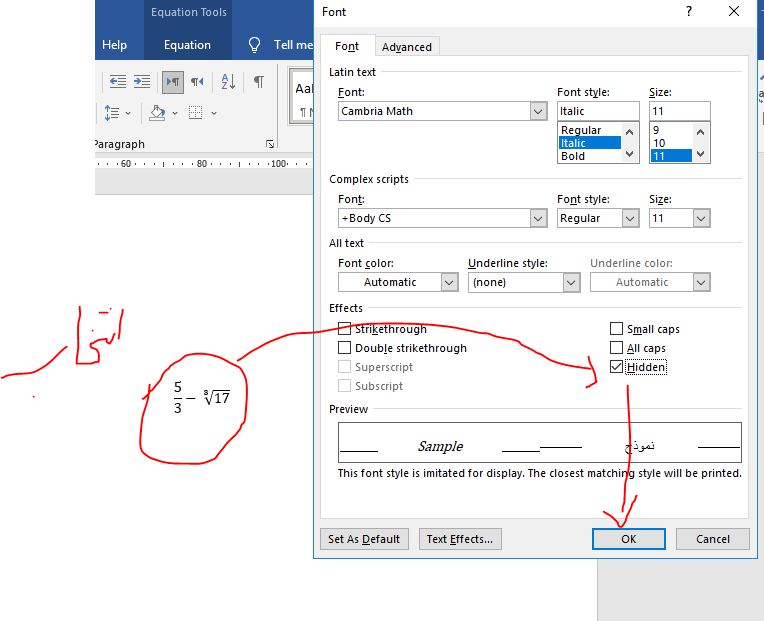

Note that in the middle part of this dialog box (Effects), the following options turn the selected numbers or text into superscript (exponent) or subscript (index).

Superscript: raised text (exponent), such as the digits 2 and 5 in the expression 3² + 4⁵ = ?

(The key combination Ctrl+Shift+=, that is Ctrl and Shift with the plus key, can also be used.)

Subscript: lowered text (index), such as the numbers 2 and 10 in the expression (110101)₂ = (?)₁₀

(The key combination Ctrl+=, the same key without Shift, can also be used.)
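For readers who want to check the two sample expressions, reading the superscript example as 3² + 4⁵ and the subscript example as the binary number (110101)₂ converted to base 10, a short Python calculation is enough. The variable names are chosen only for this sketch.

```python
# Superscript example: 3 to the power 2 plus 4 to the power 5.
power_sum = 3**2 + 4**5        # 9 + 1024

# Subscript example: the base-2 number 110101 written in base 10.
decimal_value = int("110101", 2)

print(power_sum)
print(decimal_value)
```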

1-8-2 برگه Character Spacing (برگه دوم از كادر Font)

در صفحه محاوره اي اين برگه، توسط قسمت Scale ميتوان كاراكترهاي قلم را فشرده ساخت يا باز نمود. قسمت Spacing در اين برگه، فاصله بين حروف يك كلمه را تغيير داده وكم و يا زياد مي كند. و قسمت Position براي جابجايي متن سند نسبت به خط اصلي مي باشد. (Lowered) پائين تر و (Raised) بالاتر از خط اصلي .

1-8-3 برگه Text Effects (برگه سوم از كادر Font)

اگر متن خود را بلوك كرده و يكي از گزينه هاي اين كادر محاوره اي را انتخاب كنيم آنگاه دور متن انتخابي ما جلوه هاي متحرك و جالبي نمايان ميشود. البته اين Animation و جلوه ها ارزش چاپي ندارند.

1-9 آشنايي با چگونگی استفاده از پيوند در مکان مناسب(Hyperlink)

If we want a piece of text to behave like a link on a web page, so that clicking it opens another page or program, we use the Insert Hyperlink button.

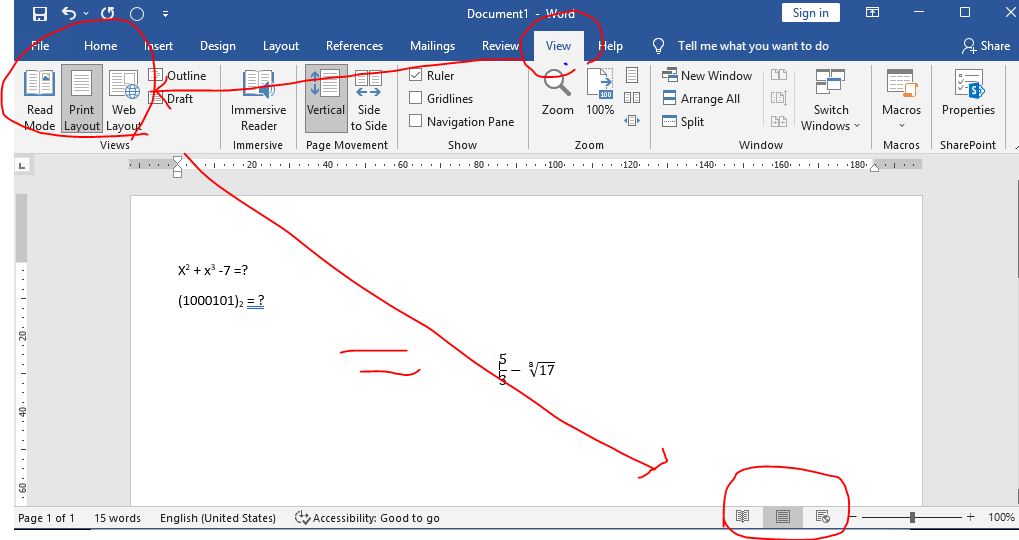

1-10 آشنايي با حالتهای مختلف نمايش سندDocument View

در اين برنامه مي توان از منوي View يكي از حالات نمايشي زير را تنظيم نمود:

Normal, Web Layout, Print Layout, Reading Layout, Outline

در اين قسمت بطور مختصر هر كدام از حالات نمايشي فوق شرح داده مي شود:

– Normal : در اين نما قالب بندی متنِ سند در حالت معمولي نمايش داده خواهد شد.

– Web Layout : نمايش سند بصورتي كه در برنامه مرورگر وب مشاهده خواهد شد.

– Print Layout : در اين حالت سند به همان شكلي كه قرار است چاپ شود، نمايش داده خواهد شد.

–Reading Layout : اين حالت برای مطالعه سند مناسب است.

– Outline : در اين حالت طرح کلی سند شامل عناوين اصلی و فرعی نمايش داده خواهد شد.

در ضمن در گوشه پايين و چپِ محيط برنامه Microsoft Word دكمه هايي براي تغيير وضعيت نمايشي در دسترس است(با عملكردي مشابه با گزينه هاي مشروح فوق)

1-11 آشنايي با ابزارهای بزرگ نمايي و کوچک نمايي سند(Zoom)

At the right end of the Standard toolbar there is a drop-down list (Zoom) for changing the magnification of the document. For example, when it is set to 200%, the document is shown at twice its size. Alternatively, we can choose Zoom… from the View menu and set the magnification percentage in the small dialog box that opens.

1-12 آشنايي با چگونگی تايپ يک پاراگراف جديد

از نظر برنامه Microsoft Word هر گاه كليد Enter فشرده شود،پاراگراف فعلي خاتمه پذيرفته و پاراگراف جديدي آغاز خواهد شد. پاراگراف می تواند يک حرف، يک کلمه يا چندين سطر باشد.

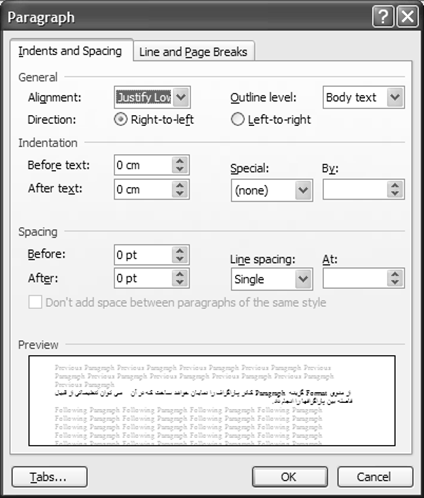

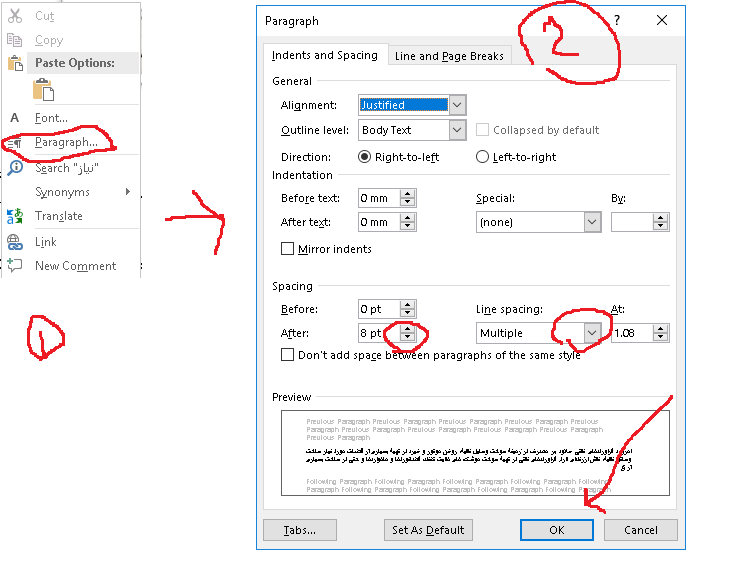

از منوي Format گزينه Paragraph كادر پاراگراف را نمايان خواهد ساخت كه در آن مي توان تنظيماتي از قبيل فاصله بين پاراگرافها را انجام داد.

To display marks such as the ends of paragraphs and the spaces in the document, we can click the Show/Hide ¶ button on the Standard toolbar.

1-13 فرو رفتگی و بيرون رفتگی ابتدای يك پاراگراف

The Increase Indent button on the Formatting toolbar indents a paragraph. To outdent a paragraph, we click in a line that is indented from the left or right margin and then click the Decrease Indent button on the Formatting toolbar, so that the distance of the text from the margin changes. If the distance between the text and the page margin grows, the text has been indented; if it shrinks, the text has been outdented.

| ميزان تو رفتگي قبول داريم که مجموعه حاضر عاری از کاستی ها و ايرادات نيست، اما می تواند قدم مثبتی در جهت ارتقا دانش فني علاقمندان تلقي شود. انشاءا… مفيد واقع گردد. |

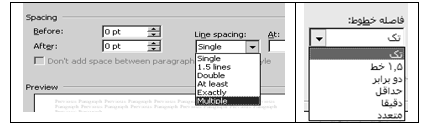

1-14 Changing the spacing between lines (Line Spacing)

اين فرمان ميزان فضاي بين سطرهاي يك پاراگراف را تنظيم مي كند. در قسمت پايين كادر Paragraph از منوي Format مي توان فاصله بين سطرها را تنظيم نمود. به عنوان نمونه، اگر Double را انتخاب كنيم فاصله بين سطرهاي انتخاب شده فعلي، دو برابر خواهد شد و اگر 1.5 Lines را برگزينيم، فاصله بين سطرها يك و نيم برابر خواهد شد.

در ادامه همين بحث، يك پاراگراف را با فاصله هاي متفاوتِ بين سطرها،با ذكر كليدهاي تركيبي مربوطه نشان داده ايم:

| الف)براي اينكه فاصله بين سطرها در حالت معمولي باشد، پس از انتخاب سطرها، كليدهايCtrl + 1 را روی صفحه كليد مي زنيم: |

| هرگز هيچ هدفي را رها مكنيد، مگر اينكه ابتدا قدم مثبتي در جهت تحقق آن برداشته باشيد. هم اكنون لحظه اي فكر كنيد و اولين قدمي را كه بايد در جهت رسيدن به هدف برداريد مشخص سازيد. براي اينكه پيشرفت كنيد، چه قدمي را بايد امروز برداريد؟ حتي يك قدم كوچك شما را به هدف نزديكتر مي كند. |

| ب) براي اينكه فاصله بين سطرها 1.5 برابر باشد، پس از انتخاب سطرها، كليدهايCtrl + 5 را روی صفحه كليد مي زنيم: |

| هرگز هيچ هدفي را رها مكنيد، مگر اينكه ابتدا قدم مثبتي در جهت تحقق آن برداشته باشيد. هم اكنون لحظه اي فكر كنيد و اولين قدمي را كه بايد در جهت رسيدن به هدف برداريد مشخص سازيد. براي اينكه پيشرفت كنيد، چه قدمي را بايد امروز برداريد؟ حتي يك قدم كوچك شما را به هدف نزديكتر مي كند. |

| ج) براي اينكه فاصله بين سطرها 2 برابر باشد، پس از انتخاب سطرها، كليدهايCtrl + 2 را روی صفحه كليد مي زنيم: |

| هرگز هيچ هدفي را رها مكنيد، مگر اينكه ابتدا قدم مثبتي در جهت تحقق آن برداشته باشيد. هم اكنون لحظه اي فكر كنيد و اولين قدمي را كه بايد در جهت رسيدن به هدف برداريد مشخص سازيد. براي اينكه پيشرفت كنيد، چه قدمي را بايد امروز برداريد؟ حتي يك قدم كوچك شما را به هدف نزديكتر مي كند. |

1-15 آشنايي با درج صفحه گسترده در سند

دكمه (Insert Microsoft Excel Worksheet) را در نوار ابزار انتخاب مي كنيم تا يك صفحه گسترده از برنامه اكسل در محل مكان نما ايجاد شود.

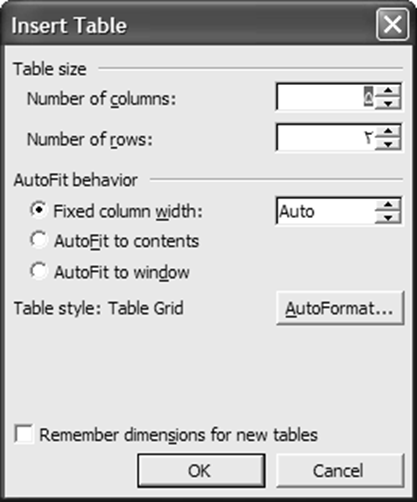

1-16 شناسايي اصول ايجاد جدول و عمليات بر روی آن

براي رسم سريع جدول از دكمه Insert Table استفاده مي گردد. هر گاه اين دكمه نگه داشته شود، در آن صورت كادر كوچكي ظاهر مي شود و ما در اين كادر، تعداد سطرها و ستونهاي جدول را مشخص مي سازيم.

رسم جدولي با سه سطر و دو ستون

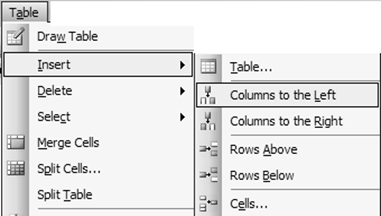

In addition, the Insert option on the Table menu makes it easy to create, change and manage a table. In the next figure, 2 rows and 5 columns have been specified.

| Number of columns | Number of rows | Automatic table formatting |

1-16-1 Showing the Tables and Borders toolbar

To draw a table and change it, we can click the Tables and Borders button and make the desired settings with the buttons of this toolbar.

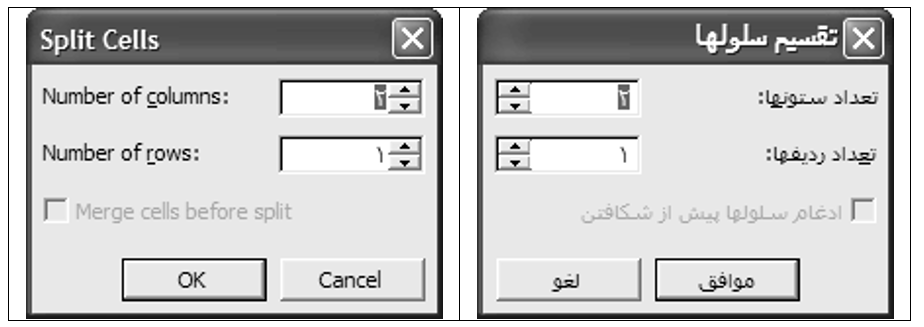

1-16-2 تقسيم يک خانه از جدول به چند خانه

با راست كليك در يك خانه از جدول و انتخاب گزينه Split… مي توان آن خانه را به چند خانه (در چند سطر و ستون) تجزيه و تقسيم نمود.كادري مشابه شكل بعد ظاهر شده و ما قادر خواهيم بود كه تنظيم نماييم خانه مذكور به چند سطر (Row) و چند ستون (Column) تجزيه شود.

پس از آن كه با استفاده از منوي Table يك جدول با تعداد سطر و ستون دلخواه (مثلاً 2 سطر و 5 ستون) ايجاد نموديم، آنگاه خانه اي از جدول را كه مي خواهيم به چند خانه تجزيه كنيم را انتخاب نموده و سپس روي اين خانه انتخاب شده، راست كليك نموده و گزينه Split را کليک می کنيم تا كادر شكل بعد نمايان شود. در اين كادر تنظيم مي كنيم كه خانه انتخاب شده به چند ستون و سطر تجزيه شود.

( در اين كادر تنظيم شده كه خانه انتخاب شده به 2 ستون و 1 سطر تجزيه گردد)

| اين خانه ابتدا انتخاب شده و سپس به 2 ستون و يک سطر تجزيه(Split) شده است. |

1-16-3 ادغام چند خانه از جدول در يک خانه

براي ادغام و يكي نمودن چند خانه در يك خانه، ابتدا آن خانه ها را انتخاب نموده و سپس روي يكي از خانه هاي انتخاب شده، راست كليك نموده و گزينه Merge… را بر مي گزينيم.

| جدولي با 4 ستون و 3 سطر انتخاب خانه هاي دلخواه ادغام خانه هاي انتخاب شده |

اين خانه ها ابتدا انتخاب شده و سپس ادغام(Merge) شده اند.

To merge cells or remove the border between them, we can choose the Eraser from the Tables and Borders toolbar and then click the line between two cells so that it is removed. Another way to split or merge cells is to choose Split Cells or Merge Cells from the Table menu.

1-16-4 تغيير اندازه جدولها

براي اينكه بتوانيم عرض و يا ارتفاع خانه هاي جدول خود را كم و زياد كنيم ، كافيست تا اشاره گر را بين دو خانه قرار داده و هنگامي كه اشاره گر به شكل يك فلش دو طرفه شود با كشيدن خط به يك سمت ديگر،تغييرات موردنظر را انجام دهيم.

1-16-5 تايپ عمودی در خانه های جدول

براي تايپ عمودي در جدول ابتدا خانه مورد نظر را انتخاب نموده سپس از منوي Format، گزينه Text Direction را انتخاب مي سازيم.

1-16-6 قالب بندی خودکار

If we choose Table AutoFormat (automatic formatting) from the Table menu, we can use Word's ready-made designs to create a table.

1-16-7 Adding a new column and row

To add a column, we choose Insert and then Columns… from the Table menu. To add a row, we choose Insert and then Rows… from the Table menu. To add a cell, we choose Insert and then Cells… from the Table menu.

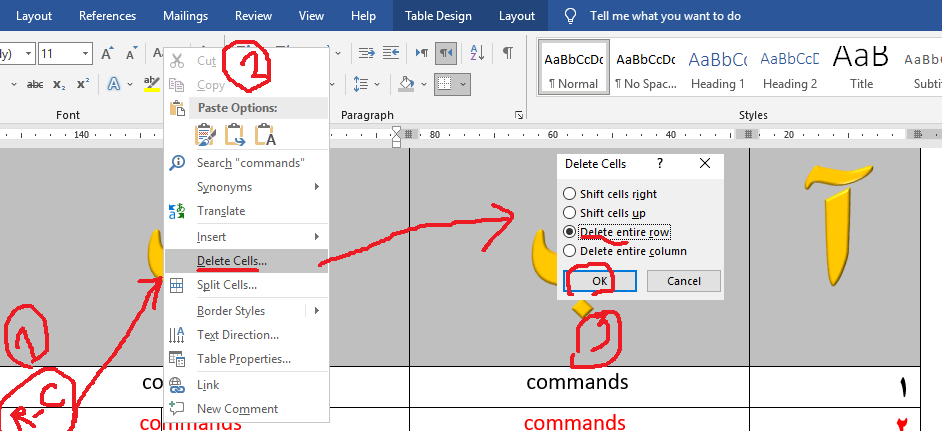

1-16-8 حذف ستون يا سطر

براي حذف يك ستون ، يك رديف يا يك خانه می توان از منوي Table گزينه Delete را بكار برد.

1-17 آشنايي با چگونگی کار دکمه های نوار ابزار ترسيمات (Drawing)

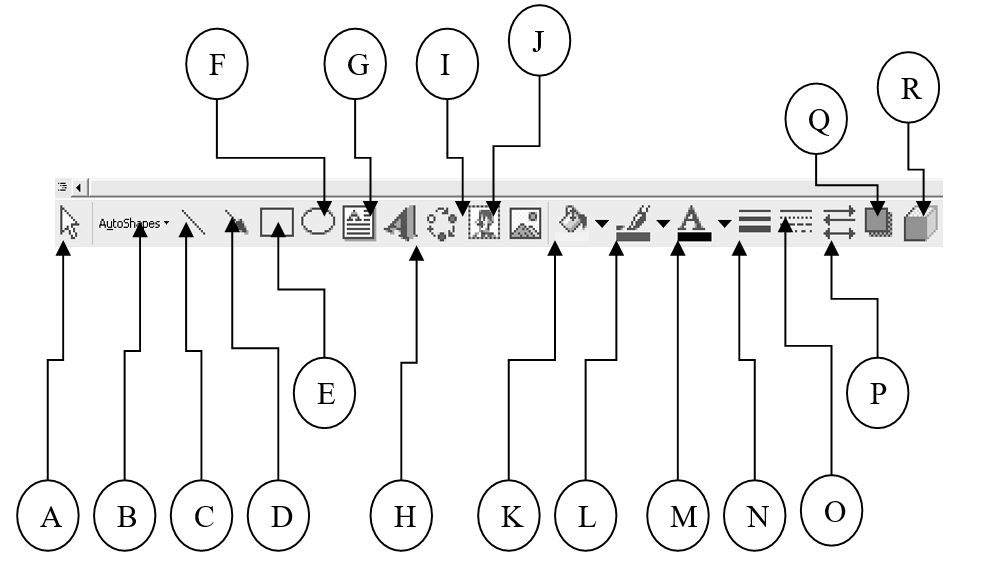

دكمه براي نمايان ساختن نوار ابزار ترسيمات كاربرد دارد. توضيحات مربوط به اين نوار در ادامه شرح داده شده است.

A– Draws ready-made geometric shapes (AutoShapes).

B– Draws a line (Line).

C– Draws an arrow (Arrow).

D– Draws a rectangle (Rectangle).

E– Draws an oval (Oval).

F– Draws a text box (Text Box).

G– Inserts decorative text with special effects (WordArt).

H– Inserts a diagram.

I– Inserts ready-made graphics.

J– Inserts a picture (Insert Picture).

K– Fills the inside of the selected shapes with color (Fill Color).

L– Colors the lines of the selected shapes (Line Color).

M– Sets the text color (Font Color).

N– Changes the line thickness of the selected shapes (Line Style).

O– Changes the line type of the selected shapes (Dash Style).

P– Changes the arrow style (Arrow Style).

Q– Adds a shadow to shapes (Shadow Style).

R– Makes shapes three-dimensional (3-D Style).

1-18 آشنايي با چگونگی استفاده از برنامه راهنما (Help)

Choosing the Word Help option from the Help menu, or clicking the Help button on the Standard toolbar, opens a box in which we type our question so that the program can show any explanation it has on the subject. We type a phrase and press Enter. If the phrase exists in the program's help content, a list of related topics appears, and clicking one of them shows the corresponding text. If needed, we can Copy that text and Paste it into the document.

As an example, we search for information about Table:

After typing this word in the search box, we press Enter or click the button next to the box, and a list of topics related to Table appears in the help content.

When we choose one of these topics, its explanation is displayed.

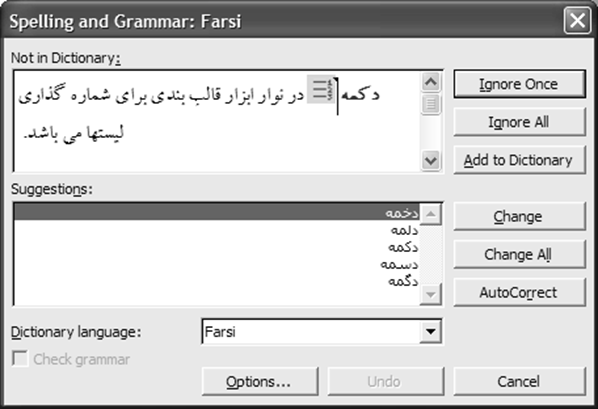

1-19 آشنايي با چگونگی استفاده از ابزار بررسی کننده املايي و دستوری

To check and correct spelling and grammar errors, we can click the Spelling and Grammar button on the Standard toolbar or choose Spelling And Grammar from the Tools menu. (Pressing F7 does the same.)

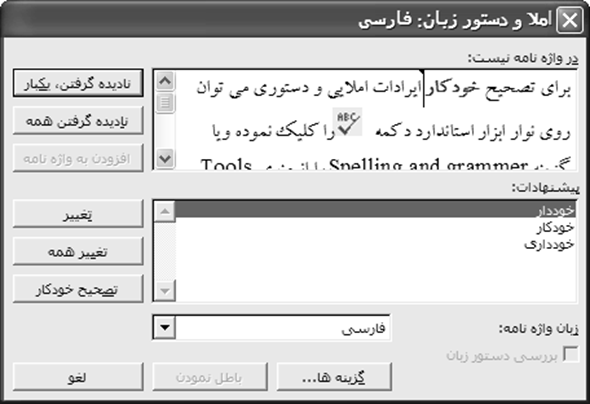

مي توان در اين برنامه از طريق دكمه Options… در پايين، كادر تنظيمات مربوط به Spelling and Grammar را ظاهر ساخته و گزينه ها ي دلخواه را غير فعال ساخته و در صورت نياز مجدداً آنها را فعال نمود.

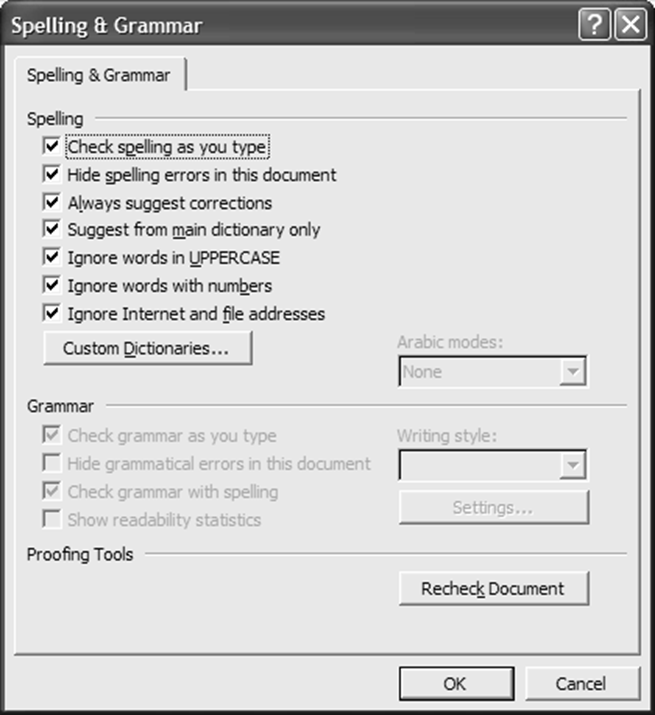

در شكل بعد گزينه هاي Spelling and Grammar را با توضيح فارسي ملاحظه نماييد.

The program has a built-in dictionary. While we type, it checks the spelling of the text against this dictionary; it draws a red line under a word with a spelling error and a green line where there is a grammar error. A button in this dialog box lets us choose a different dictionary or skip this check.
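The idea of checking each typed word against a dictionary can be sketched in a few lines of Python. This is only a simplified illustration: the word list is a hypothetical sample, only English letters are handled, and Word's real checker (including its grammar rules) is much more elaborate.

```python
import re


def misspelled(text, dictionary):
    """Return the words of text that are not found in the dictionary (case-insensitive)."""
    words = re.findall(r"[A-Za-z']+", text)
    return [word for word in words if word.lower() not in dictionary]


# Hypothetical mini dictionary used only for this example.
word_list = {"this", "is", "a", "sample", "text"}

print(misspelled("This is a smple text", word_list))
```

The words returned by the function are the ones a spelling checker would underline in red.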

1-20 آشنايي با استفاده از فهرستهای اعداد و علامتها

The Numbering button on the Formatting toolbar numbers lists. In addition, the Bullets And Numbering… option on the Format menu opens a dialog box for changing the numbering type and for further settings. On the Numbered tab we choose one of the styles and confirm the dialog box.

As a sample, consider the following numbering styles:

| Pouria Sina Pedram | Pouria Sina Pedram |

| Pouria Sina Pedram | Pouria Sina Pedram |

The Bullets button on the Formatting toolbar adds bullet marks to lists. In addition, the Bullets And Numbering… option on the Format menu opens a dialog box for changing the bullet type and for further settings. On the Bulleted tab we choose one of the styles and confirm the dialog box.

As an example, consider the following bullet styles:

| Pouria Sina Pedram | Pouria Sina Pedram |
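The difference between numbered and bulleted lists can be illustrated by generating the labels in code. The Python sketch below uses three assumed styles (decimal, letter and bullet); these are examples chosen for illustration and not the exact set offered in the Bullets And Numbering dialog box.

```python
import string


def make_list(items, style):
    """Prefix each item with a number, a letter or a bullet mark."""
    if style == "decimal":
        labels = [str(i) + "." for i in range(1, len(items) + 1)]
    elif style == "letter":
        labels = [string.ascii_lowercase[i] + ")" for i in range(len(items))]
    elif style == "bullet":
        labels = ["•"] * len(items)
    else:
        raise ValueError("unknown style: " + style)
    return [label + " " + item for label, item in zip(labels, items)]


names = ["Pouria", "Sina", "Pedram"]
for style in ("decimal", "letter", "bullet"):
    print("\n".join(make_list(names, style)))
    print()
```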

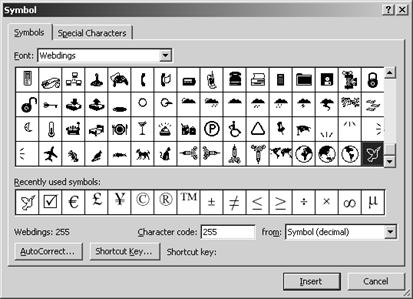

نكته) براي اينكه علامتهاي جالب و سفارشي بسازيم، بايد مراحل زير را انجام دهيم:

در برگه Bulleted از كادر Bullets And Numbering… يك علامت را انتخاب مي كنيم تا طي مراحلي، علامت ديگري را جايگزين آن سازيم.

- دكمه را در پايين و راست اين برگه انتخاب مي كنيم.

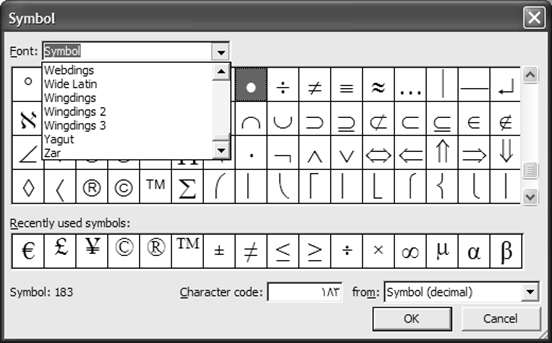

دكمه Character را براي انتخاب نوع علامت كليك مي كنيم. از ليست باز شونده بالاي كادر بعد، نوعي قلم(مثلاً Webdings ) را انتخاب مي نماييم:

دکمه Font امکان تنظيم قالب بندی قلم(نوع، اندازه، رنگ و …) را در اختيار قرار می دهد.

5) در ميان علائم نمايان شده، شكل و علامت دلخواه را انتخاب نموده و كادرها را بترتيب تاييد مي كنيم.

| مثال) غزال تارا شادي نگار ساينا |

1-21 آشنايي با چگونگی ايجاد صفحات جديد در سند فعلی

هر گاه مطالب تايپ شده از اندازه صفحه تجاوز نمايد، اين برنامه بطور هوشمند صفحه جديدی ايجاد می کند. اما بطور کلی براي ايجاد صفحه جديد در سند فعلی، ابتدا مكان نما را به جايي از صفحه كه مي خواهيم، صفحه جديدي از آن نقطه به بعد ايجاد شود منتقل نموده و از منوي Insert گزينه Break را انتخاب و Ok را كليك مي نماييم و يا اينكه از كليدهاي تركيبيِ Ctrl و Enter استفاده مي كنيم.

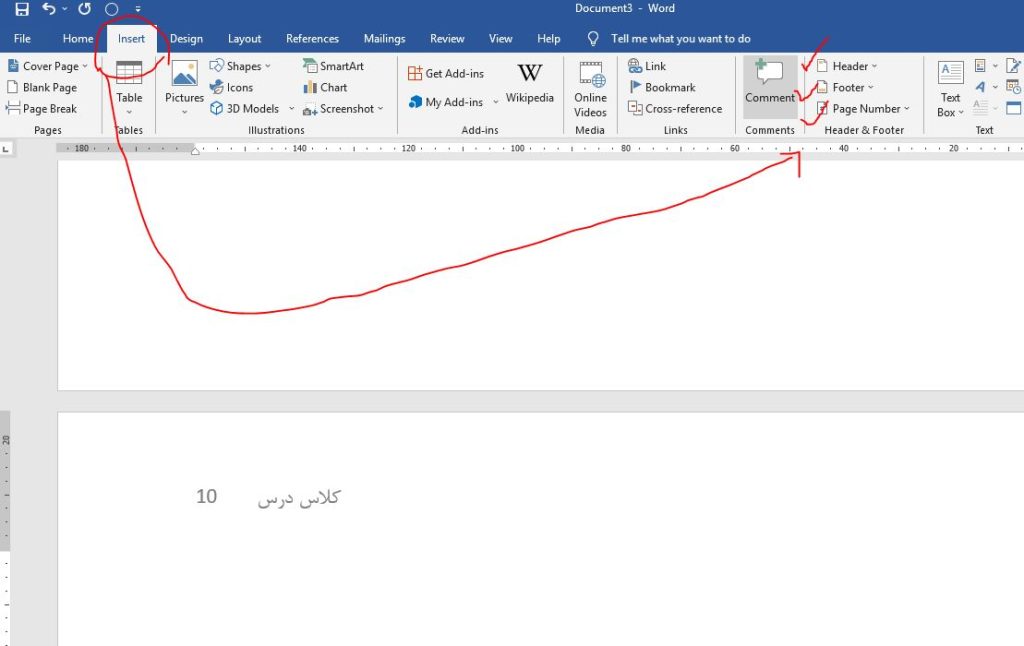

1-22 Adding headers and footers (Header And Footer)

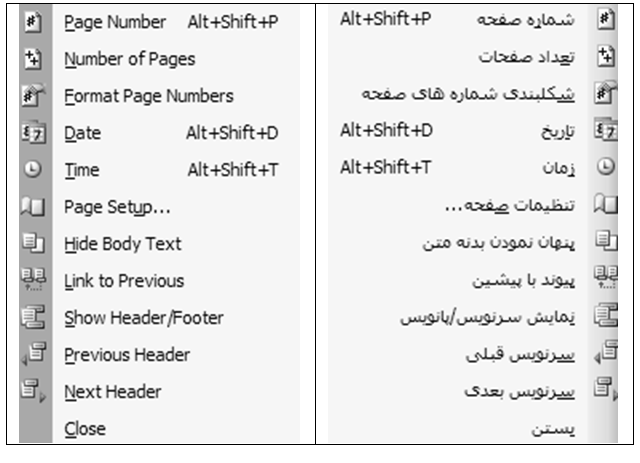

Choosing Header And Footer from the View menu displays a dotted box at the top of the page together with a dedicated toolbar. Type the text you want as the header inside the dotted box.

The buttons on this toolbar are used to set up the header and footer.

A – Inserts a ready-made text entry.

B – Inserts the page number.

C – Inserts the total number of pages in the current document.

D – Changes the page number format.

E – Inserts the current system date.

F – Inserts the current system time.

G – Opens the Page Setup dialog.

H – Hides the document text while the header and footer are being edited.

I – Applies the same settings as the previous page to the current page.

J – Moves the cursor between the header and the footer so that each can be edited.

K – Moves between odd and even pages (the previous and next pages).

L – Closes this toolbar and returns to the normal working view.

1-23 Numbering the pages of a document

Choose Page Numbers from the Insert menu. In the dialog that opens, use the upper drop-down list to place the page numbers at the top or bottom of the page, and the lower drop-down list to align them to the left or right.

| Sets whether the first page also shows a page number. |

Clicking the Format… button in this dialog opens another dialog in which you can change the number style and set the number from which this document's page numbering starts.

| Sets the current document's page numbering to start from a specific number. |
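The three settings above (number style, starting number, and whether the first page shows a number) can be sketched as follows. This is only an illustration under assumed names: `page_labels`, `to_roman`, and the `"arabic"`/`"roman"` style keys are hypothetical and do not correspond to anything in Word.

```python
def to_roman(n):
    """Convert a positive integer to an uppercase Roman numeral."""
    pairs = [
        (1000, "M"), (900, "CM"), (500, "D"), (400, "CD"),
        (100, "C"), (90, "XC"), (50, "L"), (40, "XL"),
        (10, "X"), (9, "IX"), (5, "V"), (4, "IV"), (1, "I"),
    ]
    out = []
    for value, symbol in pairs:
        while n >= value:
            out.append(symbol)
            n -= value
    return "".join(out)


def page_labels(page_count, start_at=1, number_format="arabic",
                show_on_first_page=True):
    """Return the label printed on each page, in page order.

    An empty string means the page shows no number.
    """
    labels = []
    for index in range(page_count):
        number = start_at + index
        if index == 0 and not show_on_first_page:
            labels.append("")  # first page keeps its number but hides it
        elif number_format == "roman":
            labels.append(to_roman(number))
        else:
            labels.append(str(number))
    return labels


print(page_labels(4, start_at=5))
# ['5', '6', '7', '8']
print(page_labels(3, start_at=3, number_format="roman",
                  show_on_first_page=False))
# ['', 'IV', 'V']
```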

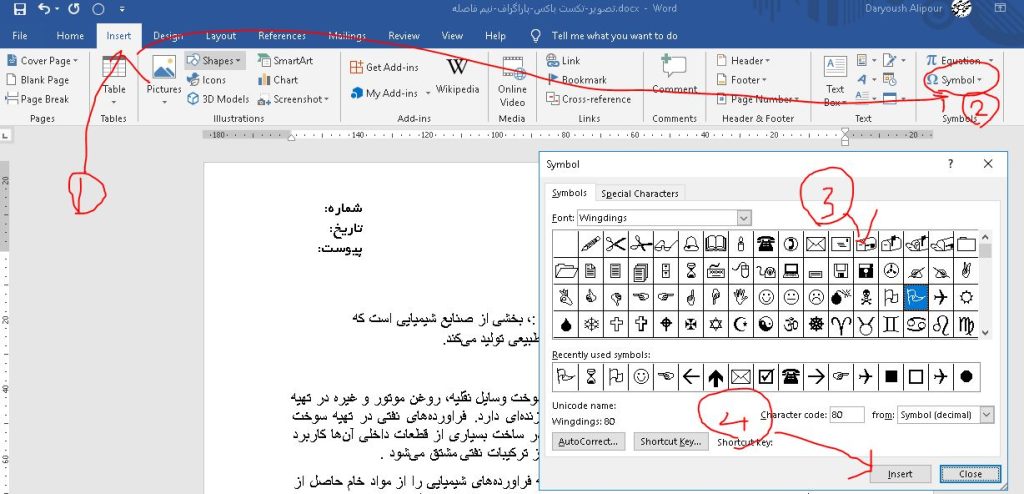

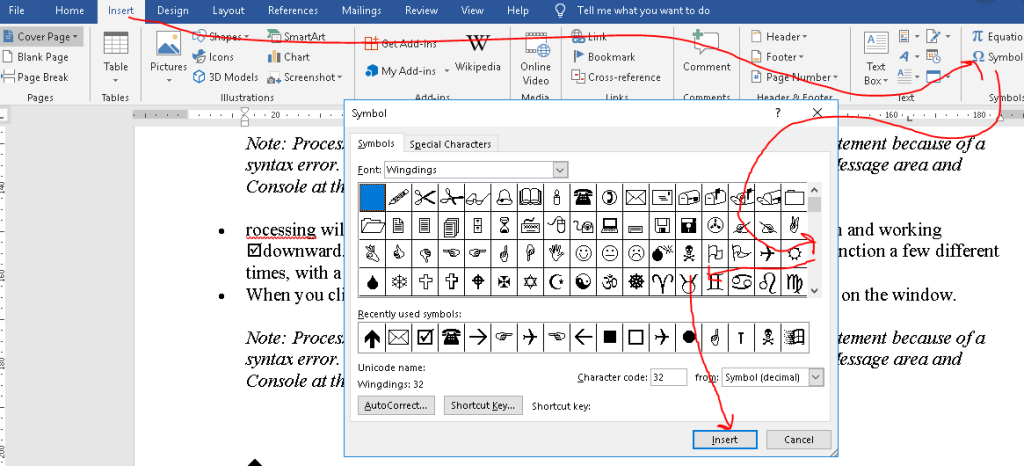

1-24 Inserting special characters into a document

Choose Symbol from the Insert menu. From the drop-down list at the top of the dialog, choose a font, click one of the characters in the character table, and then click the Insert button at the bottom of the dialog. The selected character is inserted at the current cursor position.

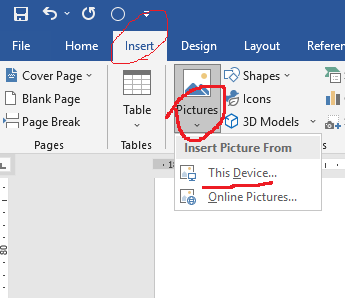

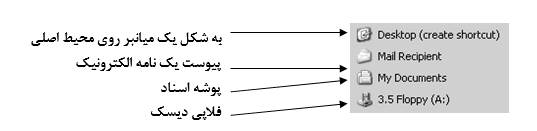

1-25 Inserting pictures, charts, and other objects

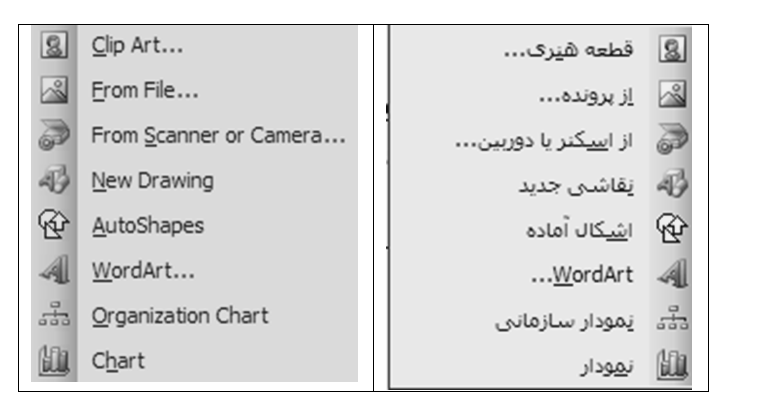

To insert a picture, choose Picture from the Insert menu and then select one of the options shown in the next figure.

- To insert one of the ready-made pictures supplied with the program, click Clip Art and select the picture you want in the next dialog.

- To insert a picture from a hard disk, floppy disk, or CD:

Insert menu → Picture → From File…

- To insert a chart into the document:

Insert menu → Picture → Chart

- To insert an organization chart into the document:

Insert menu → Picture → Organization Chart

- To insert other objects, for example a Media Clip:

Insert menu → Object… → the desired object type

To resize an inserted picture or object, click it first and then drag the handles at its corners until it reaches the size you want.

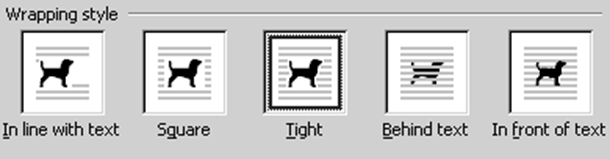

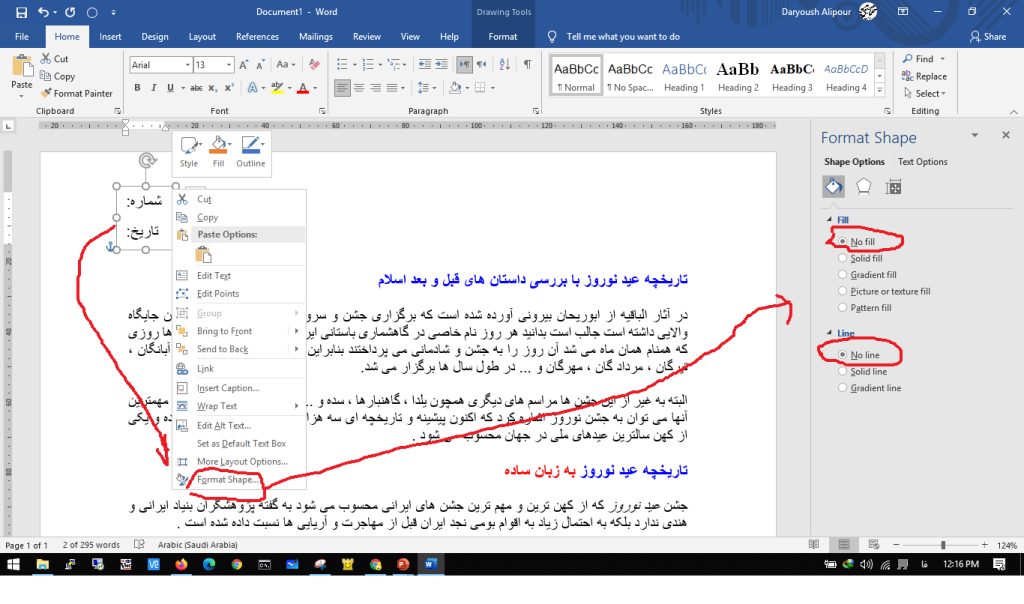

1-26 Setting how text and pictures are arranged

Right-clicking the inserted picture and choosing Format… opens a dialog in which you can set how the document text is arranged around the picture (on the Layout tab).

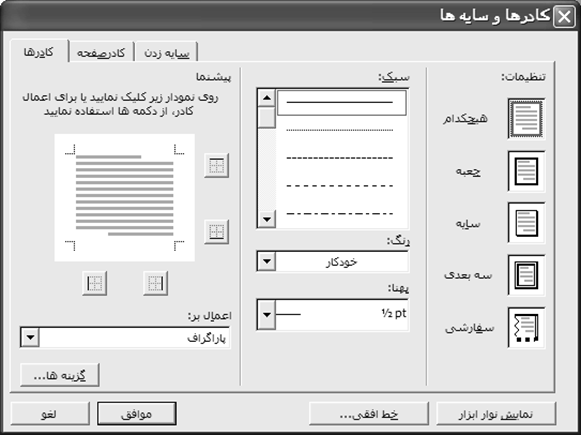

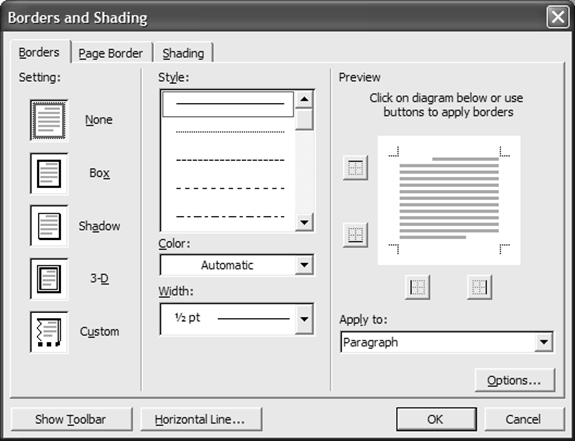

1-27 Adding borders to a document and setting borders and shading

Choosing Borders And Shading from the Format menu opens the Borders And Shading dialog. This dialog has three tabs:

– Borders tab: sets the style, color, and width of table lines, and also sets a border around text.

– Page Border tab: sets a border for the whole page.

– Shading tab: changes the background color and applies shading to the selected cells.

1-28 Using different sets of tab stops (Tab)

To set the positions that the Tab key jumps to, click the small button at the right or left corner of the ruler to choose the tab type, and then click a position on the ruler to place the tab stop.

The available tab types are:

- Left-aligned (Left Tab)

- Centered (Center Tab)

- Right-aligned (Right Tab)

- Aligns the entries in that column on the decimal point (Decimal Tab)

- Separator line (Bar Tab)

Alternatively, choose Tabs… from the Format menu.

| Sets the position of the tab stop. |

| Sets how the text typed after pressing the Tab key is positioned and aligned. |

| Sets the type of tab leader (the gap before the tab stop is filled, for example with dots). |

| Sets the default tab stop distance. |

| Saves the tab stop. |

As an example, in the next figure several different tab stops were created first, then the text was typed, using the Tab key on the keyboard to jump between them.

| A | B | C | D | E |

A – Left-aligned B – Right-aligned C – Centered

D – Separator line (bar) E – Decimal
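The difference between the left, right, center, and decimal tab types can be sketched in a few lines. The sketch assumes a fixed-width layout where positions are measured in character columns from the left; the function name `place_at_tab` is hypothetical, and Word itself measures tab stops on the ruler rather than in characters.

```python
def place_at_tab(text, stop, kind="left"):
    """Return a line in which `text` is positioned relative to column `stop`.

    left    : text starts at the stop
    right   : text ends at the stop
    center  : text is centered on the stop
    decimal : the decimal point sits on the stop
    """
    if kind == "left":
        start = stop
    elif kind == "right":
        start = stop - len(text)
    elif kind == "center":
        start = stop - len(text) // 2
    elif kind == "decimal":
        point = text.find(".")
        if point == -1:          # no decimal point: behave like a right tab
            point = len(text)
        start = stop - point
    else:
        raise ValueError(f"unknown tab type: {kind}")
    return " " * max(start, 0) + text


# Two numbers on a decimal tab at column 8: the points line up.
for value in ("12.5", "3.25"):
    print(place_at_tab(value, 8, "decimal"))
```

In both printed lines the decimal point lands in column 8, which is what makes a decimal tab useful for columns of prices or measurements.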

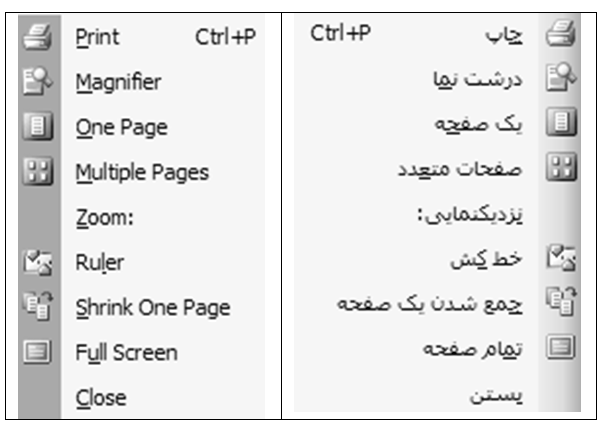

1-29 Print Preview

Click the Print Preview button on the Standard toolbar, or choose Print Preview from the File menu. In print preview mode the following toolbar appears:

A – Prints the document (Print).

B – Magnifier tool (when it is not active, the document can be edited in this view).

C – Shows a single page.

D – Shows several pages at once.

E – Sets the zoom percentage.

F – Shows or hides the ruler.

G – Shrinks the document to fit (by removing surplus blank space and resizing some of the content).

H – Shows the document in full-screen mode.

I – Closes this view and returns to the normal view (Esc key).

J – Shows information about the formatting of the text you click.

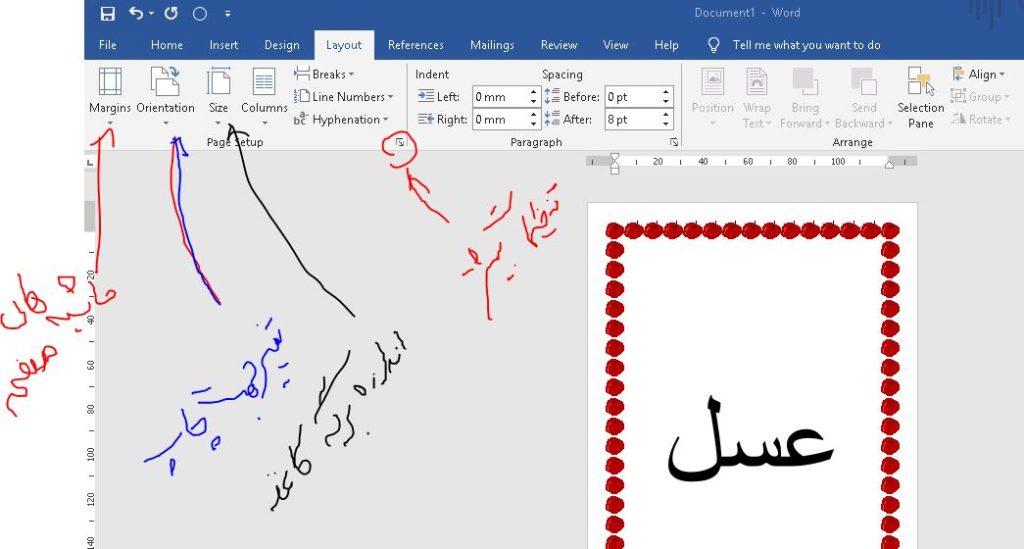

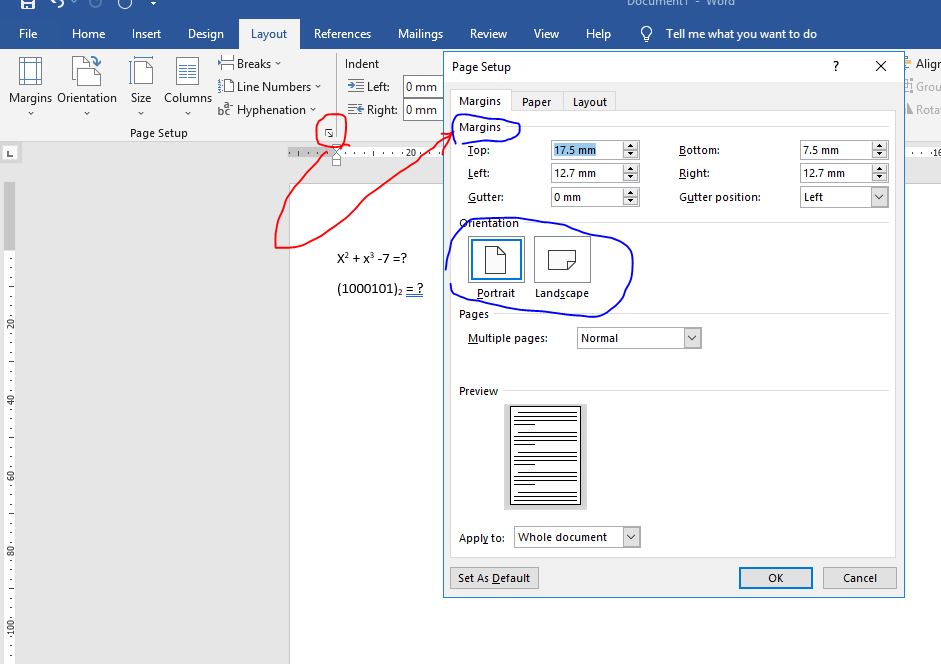

1-30 Page setup and document margins

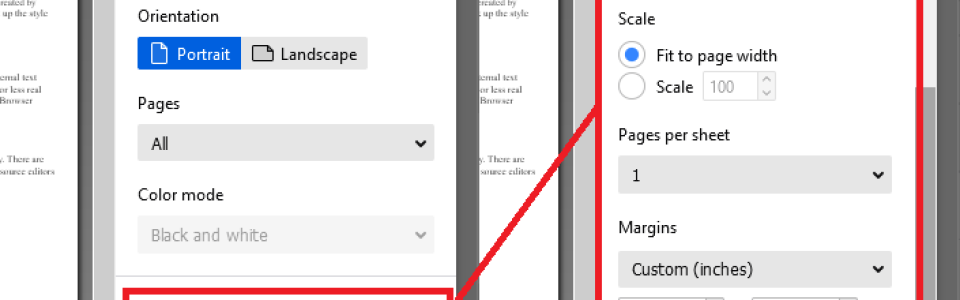

The Page Setup option on the File menu provides important settings for printing. The dialog that opens has three tabs, for margins, paper, and layout, each of which is described below:

1-30-1 Margins tab

When you start typing, the text does not begin at the very edge of the paper. A certain amount of space is left automatically at the top, right, left, and bottom. This means that nothing is typed in the outer areas of the paper (the margins).

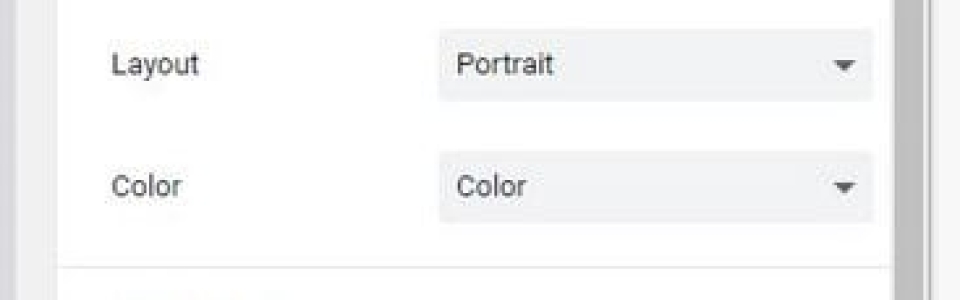

On this tab you can set the margins on each side of the page and choose the paper orientation, that is, whether the content is printed along the length of the paper or across its width.

| Sets the paper orientation (content printed along the length of the paper or across its width). Sets the margins on each side of the page (for example, how far the document text is from the top edge of the paper). |

1-30-2 Paper tab

On this tab you can set the paper type and its dimensions (for example, A4 paper).

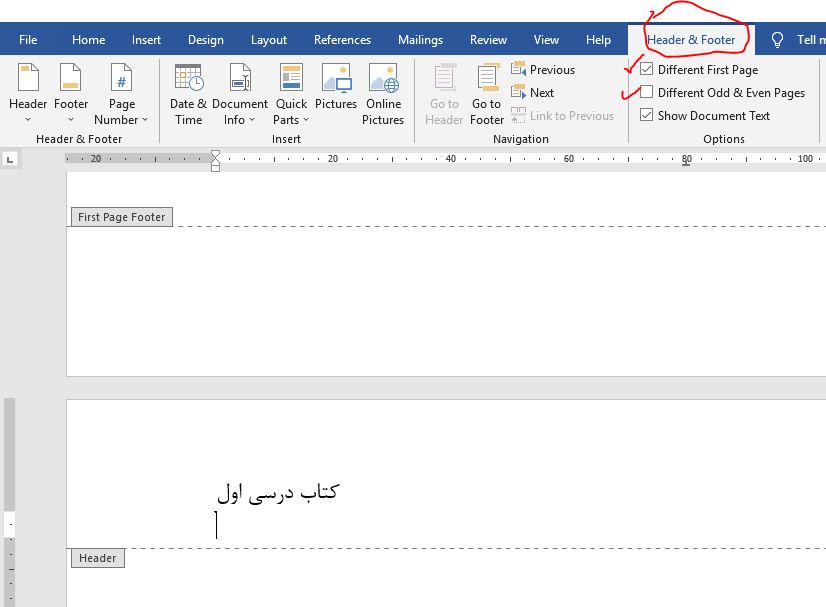

1-30-3 Layout tab

On this tab you can specify that the first page of a multi-page document has no header, footer, or page number, and that the headers and footers of even and odd pages differ from each other.

One option on the Layout tab makes the header and footer differ between even and odd pages. If this option is not checked, the headers and footers of even and odd pages are identical: for example, if you type the word “Anzan” as the header or footer on page 1, that word is repeated exactly on every even and odd page. If the option is checked, even and odd pages have separate headers and footers, so you can set one header or footer for even pages and a different one for odd pages.

On the same tab, if the first-page option is checked, the first page of the document has no header or footer. In other words, the header and footer of the first page can be set to differ from those of the remaining pages.
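The effect of the two Layout options can be summarized with a small sketch that decides which header a given page receives. The function name `header_for_page` and its parameters are hypothetical names chosen for illustration; they are not Word's own option names.

```python
def header_for_page(page, default, even=None, first=None,
                    different_odd_even=False, different_first_page=False):
    """Return the header text for `page` (pages are numbered from 1).

    - With `different_first_page`, page 1 uses `first` (empty if not given).
    - With `different_odd_even`, even pages use `even` (empty if not given).
    - Otherwise every page uses `default`.
    """
    if different_first_page and page == 1:
        return first or ""
    if different_odd_even and page % 2 == 0:
        return even if even is not None else ""
    return default


# Neither option checked: the same header everywhere.
print([header_for_page(p, "Anzan") for p in (1, 2, 3)])
# ['Anzan', 'Anzan', 'Anzan']

# Both options checked: blank first page, separate even/odd headers.
print([header_for_page(p, "Odd side", even="Even side",
                       different_odd_even=True, different_first_page=True)
       for p in (1, 2, 3, 4)])
# ['', 'Even side', 'Odd side', 'Even side']
```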

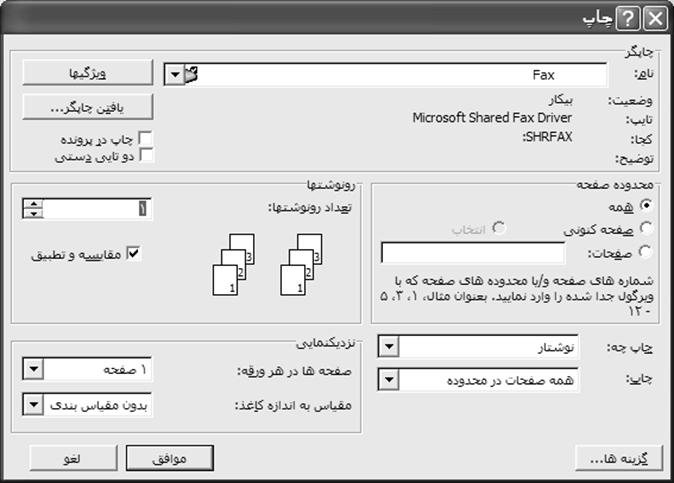

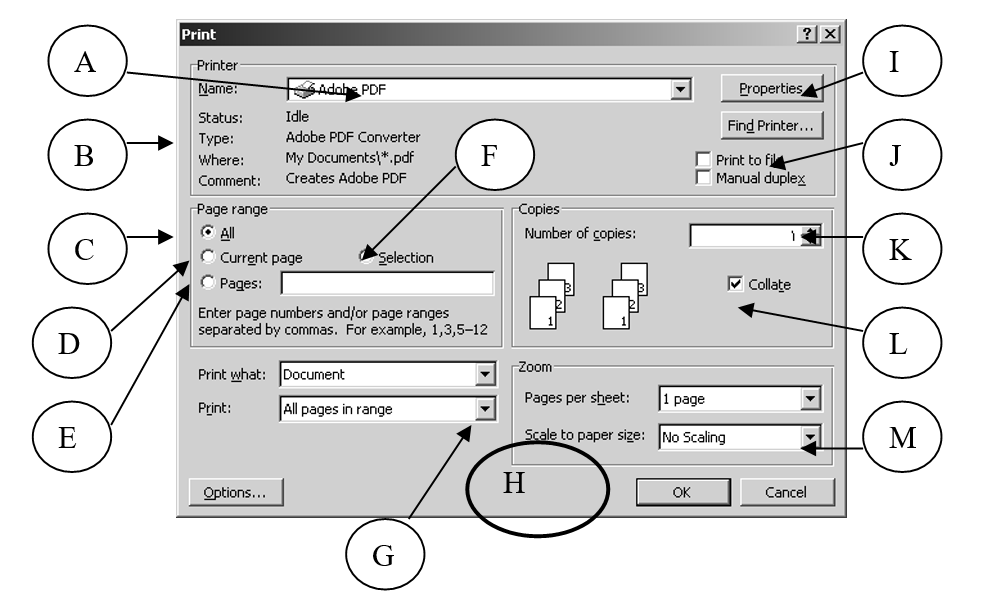

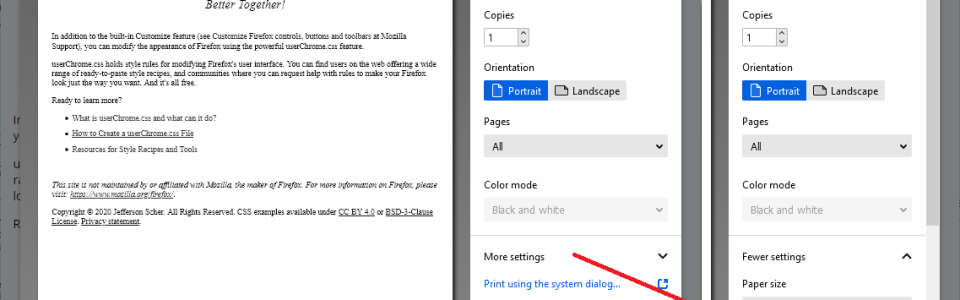

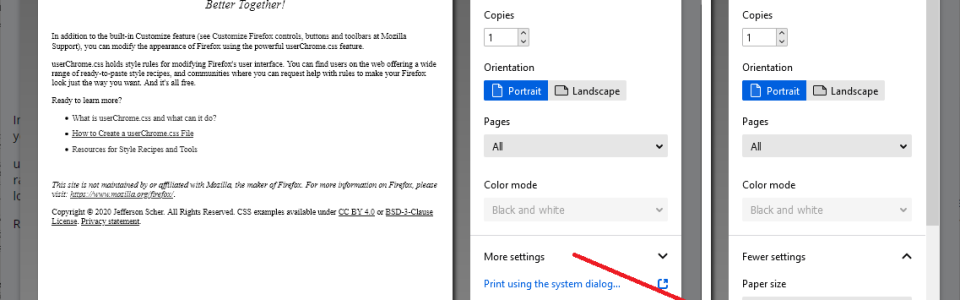

1-30-4 Printing a document (Print)

Click the Print button on the Standard toolbar, or choose Print… from the File menu. (Pressing Ctrl + P does the same thing.)

If the printer is ready and there is no problem, all pages of the current document are printed in full.

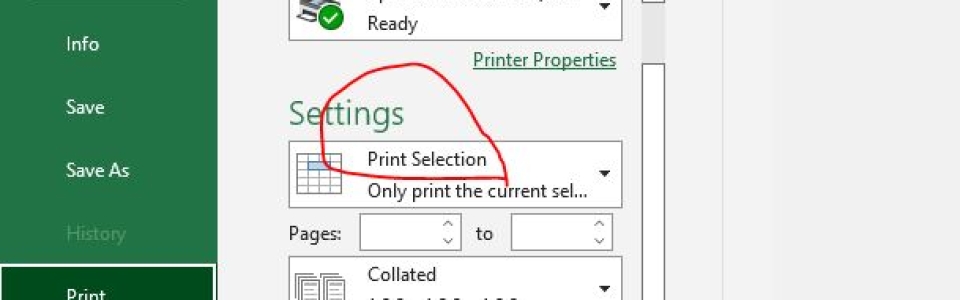

1-30-4-1 The main print options

Choosing Print… from the File menu opens a dialog that offers many options for controlling how the document is printed.

A – Selects the printer. For example, if both a black-and-white laser printer and a color printer are installed on the system, you can choose the one you want from this drop-down list.

B – Shows information about the printer.

C – Prints all pages of the document.

D – Prints only the current page (the one containing the cursor).

E – Prints specific pages, for example: 1,3,5-12 (that is, the first page, the third page, and pages five through twelve).

F – Prints only the text that is currently selected.

G – Sets whether all pages, only odd pages, or only even pages are printed.

H – Starts printing the document.

I – Sets the printer's properties when needed.

J – Prints the document to a special file and saves it on disk.

K – Sets the number of copies to print.

L – When printing several sets of a multi-page document, you can choose between:

a) Printing all pages of one set completely, then the next set, and so on

(when the Collate option is checked)

b) Printing the first page the required number of times, then the second page, and so on

(when the Collate option is not checked)

M – Prints the document on a page of a different scale (photocopiers offer a similar feature).
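The page specification in item E, such as 1,3,5-12, is a comma-separated list of single pages and ranges. A minimal sketch of how such a string expands into page numbers is shown below; the function name `parse_page_range` is hypothetical, and the sketch does not attempt Word's more advanced forms (such as section references).

```python
def parse_page_range(spec):
    """Expand a page specification like '1,3,5-12' into a list of pages."""
    pages = []
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue  # tolerate stray commas
        if "-" in part:
            first, last = part.split("-", 1)
            pages.extend(range(int(first), int(last) + 1))
        else:
            pages.append(int(part))
    return pages


print(parse_page_range("1,3,5-12"))
# [1, 3, 5, 6, 7, 8, 9, 10, 11, 12]
```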

To summarize, the Print dialog lets you set the following: the name of the printer that should print the document (when several printers are available); the number of copies (Number Of Copies); and the pages to print, where All prints every page of the document from first to last and Current Page prints only the page that currently contains the cursor; and so on.
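The Collate option described in item L changes only the order in which sheets leave the printer, not their number. The sketch below lists that order for both settings; `print_order` is a hypothetical helper written for illustration.

```python
def print_order(page_count, copies, collate=True):
    """Return the sequence of page numbers as they come out of the printer."""
    pages = range(1, page_count + 1)
    if collate:
        # One complete set after another: 1,2,3, 1,2,3, ...
        return [page for _ in range(copies) for page in pages]
    # Every copy of page 1, then every copy of page 2, ...
    return [page for page in pages for _ in range(copies)]


print(print_order(3, 2, collate=True))   # [1, 2, 3, 1, 2, 3]
print(print_order(3, 2, collate=False))  # [1, 1, 2, 2, 3, 3]
```

With Collate checked, each set is ready to hand out as it is; unchecked, the sheets have to be sorted by hand afterwards.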

1-31 Choosing a suitable template (Template)

Word includes predefined templates for many office and business letters, fax cover sheets, memos, and so on. You can create your own templates or modify the existing ones as you wish. To use an existing template, choose New from the File menu, and in the task pane that appears on the right, choose On My Computer to open the templates dialog. On the tab you want, select one of the available items, select Document in the lower area, and click OK. If you select Template instead of Document, a new template is created.

Another way to create a new template is to type and edit the document first, then choose Save As from the File menu and select Document Template from the Save As Type drop-down list at the bottom.

1-32 Applying a style (Style)

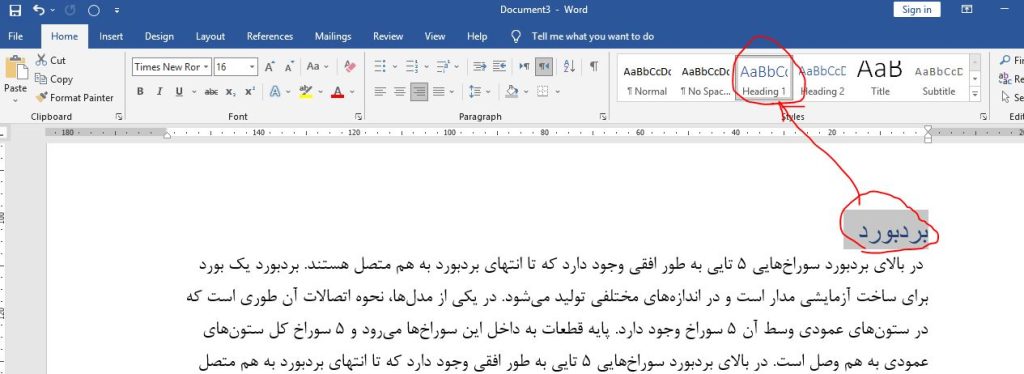

A style is a set of formatting settings that is applied to text all at once. To use one of the existing styles, click the down arrow of the Style box at the start of the Formatting toolbar to see the list of styles.

Click the name of the style you want in this list to apply it to the selected text. To create a new style, choose Styles And Formatting from the Format menu and click the button that opens the New Style dialog. Enter the name of the new style in the Name box, then click the Format button at the bottom, choose the formatting you want from the options shown (for example, Font), and click OK.

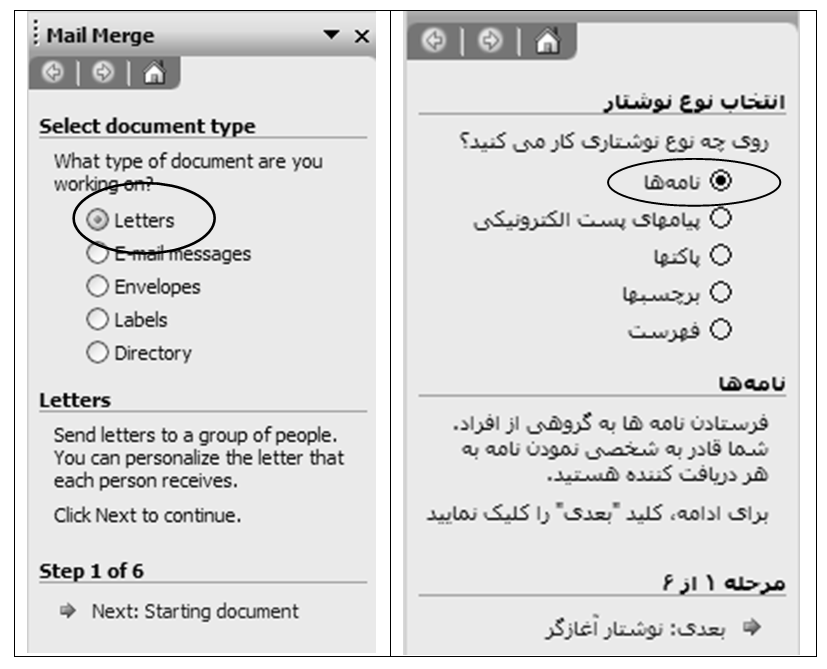

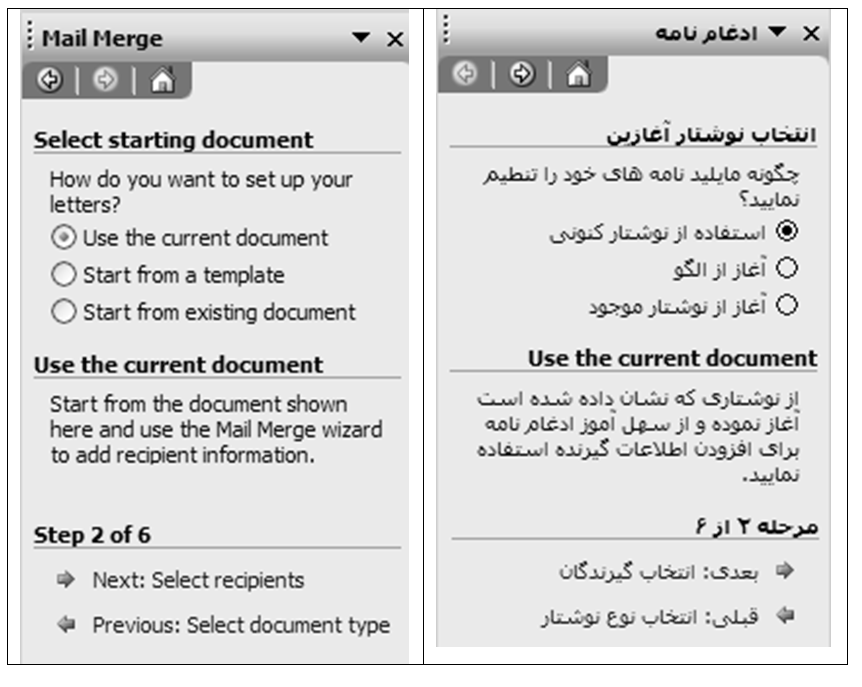

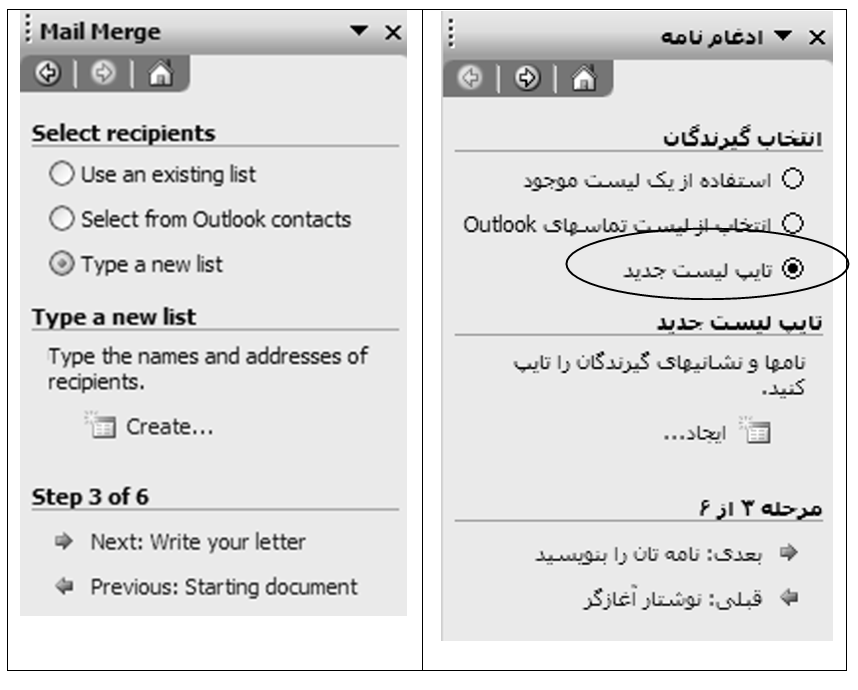

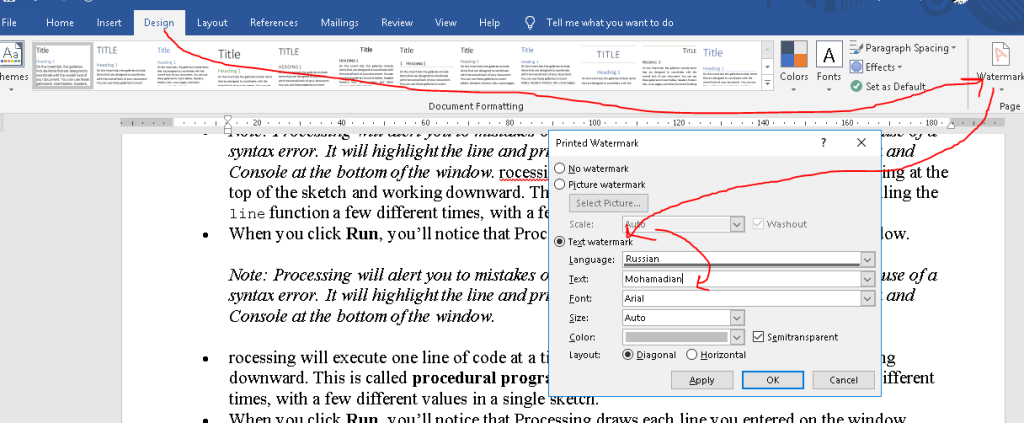

1-33 The basics of mail merge (Mail Merge)

Sometimes the same letter, with a few details specific to each recipient, has to be sent to a large number of people. In that case the MS Word mail merge feature is used. It is a great help in producing letters, labels, and catalogs in quantity.

A mail merge consists of three parts: the form letter (the text shared by all the letters), the data source (a file containing the variable information), and the merge fields (the settings that determine which piece of variable information goes where in the letter).
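The relationship between these three parts can be sketched with Python's standard `string.Template`. Everything in the example is an assumption made for illustration: the letter text, the field names (`first_name`, `last_name`, `sender`), and the recipient records are hypothetical, and Word stores and merges its data differently.

```python
from string import Template

# Form letter: the shared text. The $names play the role of merge fields.
form_letter = Template(
    "Dear $first_name $last_name,\n"
    "Happy New Year from $sender!"
)

# Data source: one record per recipient (hypothetical sample data).
data_source = [
    {"first_name": "Sara", "last_name": "Ahmadi"},
    {"first_name": "Ali", "last_name": "Karimi"},
]


def merge(letter, records, **shared_fields):
    """Produce one finished letter per record in the data source."""
    return [letter.substitute(record, **shared_fields) for record in records]


letters = merge(form_letter, data_source, sender="Reza")
print(letters[0])
# Dear Sara Ahmadi,
# Happy New Year from Reza!
```

`substitute` raises `KeyError` if a record lacks one of the fields, which mirrors the need for every merge field to exist in the data source.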

Here we use the step-by-step MS Word mail merge wizard to prepare a greeting letter to send to friends. Proceed as follows:

1. From the Tools menu choose Letters And Mailings and then Mail Merge. The task pane for this command appears:

Select the item you want from this list, then click the option provided to continue with the merge.

2. In the next pane, select the first option:

Click the option provided to continue.

3. If the list of recipients has not been prepared in advance, select the third option in the next list.

4. Choose Create in the same list to open the New Address List dialog. Then type the details of the recipients.

To create a new record and enter the details of another recipient, use the New Entry button. To make structural changes to the database, click the Customize button and adjust the database as you wish. A dialog appears that lets you change the database fields (add, delete, rename, …).

5. Close the New Address List dialog. The Save dialog appears. Enter a name for the file and save it.

6. The next dialog appears and offers further settings.

Make the changes you want, confirm the dialog, and then click Next at the bottom of the task pane.

7. The next pane lets you choose the type of letter content. Select one of the options (for example, Greeting Line) and click Next.

8. The next pane shows a preview of one of the letters. Make any changes that are needed and click Next.

9. The pane for the final merge step is displayed.

Finally, to complete the merge and see the result, choose one of the following options:

1-34 The concept of a Bookmark

A location in the document can be given a name (marked) so that you can return to it later and move the cursor there quickly. These names and marks are called Bookmarks. To create a Bookmark, move the cursor to the desired location in the document, choose Bookmark from the Insert menu, type a name in the Bookmark Name box (the name must begin with a letter), and click the Add button. To use a Bookmark and move the cursor to the marked location, see the explanation of Go To in section 8-1.
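A bookmark is, in effect, a name that maps to a position in the document. The sketch below models that idea with a small class. The class name `Bookmarks`, the use of a character offset as the position, and the method names are all hypothetical; only the rule that a name must begin with a letter comes from the text above.

```python
class Bookmarks:
    """A minimal name-to-position registry, as an illustration only."""

    def __init__(self):
        self._positions = {}

    def add(self, name, position):
        """Store `position` under `name`; the name must begin with a letter."""
        if not name or not name[0].isalpha():
            raise ValueError("a bookmark name must begin with a letter")
        self._positions[name] = position

    def go_to(self, name):
        """Return the position stored under `name` (KeyError if unknown)."""
        return self._positions[name]


marks = Bookmarks()
marks.add("chapter2", 1520)      # cursor was at character offset 1520
print(marks.go_to("chapter2"))   # 1520
```

Trying `marks.add("2chapter", 10)` raises `ValueError`, because the name starts with a digit.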

1-35 Envelopes and labels (Envelopes And Labels)

From the Tools menu, under the Letters And Mailings submenu, you can open Envelopes And Labels and, on the relevant tabs, enter the text you want for envelopes and labels in the area provided.

1-36 Counting words (Word Count…)

You can ask Word for a report on the number of pages, words, characters, and so on. To do so, choose Word Count… from the Tools menu.
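The kind of report this command produces can be approximated for plain text with a short sketch. The function name `word_count` and the result keys are hypothetical, and the counting rules here (words split on whitespace, one paragraph per non-empty line) are simplifying assumptions that may differ from Word's own rules. Page counts are omitted because they depend on layout.

```python
def word_count(text):
    """Return simple statistics for a plain-text string."""
    return {
        "words": len(text.split()),
        "characters_no_spaces": sum(1 for ch in text if not ch.isspace()),
        # Line breaks are not counted as characters here.
        "characters_with_spaces": sum(1 for ch in text if ch not in "\r\n"),
        "paragraphs": sum(1 for line in text.splitlines() if line.strip()),
    }


report = word_count("Hello world.\nThis is Word.")
print(report)
# {'words': 5, 'characters_no_spaces': 22,
#  'characters_with_spaces': 25, 'paragraphs': 2}
```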

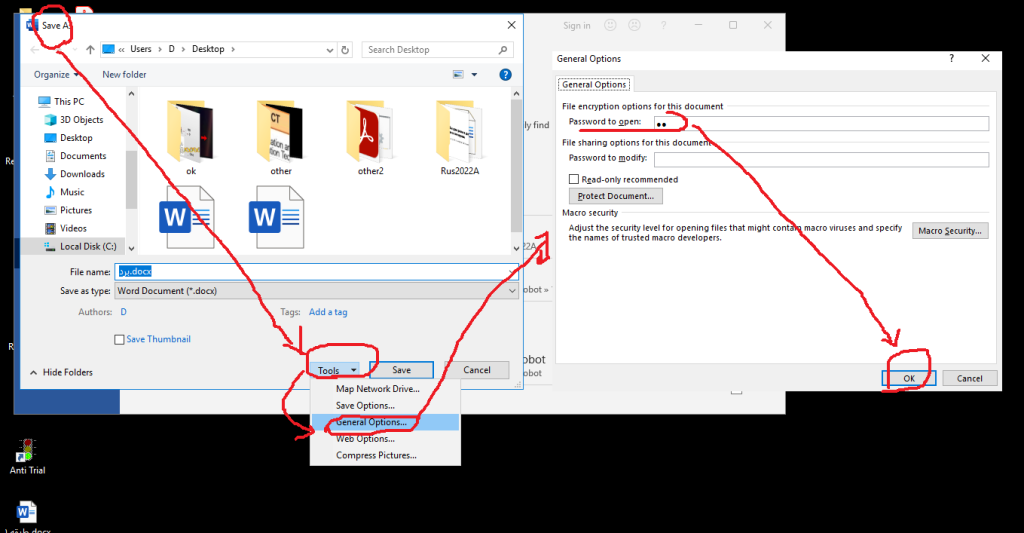

1-37 Setting a password

In the Save As dialog you can set a password for the current document so that others cannot open or edit it without that password. As the next figure shows, in the Save As dialog choose Security Options from the Tools menu and then type the password in the Open or Modify box.

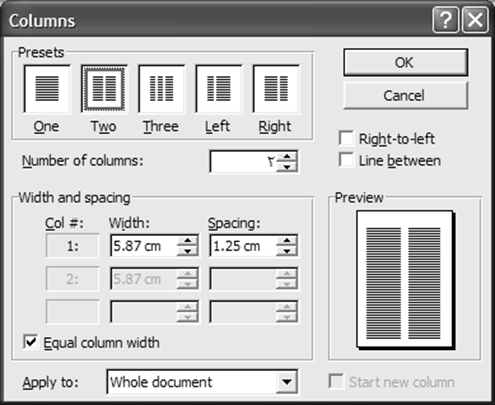

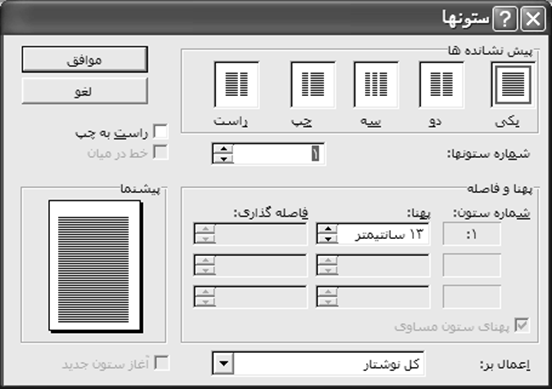

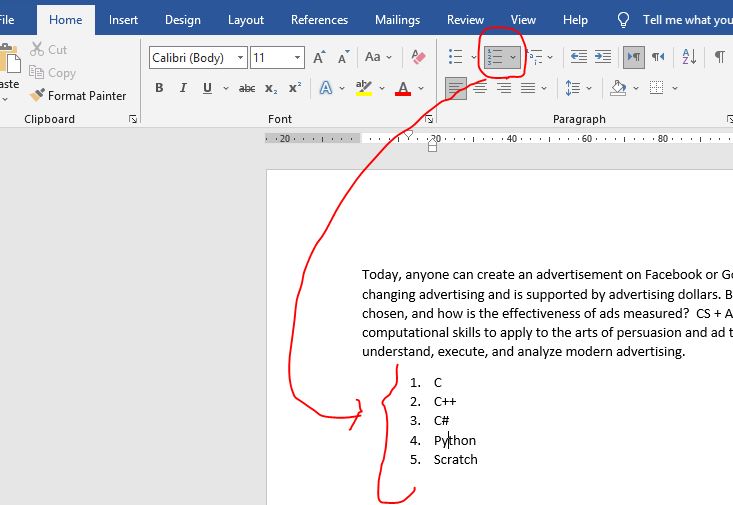

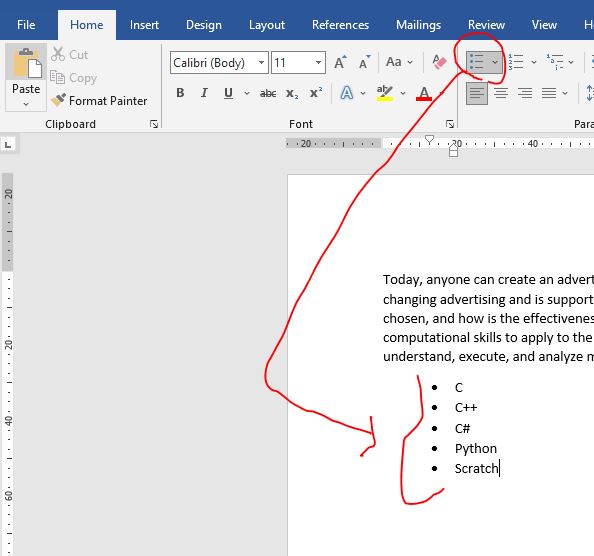

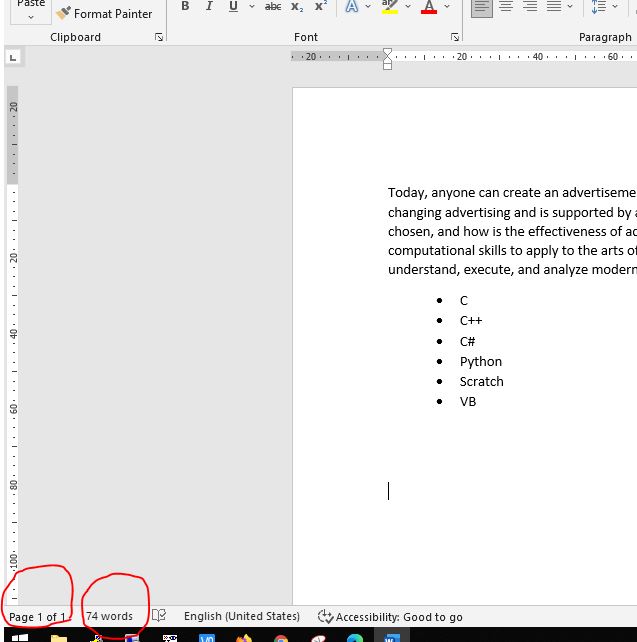

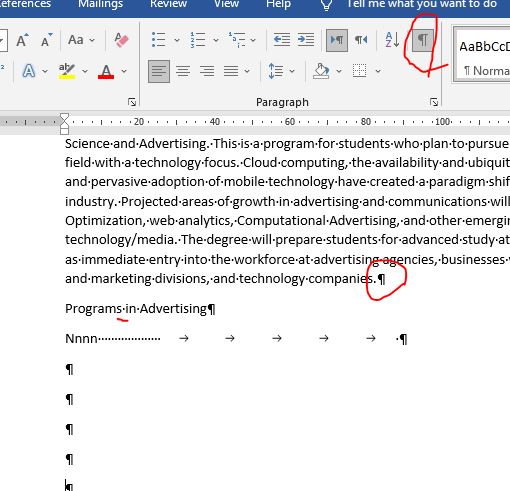

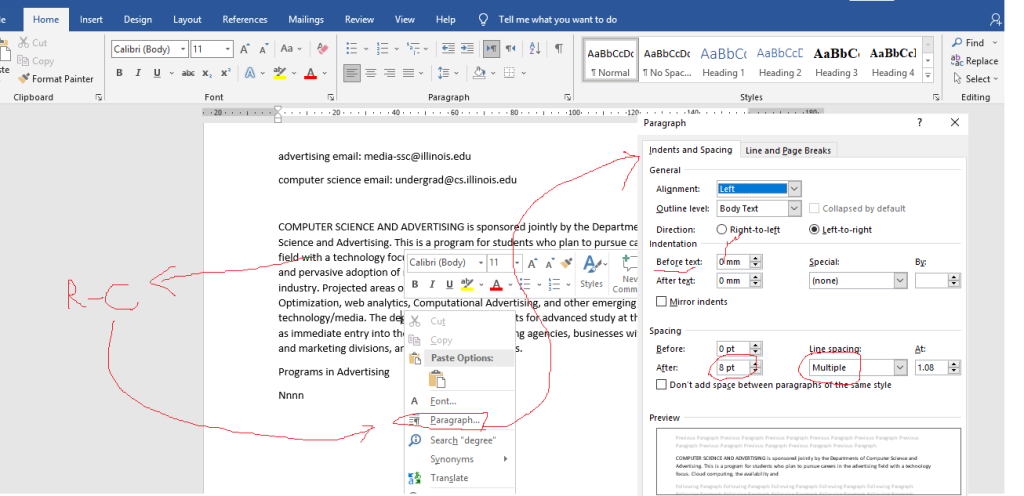

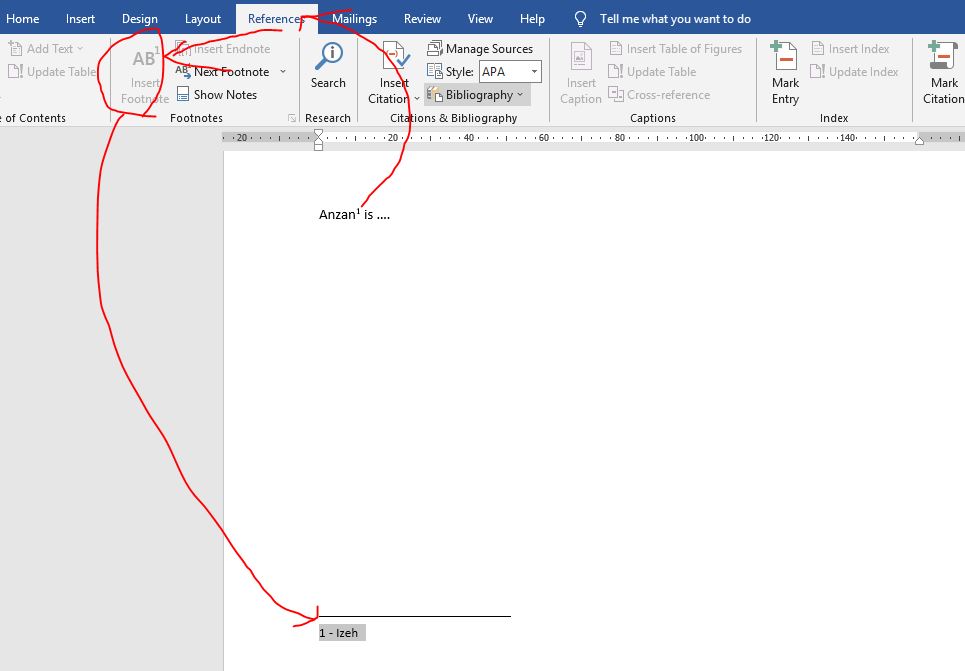

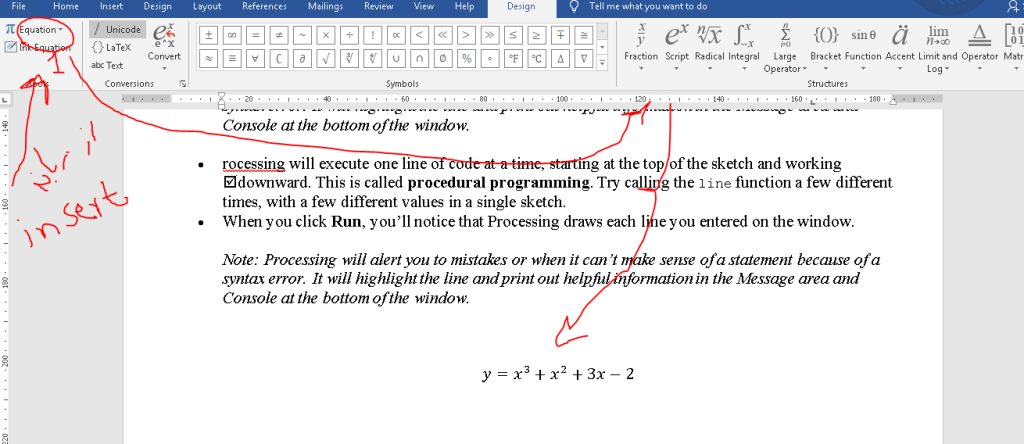

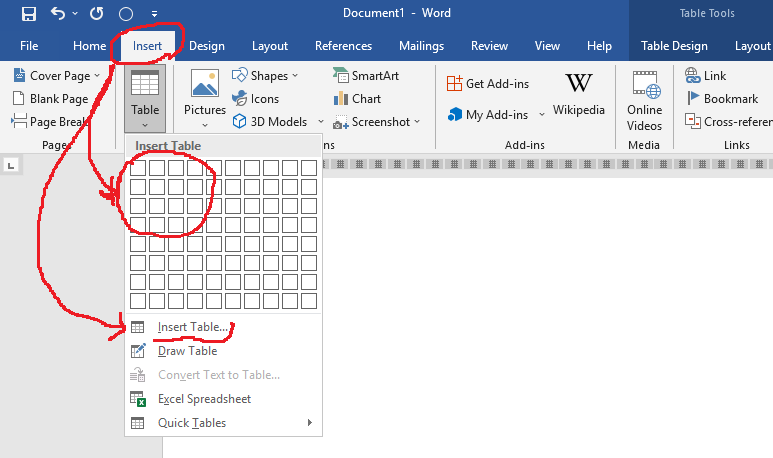

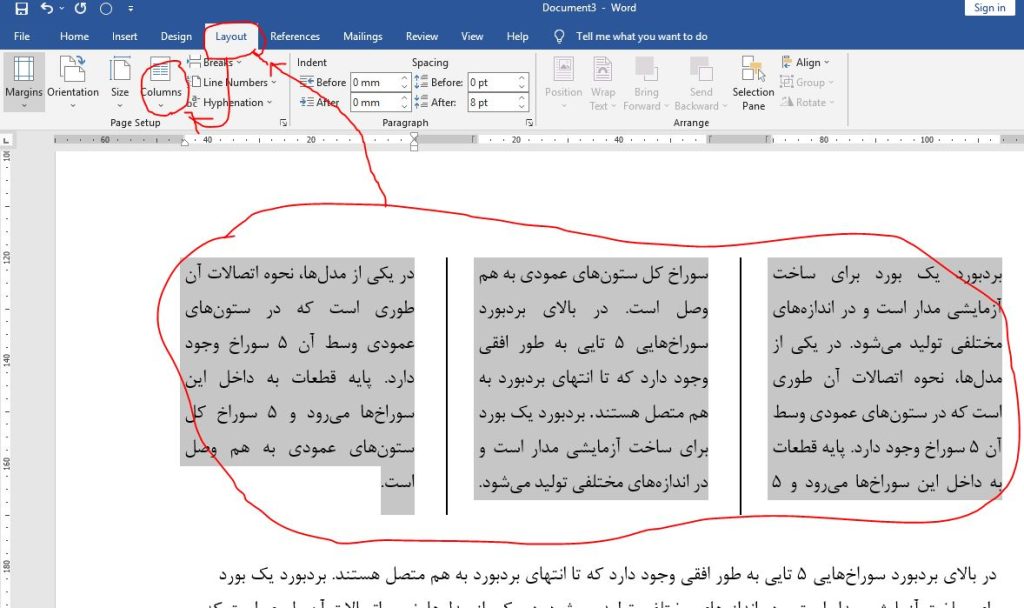

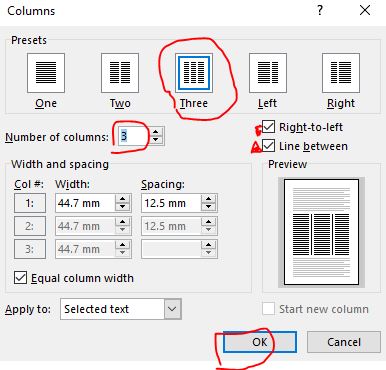

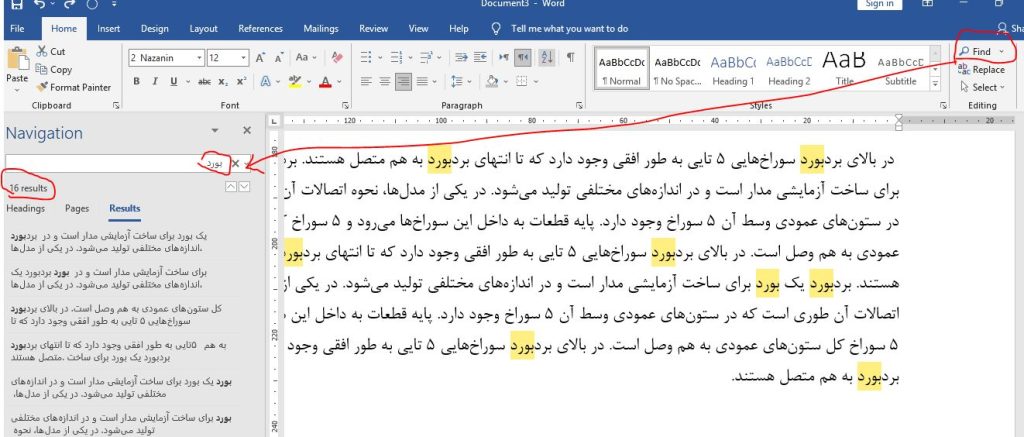

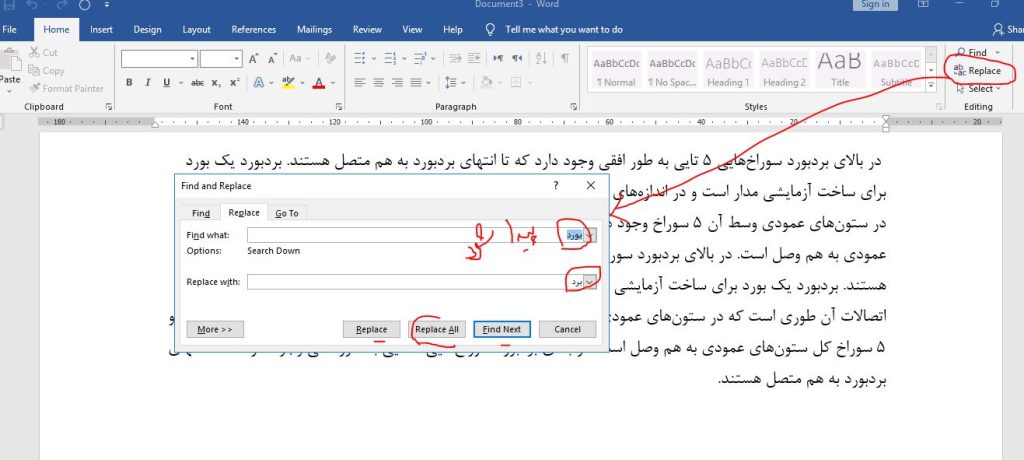

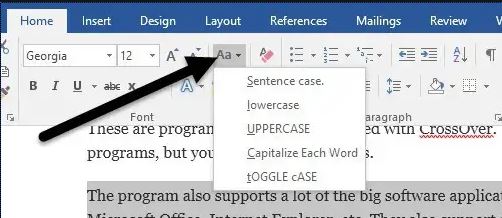

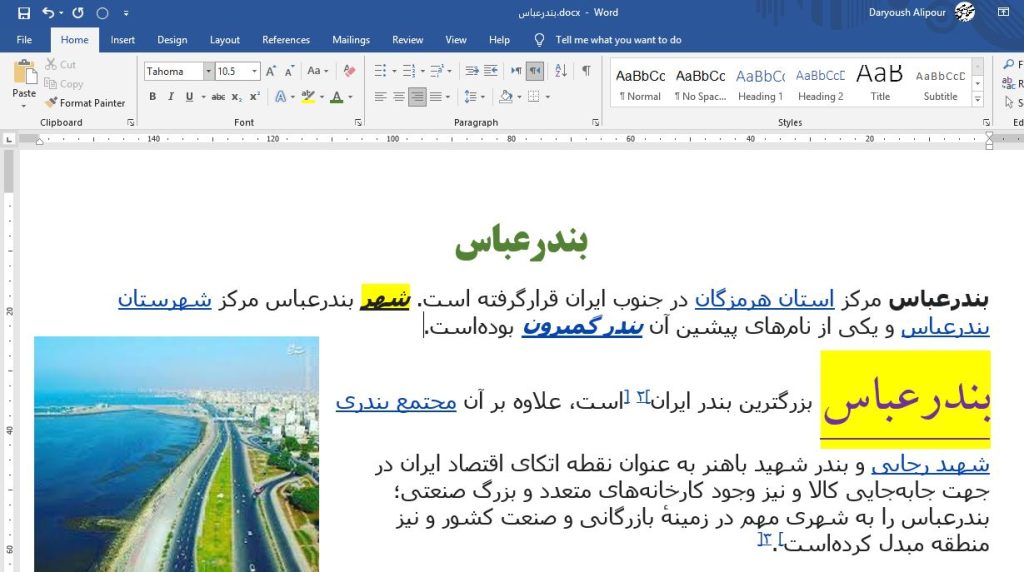

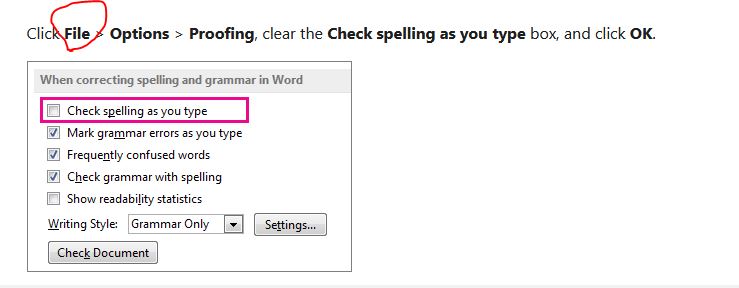

1-38 Creating a comment (Comment)